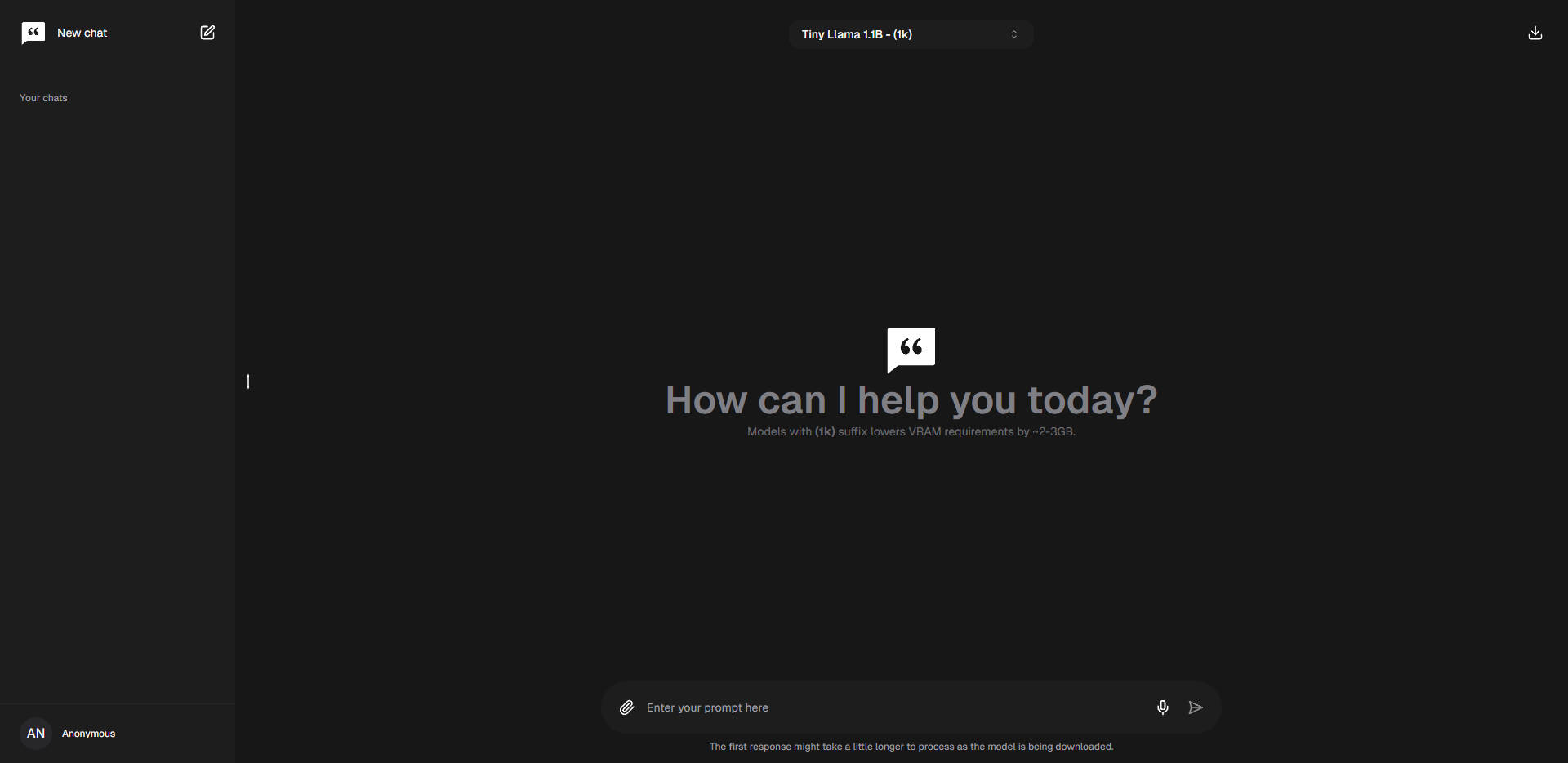

What is ChattyUI?

Chatty is your private AI that leverages WebGPU to run large language models (LLMs) natively and privately in your browser, bringing you the most feature-rich in-browser AI experience.
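An app that depends on WebGPU needs to confirm that the browser actually supports it before downloading a model. The sketch below is an assumption about how such a check might look, not ChattyUI's code. It uses the standard `navigator.gpu.requestAdapter()` call. The small `GpuLike` and `NavigatorLike` interfaces and the `hasWebGpu` function are hypothetical stand-ins that let the snippet compile without the `@webgpu/types` package.

```typescript
// Hypothetical structural types so this compiles without @webgpu/types.
interface GpuLike {
  requestAdapter(): Promise<object | null>;
}
interface NavigatorLike {
  gpu?: GpuLike;
}

// Hypothetical helper: resolves to true only if the WebGPU API is exposed
// and the browser can provide a GPU adapter.
async function hasWebGpu(nav: NavigatorLike | undefined): Promise<boolean> {
  if (!nav || !nav.gpu) return false; // WebGPU API not exposed at all
  try {
    const adapter = await nav.gpu.requestAdapter();
    return adapter !== null; // null means no suitable adapter was found
  } catch {
    return false; // treat unexpected errors as "not supported"
  }
}

// In a browser you would call: hasWebGpu(navigator as unknown as NavigatorLike)
```

Checking for a non-null adapter, not just the presence of `navigator.gpu`, matters because some browsers expose the API but cannot provide an adapter on certain hardware.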

Features

In-browser privacy: All AI models run locally (client-side) on your hardware, so your data is processed only on your PC. No server-side processing!

Offline: Once a model has been downloaded, you can use it without an active internet connection.

Chat history: Access and manage your conversation history.

Support for new open-source models: Chat with popular open-source models such as Gemma, Llama 2, Llama 3, and Mistral!

Responsive design: If your phone supports WebGL, you can use Chatty just as you would on a desktop.

Intuitive UI: Inspired by popular AI interfaces such as Gemini and ChatGPT, so the user experience feels familiar.

Markdown & code highlighting: Responses returned as Markdown are rendered as Markdown, and code in messages is syntax-highlighted for easy reading.

Chat with files: Load files (PDFs and all non-binary files are supported, even code files) and ask the models questions about them, fully locally! Your documents are never processed outside your local environment, thanks to XenovaTransformerEmbeddings and MemoryVectorStore.

Custom memory support: Add custom instructions/memory to allow the AI to provide better and more personalized responses.

Export chat messages: Generate and save your chat messages in either JSON or Markdown format.

Voice input support: Interact with the models using your voice.

Regenerate responses: Not quite the response you were hoping for? Quickly regenerate it without having to write out your prompt again.

Light & Dark mode: Switch between light and dark mode.
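As described, the chat-with-files feature embeds your documents and keeps them in an in-memory vector store, then retrieves the passages closest to your question. The TypeScript sketch below illustrates only that retrieval step under stated assumptions. It is not ChattyUI's code and not the LangChain `MemoryVectorStore` API. The class `TinyMemoryStore` is hypothetical. The hand-written 3-dimensional vectors stand in for real embeddings, such as those XenovaTransformerEmbeddings would produce. Cosine similarity is assumed as the ranking metric.

```typescript
// Hypothetical toy store: illustrates in-memory similarity search,
// not the actual LangChain MemoryVectorStore API.
interface StoredChunk {
  text: string;
  vector: number[];
}

// Cosine similarity: dot(a, b) / (|a| * |b|), ranging from -1 to 1.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("Vectors must have the same dimension");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // avoid division by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

class TinyMemoryStore {
  private chunks: StoredChunk[] = [];

  add(text: string, vector: number[]): void {
    this.chunks.push({ text, vector });
  }

  // Return the k stored chunks most similar to the query vector, best first.
  search(query: number[], k: number): StoredChunk[] {
    return this.chunks
      .map((chunk) => ({ chunk, score: cosineSimilarity(query, chunk.vector) }))
      .sort((x, y) => y.score - x.score)
      .slice(0, k)
      .map((entry) => entry.chunk);
  }
}

// Made-up 3-dimensional "embeddings"; real embedding models output far more dimensions.
const store = new TinyMemoryStore();
store.add("Invoices are due within 30 days.", [0.9, 0.1, 0.0]);
store.add("The office is closed on Sundays.", [0.0, 0.2, 0.9]);
store.add("Late payments incur a 2% fee.", [0.8, 0.3, 0.1]);

const top = store.search([1.0, 0.2, 0.0], 2);
console.log(top.map((c) => c.text));
```

In a full pipeline the top-ranked chunks would then be inserted into the model's prompt as context. That step is omitted here.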
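Exporting a conversation amounts to serializing the message list into one of two text formats. The sketch below assumes a hypothetical `ChatMessage` shape with `role` and `content` fields and hypothetical `exportAsJson`/`exportAsMarkdown` helpers. ChattyUI's actual data model and export layout may differ.

```typescript
// Hypothetical message shape; ChattyUI's real data model may differ.
type Role = "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// JSON export: pretty-printed with 2-space indentation so the file is human-readable.
function exportAsJson(messages: ChatMessage[]): string {
  return JSON.stringify(messages, null, 2);
}

// Markdown export: one heading per message, with content kept verbatim
// so any Markdown or code blocks inside replies survive unchanged.
function exportAsMarkdown(messages: ChatMessage[]): string {
  return messages
    .map((m) => `### ${m.role === "user" ? "User" : "Assistant"}\n\n${m.content}`)
    .join("\n\n");
}

const chat: ChatMessage[] = [
  { role: "user", content: "What is WebGPU?" },
  { role: "assistant", content: "A web API for GPU access." },
];

console.log(exportAsMarkdown(chat));
```

In a browser, the resulting string could be wrapped in a `Blob` and offered as a download through an object URL created with `URL.createObjectURL`.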

ChattyUI Alternatives

ChatWise is a desktop AI chatbot app for macOS and Windows. It integrates multiple LLMs such as GPT-4 and offers speed, privacy through local data storage, multimodal input, web search, and more. Ideal for researchers, developers, and content creators.

ChatLLM Pro is an offline generative AI chat extension for the browser. It lets you have private conversations with the page you are viewing without leaking any data to third-party services such as ChatGPT. Download it at chatllm.pro.

RecurseChat for Mac: Private, offline AI chat with local LLMs & RAG. Seamlessly manage ChatGPT/Claude. Secure, zero-config, and powerful.

BrowserAI: Run production-ready LLMs directly in your browser. It's simple, fast, private, and open-source. Features include WebGPU acceleration, zero server costs, and offline capability. Ideal for developers, companies, and hobbyists.

Enhanced ChatGPT Clone: Supports OpenAI, GPT-4 Vision, Bing, Anthropic, OpenRouter, Google Gemini, AI model switching, message search, LangChain, DALL-E 3, ChatGPT Plugins, OpenAI Functions, a secure multi-user system, and presets. Completely open-source for self-hosting.