What is Code2LLM?

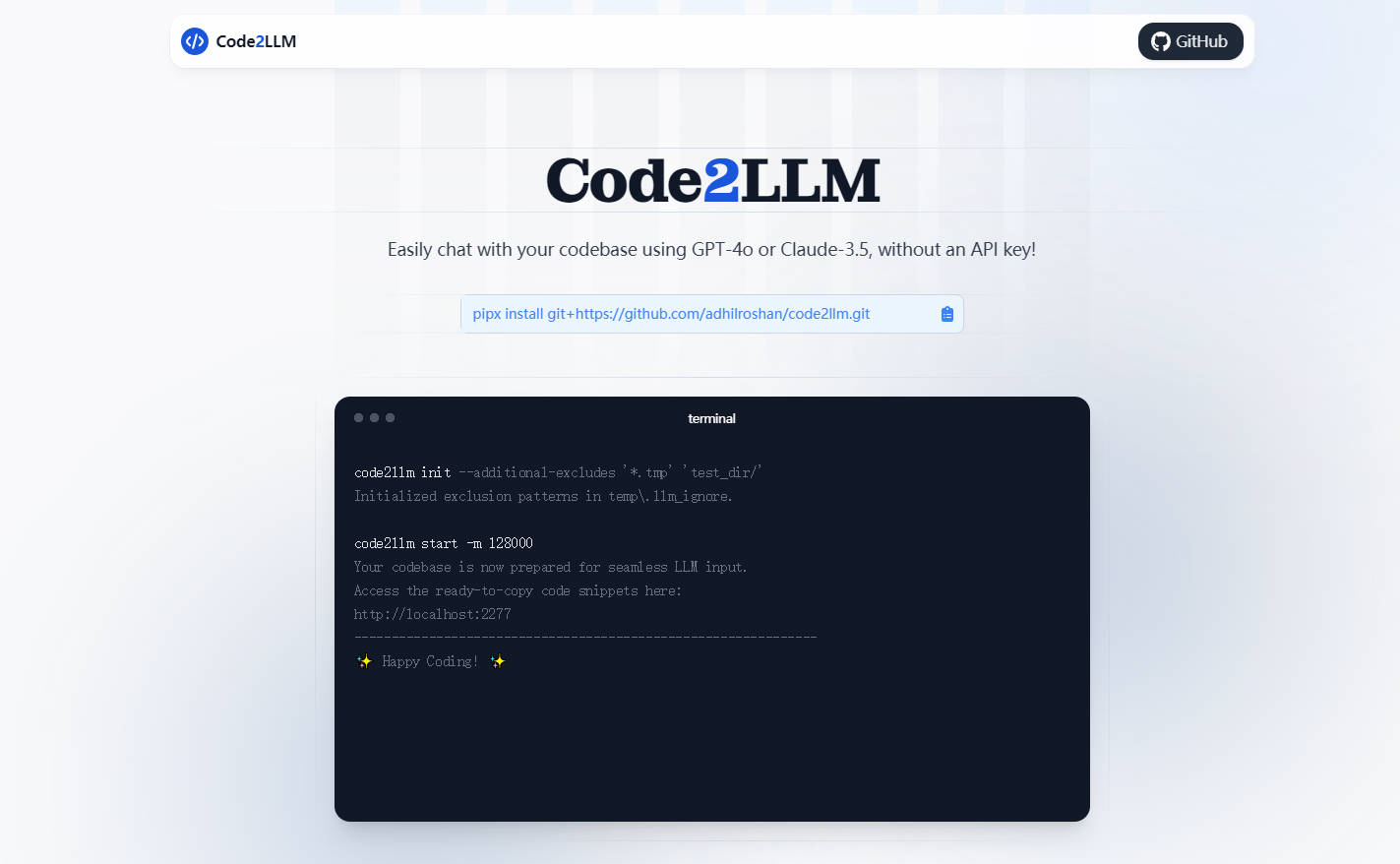

Code2LLM is a CLI tool for asking questions about your codebase using models such as GPT-4o and Claude 3.5 Sonnet. It prepares your code so you can interact with these models directly, and it does not require API keys. The goal is to give developers quick answers about their own code and cut down on manual copy-and-paste work.

Key Features:

📂 Code Extraction: Extracts and formats code from a specified directory, making it easily accessible for analysis.

🧩 Chunking: Splits code into manageable chunks, ensuring it fits within the input constraints of language models.

🌐 Web Interface: Offers a user-friendly interface to view, copy, and interact with extracted code chunks.

💻 CLI Support: Provides command-line interface capabilities for initializing and running the extraction process.

🛠️ Customizable Exclusions: Allows users to define patterns to exclude specific files and directories from processing.
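The extraction, exclusion, and chunking features above can be illustrated with a minimal Python sketch. This is not Code2LLM's actual implementation: the function names (`is_excluded`, `collect_files`, `chunk_blocks`), the glob-style exclusion patterns, and the use of a character budget as a stand-in for a model's input limit are all assumptions made for illustration.

```python
import fnmatch
import os


def is_excluded(rel_path, patterns):
    """Return True if the path, or any single component of it, matches a pattern.

    rel_path uses forward slashes; patterns are glob-style (an assumption).
    """
    parts = rel_path.split("/")
    return any(
        fnmatch.fnmatch(rel_path, pattern)
        or any(fnmatch.fnmatch(part, pattern) for part in parts)
        for pattern in patterns
    )


def collect_files(root, patterns):
    """Walk root and return sorted relative paths that match no exclusion pattern."""
    kept = []
    for dirpath, dirnames, filenames in os.walk(root):
        rel_dir = os.path.relpath(dirpath, root)

        def to_rel(name):
            # Normalize to a forward-slash path relative to root.
            joined = os.path.normpath(os.path.join(rel_dir, name))
            return joined.replace(os.sep, "/")

        # Prune excluded directories in place so os.walk skips their contents.
        dirnames[:] = [d for d in dirnames if not is_excluded(to_rel(d), patterns)]
        for name in filenames:
            rel = to_rel(name)
            if not is_excluded(rel, patterns):
                kept.append(rel)
    return sorted(kept)


def format_file(root, rel_path):
    """Read one file and prefix it with a header naming its path."""
    full_path = os.path.join(root, rel_path)
    with open(full_path, encoding="utf-8", errors="replace") as handle:
        return f"### {rel_path}\n{handle.read()}\n"


def chunk_blocks(blocks, max_chars):
    """Greedily pack text blocks into chunks of at most max_chars characters.

    A block longer than max_chars is split into pieces that each fit.
    """
    if max_chars <= 0:
        raise ValueError("max_chars must be positive")
    chunks, current = [], ""
    for block in blocks:
        pieces = [block[i:i + max_chars] for i in range(0, len(block), max_chars)]
        for piece in pieces:
            # Start a new chunk when adding this piece would exceed the budget.
            if current and len(current) + len(piece) > max_chars:
                chunks.append(current)
                current = ""
            current += piece
    if current:
        chunks.append(current)
    return chunks
```

A typical call would be `chunk_blocks([format_file(root, p) for p in collect_files(root, ["node_modules", "*.log"])], 8000)`, where the patterns and the 8000-character budget are placeholder values. A real tool would more likely budget in tokens than in characters, since model input limits are defined in tokens.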

Conclusion:

Code2LLM simplifies code analysis by combining code extraction, chunking, and customizable exclusions behind both a CLI and a web interface. For developers who want to ask language models about their own code, it offers a direct way to improve productivity and gain deeper insight into a codebase.

Code2LLM Alternatives

- LLxprt Code: Universal AI CLI for multi-model LLMs. Access Google, OpenAI, Anthropic & more from your terminal. Boost coding, debugging & automation.

- Code2Prompt simplifies code ingestion, turning your codebase into structured prompts for AI and automation.

- Discover Code Llama, a cutting-edge AI tool for code generation and understanding. Boost productivity, streamline workflows, and empower developers.

- OneFileLLM: CLI tool to unify data for LLMs. Supports GitHub, ArXiv, web scraping & more. XML output & token counts. Stop data wrangling!

- Claude Code is an agentic coding tool that lives in your terminal, understands your codebase, and helps you code faster by executing routine tasks, explaining complex code, and handling git workflows, all through natural language commands.