CogniSelect

NativeMind

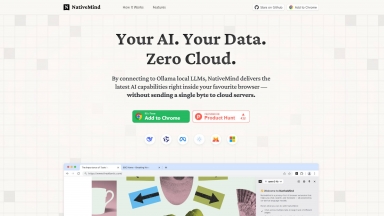

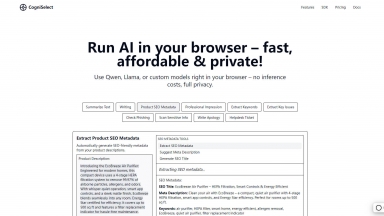

CogniSelect

| Attribute | Value |
| --- | --- |
| Launched | 2025-05 |
| Pricing Model | Free |
| Starting Price | |
| Tech used | Vercel, Gzip, OpenGraph, Progressive Web App, HSTS |
| Tag | Inference APIs, Developer Tools, Software Development |

NativeMind

| Attribute | Value |
| --- | --- |
| Launched | |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | Text Generators, Summarize Text, Browser Extension |

CogniSelect Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | |
| Country | |
| Month Visit | |

NativeMind Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | 2,025,078 |
| Country | Korea, Republic of |
| Month Visit | 10,592 |

Estimated traffic data from Similarweb

What are some alternatives?

local.ai - Explore Local AI Playground, a free app for offline AI experimentation. Features include CPU inferencing, model management, and more.

Browserai.dev - BrowserAI: Run production-ready LLMs directly in your browser. It's simple, fast, private, and open-source. Features include WebGPU acceleration, zero server costs, and offline capability. Ideal for developers, companies, and hobbyists.

ChattyUI - Open-source, feature-rich Gemini/ChatGPT-like interface for running open-source models (Gemma, Mistral, Llama 3, etc.) locally in the browser using WebGPU. No server-side processing: your data never leaves your PC!

Kolosal AI - Kolosal AI is an open-source platform that enables users to run large language models (LLMs) locally on devices like laptops, desktops, and even Raspberry Pi, prioritizing speed, efficiency, privacy, and eco-friendliness.