InternLM2

InternLM2

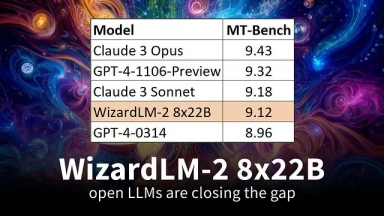

WizardLM-2

InternLM2

| Attribute | Value |
| --- | --- |
| Launched | 2024 |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | Chatbot Builder, Developer Tools, Agent Development Frameworks |

WizardLM-2

| Attribute | Value |
| --- | --- |
| Launched | |
| Pricing Model | Free |
| Starting Price | |
| Tech used | GitHub Pages |
| Tag | Task Automation |

InternLM2 Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | |
| Country | |
| Month Visit | |


WizardLM-2 Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | 3,227,668 |
| Country | United States |
| Month Visit | 14,219 |


Estimated traffic data from Similarweb

What are some alternatives?

StableLM - Discover StableLM, an open-source language model by Stability AI. Generate high-performing text and code on personal devices with small and efficient models. Transparent, accessible, and supportive AI technology for developers and researchers.

LM Studio - LM Studio is an easy-to-use desktop app for experimenting with local and open-source Large Language Models (LLMs). The cross-platform app lets you download and run any ggml-compatible model from Hugging Face, and provides a simple yet powerful UI for model configuration and inference. The app uses your GPU when possible.

PolyLM - PolyLM is an open-source polyglot LLM that supports 18 languages. It is aimed at developers, researchers, and businesses with multilingual needs.

WizardLM - WizardLM aims to improve language model performance and accuracy on coding, math, and NLP tasks.