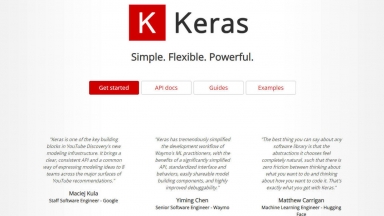

Keras vs Hyperpod AI

| | Keras | Hyperpod AI |
|---|---|---|
| Launched | 2015-04 | 2024-12 |
| Pricing Model | | Free Trial |
| Starting Price | | |
| Tech Used | Google Tag Manager, Amazon AWS CloudFront, Google Fonts, Bootstrap, Amazon AWS S3 | |
| Tags | Software Development, Data Science, Code Generation | MLOps, Infrastructure, App Generators |

Rank/Visit

| | Keras | Hyperpod AI |
|---|---|---|
| Global Rank | 147,597 | 561,239 |
| Country | India | |
| Monthly Visits | 308,086 | 47,892 |

Estimated traffic data from Similarweb

What are some alternatives?

DeepKE - A unified toolkit for high-precision knowledge extraction. Handles low-resource, multimodal, and document-level data to build robust knowledge graphs.

Caffe - Caffe is a deep learning framework made with expression, speed, and modularity in mind.

DeepInfra - Run top AI models through a simple API with pay-per-use pricing. Low-cost, scalable, production-ready infrastructure.

KeaML Deployments - Streamlines AI development with pre-configured environments, optimized resources, and collaborative tools.

ktransformers - KTransformers, an open-source project by Tsinghua's KVCache.AI team and QuJing Tech, optimizes large language model inference. It lowers hardware requirements, running 671B-parameter models on a single GPU with 24 GB of VRAM, and boosts inference speed (up to 286 tokens/s for preprocessing and 14 tokens/s for generation). It is suitable for personal, enterprise, and academic use.