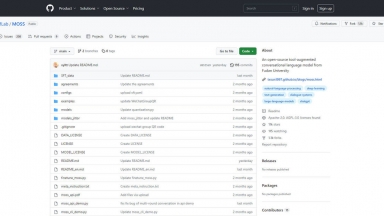

MOSS

Molmo AI

MOSS

| Attribute | Value |
| --- | --- |
| Launched | 2023 |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | Chatbot Character, Question Answering, Text Generators |

Molmo AI

| Attribute | Value |
| --- | --- |
| Launched | 2024-09 |
| Pricing Model | Free Trial |
| Starting Price | |
| Tech used | Cloudflare CDN, Next.js, Gzip, OpenGraph, Webpack, YouTube |
| Tag | Data Analysis, Data Science |

MOSS Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | 0 |
| Country | |
| Monthly Visits | 0 |

Molmo AI Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | 1,382,983 |
| Country | United States |
| Monthly Visits | 22,012 |

Estimated traffic data from Similarweb

What are some alternatives?

XVERSE-MoE-A36B - A multilingual large language model developed by XVERSE Technology Inc.

JetMoE-8B - JetMoE-8B was trained for less than $0.1 million, yet it outperforms LLaMA2-7B from Meta AI, which has multi-billion-dollar training resources. LLM training can be much cheaper than is generally thought.

Moonshine - Moonshine speech-to-text models are fast, accurate, and resource-efficient, which makes them well suited to on-device processing such as real-time transcription and voice commands. Outperforms Whisper.

Yuan2.0-M32 - Yuan2.0-M32 is a Mixture-of-Experts (MoE) language model with 32 experts, of which 2 are active.