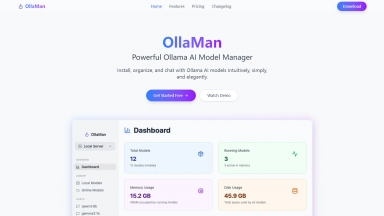

OllaMan

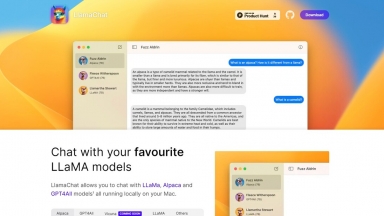

LlamaChat

OllaMan

| Attribute | Details |
|---|---|
| Launched | 2025-04 |
| Pricing Model | Free Trial |
| Starting Price | |
| Tech Used | Cloudflare Analytics, Umami, Cloudflare CDN, Next.js |
| Tag | Software Development, Developer Tools, Chatbot Builder |

LlamaChat

| Attribute | Details |
|---|---|
| Launched | 2023-04 |
| Pricing Model | Free |
| Starting Price | |
| Tech Used | Next.js, Nginx |
| Tag | Answer Generators, Developer Tools, Chatbot Builder |

OllaMan Rank/Visit

| Metric | Value |
|---|---|
| Global Rank | |
| Country | |
| Month Visit | |

LlamaChat Rank/Visit

| Metric | Value |
|---|---|
| Global Rank | 10,385,447 |
| Country | United States |
| Month Visit | 248 |

Estimated traffic data from Similarweb

What are some alternatives?

Ollama - Run large language models locally using Ollama. Enjoy easy installation, model customization, and seamless integration for NLP and chatbot development.

oterm - A terminal UI for Ollama: customize models, save chats, integrate tools via MCP (Model Context Protocol), and display images. Streamline your AI workflow!

Ollama Docker - Streamline your Ollama deployments using Docker Compose. Dive into a containerized environment designed for simplicity and efficiency.

ManyLLM - Unify and secure your local LLM workflows. A privacy-first workspace for developers and researchers, with OpenAI API compatibility and local RAG.