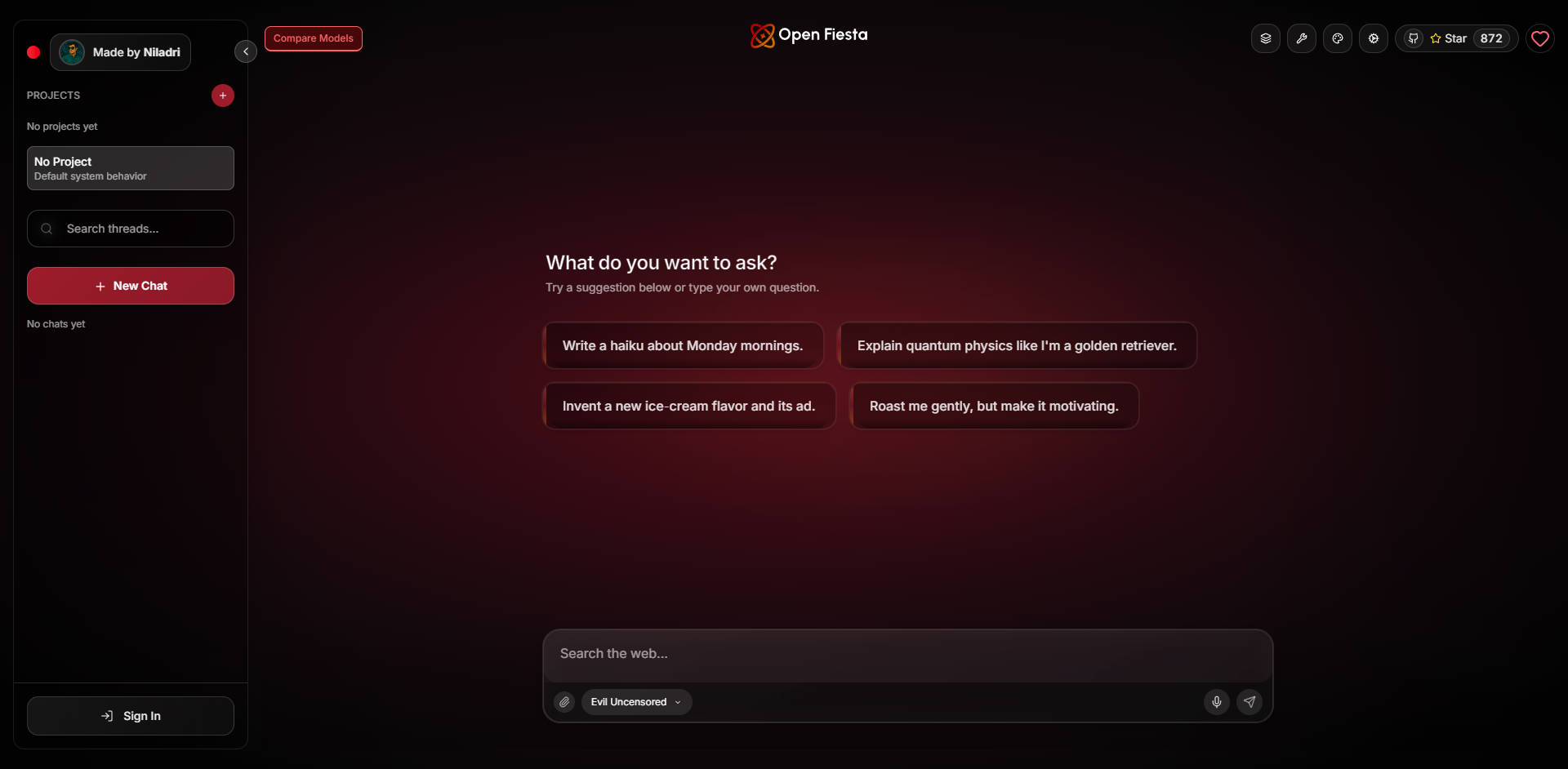

What is Open-Fiesta?

Open-Fiesta is an open-source, multi-model AI chat playground designed for developers and AI enthusiasts. Built on Next.js, it allows you to run, compare, and evaluate responses from a wide range of AI models side-by-side in a clean, self-hostable interface.

Key Features

⚡ Run Multiple Models Simultaneously: Select up to 5 different AI models and send them the same prompt at once. This side-by-side comparison makes it efficient to evaluate output quality, tone, and accuracy for your specific use case.

🔄 Broad Provider & Model Support: Connect to major providers like Google Gemini and OpenRouter, giving you access to dozens of popular models including Llama 3.1, Mistral, Qwen, and DeepSeek. You can also integrate your own locally-hosted models via built-in Ollama support.

🔎 Enhanced Interaction Tools: Go beyond simple text prompts. Toggle on-demand web search to provide models with real-time information or attach images (with Gemini) to test multimodal capabilities, all within a streamlined chat interface.

📦 Self-Hosted with Full Control: Deploy Open-Fiesta on your own infrastructure using Docker. With Supabase integration for authentication and chat persistence, you maintain complete ownership of your conversations and data.

🔗 Shareable Conversations: Easily share a specific chat session with colleagues or friends through a unique, shareable link. This is perfect for collaborating on prompt engineering or showcasing interesting model behaviors.
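To make the "same prompt, several models at once" idea from the feature list concrete, here is a minimal TypeScript sketch of a fan-out. It is not taken from the Open-Fiesta codebase: `compareModels`, `ModelCaller`, and `ComparisonResult` are hypothetical names, and the actual provider call is passed in as a function so the sketch makes no assumptions about any provider's API. The 5-model cap mirrors the limit described above.

```typescript
// Hypothetical shape: sends one prompt to one model and resolves with its reply text.
type ModelCaller = (model: string, prompt: string) => Promise<string>;

interface ComparisonResult {
  model: string;
  ok: boolean;
  output: string; // reply text on success, error message on failure
  elapsedMs: number; // wall-clock time for this model's call
}

const MAX_MODELS = 5; // mirrors the 5-model limit described in the feature list

// Send the same prompt to every selected model concurrently and collect
// one result per model, in the same order as the input list.
async function compareModels(
  models: string[],
  prompt: string,
  callModel: ModelCaller,
): Promise<ComparisonResult[]> {
  if (models.length === 0 || models.length > MAX_MODELS) {
    throw new Error(`Select between 1 and ${MAX_MODELS} models`);
  }

  const runs = models.map(async (model): Promise<ComparisonResult> => {
    const started = Date.now();
    try {
      const output = await callModel(model, prompt);
      return { model, ok: true, output, elapsedMs: Date.now() - started };
    } catch (err) {
      // One failing model should not hide the other models' answers.
      const message = err instanceof Error ? err.message : String(err);
      return { model, ok: false, output: message, elapsedMs: Date.now() - started };
    }
  });

  return Promise.all(runs);
}
```

Catching errors per model, rather than letting one rejection fail the whole batch, keeps the comparison useful when a single provider is slow or unavailable.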

Use Cases

Model Evaluation for Developers: A developer building a customer service chatbot can use Open-Fiesta to send a complex user query to Llama 3.1, Gemini 1.5 Flash, and a fine-tuned Mistral model simultaneously. By comparing the responses side-by-side, they can quickly determine which model provides the most accurate, helpful, and appropriately toned answer for their application without switching between different platforms.

Prompt Engineering & Refinement: A marketing specialist can test a new ad copy prompt across five different models to see which one generates the most creative and compelling options. They can then refine the prompt and re-run the comparison in seconds to optimize their results.

Exploring Local Models: An AI enthusiast running models locally with Ollama can use Open-Fiesta as a unified interface to chat with llama3, mistral, and gemma at the same time. This allows them to experiment with the latest open-source models on their own hardware without needing to rely on third-party services.
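For readers curious what talking to several local models looks like at the HTTP level, the sketch below builds one request per model for Ollama's `/api/chat` endpoint. It assumes Ollama is listening on its default address, `http://localhost:11434`; the helper name `buildOllamaChatRequests` and its types are hypothetical, and this is an illustration rather than Open-Fiesta's own integration code.

```typescript
// Assumption: Ollama is running locally on its default port.
const OLLAMA_BASE_URL = "http://localhost:11434";

interface OllamaChatRequest {
  url: string;
  body: {
    model: string;
    messages: { role: "user"; content: string }[];
    stream: boolean;
  };
}

// Build one /api/chat request per local model, all carrying the same prompt.
function buildOllamaChatRequests(
  models: string[],
  prompt: string,
  baseUrl: string = OLLAMA_BASE_URL,
): OllamaChatRequest[] {
  return models.map((model) => ({
    url: `${baseUrl}/api/chat`,
    body: {
      model,
      messages: [{ role: "user", content: prompt }],
      stream: false, // ask for one complete JSON reply instead of a token stream
    },
  }));
}

// Example: the three models named in the use case above.
const requests = buildOllamaChatRequests(
  ["llama3", "mistral", "gemma"],
  "Summarize the trade-offs of running models locally.",
);
```

Each entry can then be sent as a POST with `JSON.stringify(body)` as the payload. With `stream: false`, Ollama answers with a single JSON object whose `message.content` field holds the reply.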

Why Choose Open-Fiesta?

Open-Fiesta is designed for users who need more than a single-model chat interface. Its key advantage lies in providing a flexible and powerful environment for direct, multi-model evaluation.

Unmatched Flexibility: Seamlessly switch between cloud-based APIs from Google and OpenRouter and your own locally-run Ollama models. You aren't locked into a single ecosystem.

True Comparative Analysis: While other tools let you switch models, Open-Fiesta is built specifically for simultaneous, side-by-side comparison, so differences in quality, tone, and accuracy show up directly on the same prompt.

Complete Data Privacy and Ownership: Because Open-Fiesta is self-hostable and open source, you control the entire environment. Your API keys, prompts, and conversation history remain on your infrastructure, not a third party's.

Conclusion

Open-Fiesta provides the tools you need to move beyond basic AI chat and into serious model evaluation. By enabling direct, simultaneous comparisons in a clean and controllable environment, it empowers you to make informed decisions about which models best suit your needs.

Explore the Open-Fiesta project to take control of your AI experimentation.