BenchLLM by V7 vs LiteLLM

BenchLLM by V7

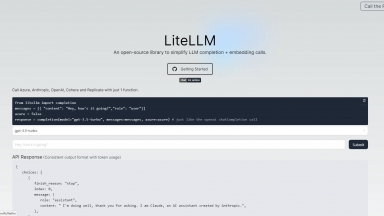

LiteLLM

LiteLLM

| | BenchLLM by V7 | LiteLLM |
|---|---|---|
| Launched | 2023-07 | 2023-08 |
| Pricing Model | Free | Free |
| Starting Price | | |
| Tech Used | Framer, Google Fonts, HSTS | Next.js, Vercel, Webpack, HSTS |
| Tags | Test Automation, LLM Benchmark Leaderboard | Gateway |

Rank/Visit

| | BenchLLM by V7 | LiteLLM |
|---|---|---|
| Global Rank | 12,812,835 | 102,564 |
| Country | United States | United States |
| Monthly Visits | 961 | 482,337 |

Estimated traffic data from Similarweb

What are some alternatives?

LiveBench - LiveBench is an LLM benchmark that adds new questions every month from diverse sources and uses objectively verifiable answers for accurate scoring. It currently features 18 tasks in 6 categories, with more planned.

ModelBench - Launch AI products faster with no-code LLM evaluations. Compare 180+ models, craft prompts, and test confidently.

AI2 WildBench Leaderboard - WildBench is a benchmarking tool that evaluates LLMs on a diverse set of real-world tasks. It helps teams improve AI performance and understand a model's limitations in practical scenarios.

Deepchecks - Deepchecks is an end-to-end platform for LLM evaluation. Systematically test, compare, and monitor your AI apps from development to production, reduce hallucinations, and ship faster.

Confident AI - Companies of all sizes use Confident AI to justify why their LLM deserves to be in production.