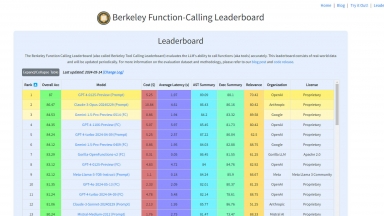

Berkeley Function-Calling Leaderboard


Klu LLM Benchmarks


Berkeley Function-Calling Leaderboard

| Attribute | Value |
| --- | --- |
| Launched | |
| Pricing Model | Free |
| Starting Price | |
| Tech Used | Google Analytics, Google Tag Manager, cdnjs, Fastly, Google Fonts, Bootstrap, GitHub Pages, Gzip, Varnish, YouTube |
| Tags | LLM Benchmark Leaderboard, Data Analysis, Data Visualization |

Klu LLM Benchmarks

| Attribute | Value |
| --- | --- |
| Launched | 2023-01 |
| Pricing Model | Free |
| Starting Price | |
| Tech Used | Segment |
| Tags | LLM Benchmark Leaderboard, Data Analysis, Data Visualization |

Berkeley Function-Calling Leaderboard Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | |
| Country | |
| Monthly Visits | |


Klu LLM Benchmarks Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | 295,079 |
| Country | India |
| Monthly Visits | 129,619 |


Estimated traffic data from Similarweb

What are some alternatives?

Hugging Face's Open LLM Leaderboard - The Open LLM Leaderboard aims to foster open collaboration and transparency in the evaluation of language models.

Scale Leaderboard - Scale's SEAL Leaderboards rank OpenAI's GPT family of LLMs first in three of the four initial domains used to evaluate models, while Anthropic PBC's popular Claude 3 Opus takes first place in the fourth. Google LLC's Gemini models also perform well, tying with the GPT models for first place in a couple of the domains.

LiveBench - LiveBench is an LLM benchmark that adds new questions every month from diverse sources and uses objective answers for accurate scoring. It currently features 18 tasks across 6 categories, with more planned.

Hugging Face Agent Leaderboard - The Agent Leaderboard helps you choose the best AI agent for your needs, offering unbiased, real-world performance insights across 14 benchmarks.