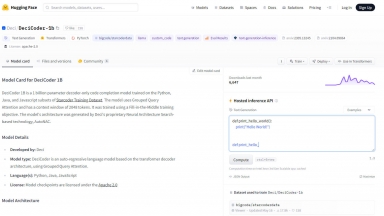

DeciCoder

Replit Code V1.5 3B

DeciCoder

| Attribute | Value |
| --- | --- |
| Launched | 2023 |
| Pricing Model | Free |
| Starting Price | |
| Tech used | Amazon AWS CloudFront, cdnjs, Google Fonts, KaTeX, RSS, Stripe |
| Tag | Code Autocomplete, Code Development, Developer Tools |

Replit Code V1.5 3B

| Attribute | Value |
| --- | --- |
| Launched | 2023.10 |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | Code Autocomplete, Code Development, Documentation Generation |

DeciCoder Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | 0 |
| Country | |
| Monthly Visits | 0 |

Replit Code V1.5 3B Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | |
| Country | |
| Monthly Visits | |

Estimated traffic data from Similarweb

What are some alternatives?

StableCode - StableCode-Completion-Alpha-3B-4K is a 3-billion-parameter, decoder-only code completion model pre-trained on a diverse set of programming languages that topped the Stack Overflow developer survey.

Deci - Deci empowers deep learning developers to accelerate inference on edge or cloud, reach production faster, and maximize hardware potential.

DeepCoder-14B-Preview - DeepCoder is an open-source 14B code model with a 64K context window, trained with reinforcement learning (RL) for long-context coding tasks.

StarCoder - StarCoder and StarCoderBase are Large Language Models for Code (Code LLMs) trained on permissively licensed data from GitHub, including 80+ programming languages, Git commits, GitHub issues, and Jupyter notebooks.