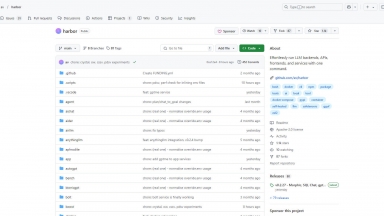

Harbor

LazyLLM

Harbor

| Attribute | Value |
| --- | --- |
| Launched | |
| Pricing Model | Free |
| Starting Price | |
| Tech Used | |
| Tags | MLOps, Task Automation, Developer Tools |

LazyLLM

| Attribute | Value |
| --- | --- |
| Launched | |
| Pricing Model | Free |
| Starting Price | |
| Tech Used | |
| Tags | Low Code, MLOps |

Harbor and LazyLLM Rank/Visit: Global Rank, Country, Month Visit, Top 5 Countries, Traffic Sources (estimated traffic data from Similarweb)

What are some alternatives?

LM Studio - An easy-to-use, cross-platform desktop app for experimenting with local and open-source Large Language Models (LLMs). It lets you download and run any ggml-compatible model from Hugging Face, provides a simple yet powerful UI for model configuration and inference, and uses your GPU when possible.

ManyLLM - Unify and secure your local LLM workflows. A privacy-first workspace for developers and researchers, with OpenAI API compatibility and local RAG.

LLxprt Code - A universal AI CLI for working with multiple LLM providers. Access Google, OpenAI, Anthropic, and more from your terminal for coding, debugging, and automation.

Haystack - An open-source Python framework for building and deploying production-ready LLM applications. Flexible, modular, and built for scale.