JetMoE-8B

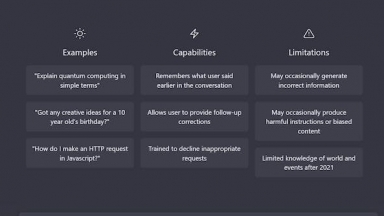

ChatGPT

JetMoE-8B

| Attribute | Value |
| --- | --- |
| Launched | |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | Text Generators, Answer Generators, Chatbot Builder |

ChatGPT

| Attribute | Value |
| --- | --- |
| Launched | 2022-11 |
| Pricing Model | Freemium |
| Starting Price | |
| Tech used | |
| Tag | Content Creation, Chatbot Character, Communication |

JetMoE-8B Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | |
| Country | |
| Monthly Visits | |

ChatGPT Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | 6 |
| Country | United States |
| Monthly Visits | 5,846,786,290 |

Estimated traffic data from Similarweb

What are some alternatives?

XVERSE-MoE-A36B - A multilingual large language model developed by XVERSE Technology Inc.

Molmo AI - An open-source multimodal AI model developed by AI2. It can process and generate various types of data, including text and images.

Yuan2.0-M32 - A Mixture-of-Experts (MoE) language model with 32 experts, of which 2 are active.

OpenBMB - An open-source community building a center for large-scale pre-trained language models, along with tools to accelerate training, tuning, and inference of models with more than 10 billion parameters.

Gemma 3 270M - A compact, highly efficient model for specialized tasks. It can be fine-tuned for precise instruction following and low-cost, on-device deployment.