What is JetMoE-8B?

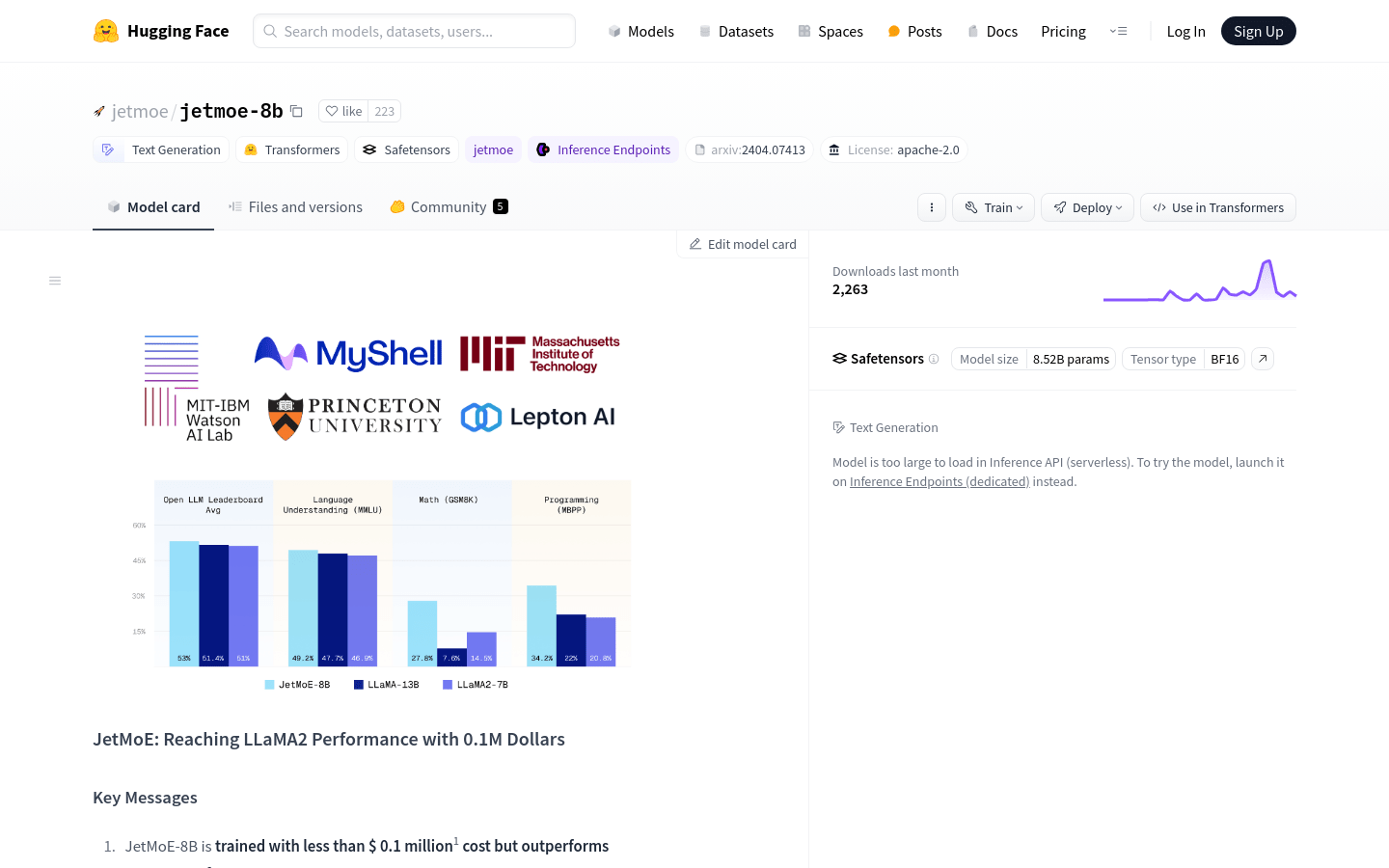

JetMoE-8B, developed by Yikang Shen, Zhen Guo, Tianle Cai, and Zengyi Qin, is an open-source, academia-friendly language model trained at low cost. With a training budget of less than $0.1 million, it outperforms LLaMA2-7B from Meta AI, an organization with multi-billion-dollar training resources. Trained only on public datasets with affordable compute, JetMoE-8B shows that capable language models can be built on a modest budget.

Key Features:

👩‍🔬 Affordable Training: Trained for less than $0.1 million, JetMoE-8B can also be fine-tuned on a limited compute budget, such as a consumer-grade GPU, showing that cost-efficient AI development need not sacrifice quality.

🚀 High Performance: With only 2.2 billion active parameters during inference, JetMoE-8B outperforms models with similar inference compute, such as Gemma-2B.

🌐 Open Source: Built using only public datasets and open-sourced code, JetMoE-8B promotes collaboration and accessibility in the AI community.
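
The gap between a model's total size and its active parameters comes from Mixture-of-Experts routing: each token is sent to only a few experts, so only their weights are used for that token. The sketch below illustrates the idea in plain Python. The expert count, top-k value, and parameter sizes are hypothetical numbers chosen for illustration (picked so the active count lands at 2.2 billion); they are not JetMoE-8B's actual configuration, and the function names are made up for this example.

```python
import math

def top_k_route(gate_logits, k):
    """Pick the k highest-scoring experts and normalize their weights."""
    top = sorted(range(len(gate_logits)),
                 key=lambda i: gate_logits[i], reverse=True)[:k]
    # Softmax over the selected logits only, so the weights sum to 1.
    m = max(gate_logits[i] for i in top)
    exps = [math.exp(gate_logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def total_params(shared, per_expert, num_experts):
    """Parameters stored: shared weights plus every expert."""
    return shared + num_experts * per_expert

def active_params(shared, per_expert, k):
    """Parameters used per token: shared weights plus k routed experts."""
    return shared + k * per_expert

# Hypothetical sizes for illustration only (not JetMoE-8B's real layout).
NUM_EXPERTS, TOP_K = 8, 2
SHARED, PER_EXPERT = 400_000_000, 900_000_000

# Hypothetical router scores for one token, one score per expert.
routes = top_k_route([0.1, 2.0, -1.0, 0.5, 1.5, 0.0, -0.3, 0.2], TOP_K)
stored = total_params(SHARED, PER_EXPERT, NUM_EXPERTS)
used = active_params(SHARED, PER_EXPERT, TOP_K)

print("experts chosen:", [i for i, _ in routes])
print(f"stored: {stored / 1e9:.1f}B, active per token: {used / 1e9:.1f}B")
```

Under these assumed numbers, the model stores several times more parameters than it uses for any single token, which is why inference cost tracks the active count rather than the total.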

Use Cases:

Enhancing Customer Support: JetMoE-8B can power chatbots to provide efficient and accurate responses to customer inquiries, improving user satisfaction and reducing workload for support teams.

Research Assistance: Academic institutions can leverage JetMoE-8B for natural language processing tasks, facilitating advancements in fields like linguistics, psychology, and social sciences.

Personalized Content Generation: Content creators can use JetMoE-8B to generate tailored articles, product descriptions, or marketing materials, optimizing engagement and conversion rates.

Conclusion:

JetMoE-8B shows that strong language-model performance is achievable at a fraction of the usual training cost. Whether for academic research or commercial applications, it lets users work with a capable, fully open language model without a large compute budget.

JetMoE-8B Alternatives:

XVERSE-MoE-A36B: A multilingual large language model developed by XVERSE Technology Inc.

Molmo AI: An open-source multimodal artificial intelligence model developed by AI2. It can process and generate various types of data, including text and images.

Yuan2.0-M32: A Mixture-of-Experts (MoE) language model with 32 experts, of which 2 are active.

OpenBMB: Building a large-scale pre-trained language model center and tools to accelerate training, tuning, and inference of big models with over 10 billion parameters. Join our open-source community and bring big models to everyone.

Gemma 3 270M: Compact, hyper-efficient AI for specialized tasks. Fine-tune for precise instruction following and low-cost, on-device deployment.