LLAMA-Factory

LLAMA-Factory

| Attribute | Value |
|---|---|
| Launched | |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | Low Code |

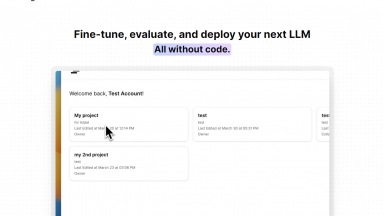

OneLLM

| Attribute | Value |
|---|---|
| Launched | 2024-03 |
| Pricing Model | Freemium |
| Starting Price | $19/mo |
| Tech used | Next.js, Vercel, Gzip, OpenGraph, Webpack, HSTS |
| Tag | Text Analysis, No-Code, Data Analysis |

LLAMA-Factory Rank/Visit

| Metric | Value |
|---|---|
| Global Rank | |
| Country | |
| Month Visit | |
OneLLM Rank/Visit

| Metric | Value |
|---|---|
| Global Rank | 11,268,274 |
| Country | Germany |
| Month Visit | 87 |
Estimated traffic data from Similarweb

What are some alternatives?

Ludwig - Create custom AI models with ease using Ludwig. Scale, optimize, and experiment effortlessly with declarative configuration and expert-level control.

LazyLLM - A low-code framework for building multi-agent LLM applications. Build, iterate on, and deploy complex AI solutions quickly, from prototype to production, while focusing on algorithms rather than engineering.

LM Studio - LM Studio is an easy-to-use desktop app for experimenting with local and open-source Large Language Models (LLMs). The cross-platform app lets you download and run any ggml-compatible model from Hugging Face, and provides a simple yet powerful UI for model configuration and inference. The app uses your GPU when possible.

LlamaEdge - The LlamaEdge project makes it easy to run LLM inference apps locally and to create OpenAI-compatible API services for the Llama 2 series of LLMs.