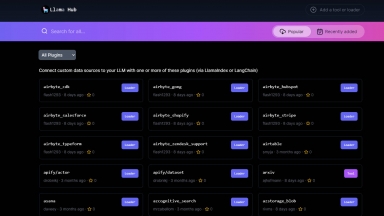

LlamaHub

LlamaIndex

LlamaHub

| Field | Value |
| --- | --- |
| Launched | 2023-02 |
| Pricing Model | Free |
| Starting Price | |
| Tech used | Next.js, Vercel, Gzip, Webpack, HSTS |
| Tags | Data Integration, Question Answering, Data Provider |

LlamaIndex

| Field | Value |
| --- | --- |
| Launched | 2023-05 |
| Pricing Model | Freemium |
| Starting Price | |
| Tech used | Google Tag Manager, Next.js, Vercel, Gzip, OpenGraph, Progressive Web App, Webpack, HSTS |
| Tags | Data Integration, Data Analysis |

LlamaHub Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | 627955 |
| Country | United States |
| Monthly Visits | 53508 |

LlamaIndex Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | 83732 |
| Country | United States |
| Monthly Visits | 530822 |

Estimated traffic data from Similarweb

What are some alternatives?

Llama 2 - Llama 2 is a powerful AI tool that empowers developers while promoting responsible practices. Enhancing safety in chat use cases and fostering collaboration in academic research, it shapes the future of AI responsibly.

Code Llama - Discover Code Llama, a cutting-edge AI tool for code generation and understanding. Boost productivity, streamline workflows, and empower developers.

llamafile - llamafile is a Mozilla project that lets users distribute and run LLMs as a single, platform-independent file.

vLLM - A high-throughput, memory-efficient inference and serving engine for LLMs.