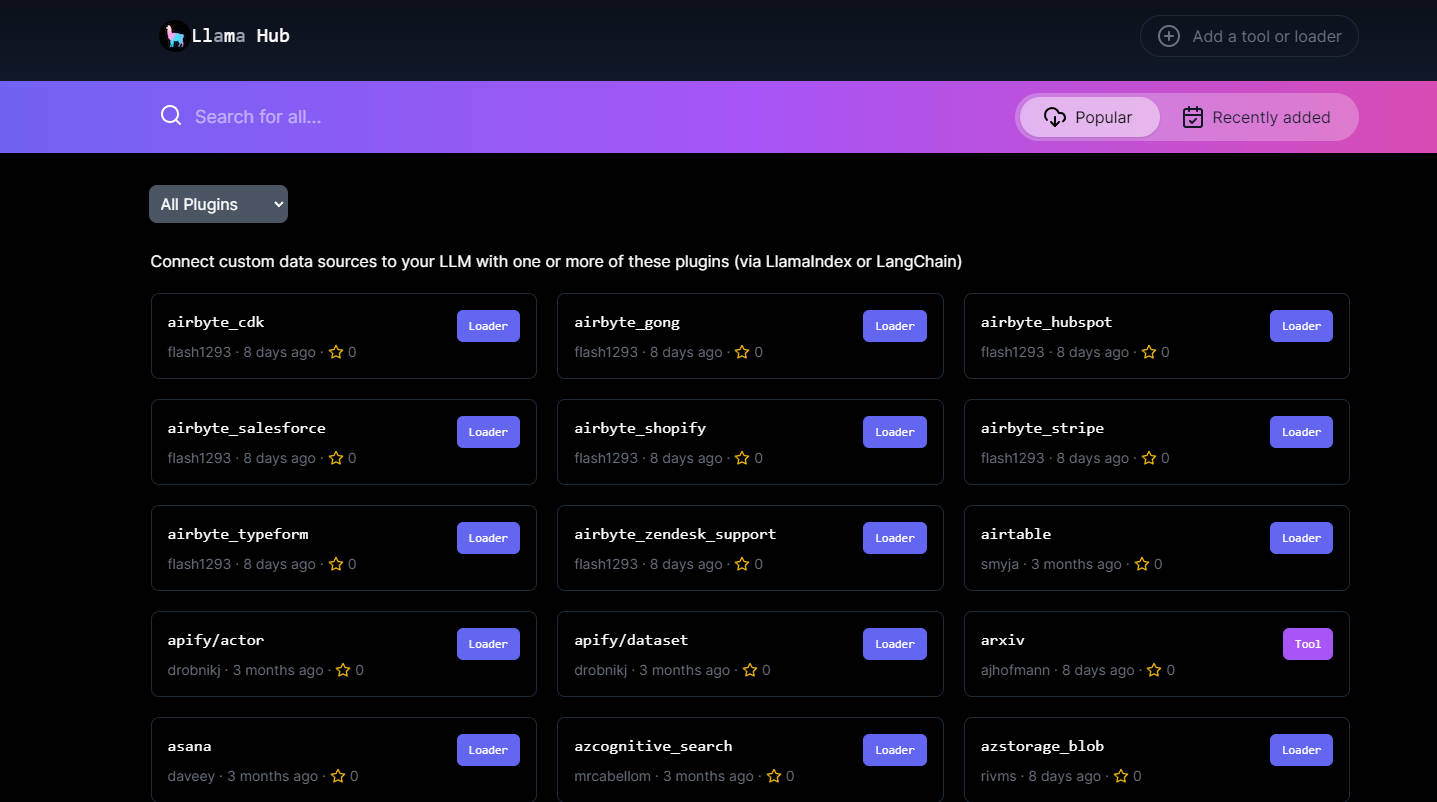

What is LlamaHub?

LlamaHub is a community-built library of data loaders, readers, and tools that connect large language models (LLMs) to external knowledge sources. It lets you build custom data agents that work with your own data rather than relying only on what the model already knows.

Key Features:

1. General-purpose utilities for ingestion of data for search and retrieval by large language models.

2. Tools for models to read and write to third-party data services and sources.

3. Examples of data agents for loading and parsing data from Google Docs, SQL databases, Notion, and Slack, and for managing Google Calendar, a Gmail inbox, and OpenAPI specs.

4. Compatibility with LangChain for loading documents and question answering.

In short, LlamaHub's general-purpose utilities and tools make it straightforward to ingest data from many sources and to build customized data agents on top of large language models.
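To make the loader idea concrete, here is a minimal, standard-library-only sketch of the pattern described above: a loader turns a source into documents (text plus metadata), which can then be searched. It does not use LlamaHub itself. The `Document`, `TextFileLoader`, `retrieve`, and `demo` names are hypothetical and chosen only for illustration, and the keyword match stands in for whatever index or vector store a real setup would use.

```python
from dataclasses import dataclass, field
from pathlib import Path
import tempfile


@dataclass
class Document:
    """Hypothetical container: raw text plus metadata about its origin."""
    text: str
    metadata: dict = field(default_factory=dict)


class TextFileLoader:
    """Hypothetical loader: turns every .txt file in a directory into a Document."""

    def __init__(self, directory: str) -> None:
        self.directory = Path(directory)

    def load_data(self) -> list[Document]:
        docs = []
        for path in sorted(self.directory.glob("*.txt")):
            docs.append(
                Document(
                    text=path.read_text(encoding="utf-8"),
                    metadata={"source": path.name},
                )
            )
        return docs


def retrieve(docs: list[Document], query: str) -> list[Document]:
    """Naive keyword retrieval standing in for a real index or vector store."""
    terms = query.lower().split()
    return [d for d in docs if any(t in d.text.lower() for t in terms)]


def demo() -> list[str]:
    """Write two sample files, load them, and return the sources matching a query."""
    with tempfile.TemporaryDirectory() as tmp:
        Path(tmp, "notes.txt").write_text(
            "Meeting notes about the Slack export.", encoding="utf-8"
        )
        Path(tmp, "todo.txt").write_text("Buy groceries.", encoding="utf-8")
        docs = TextFileLoader(tmp).load_data()
        return [d.metadata["source"] for d in retrieve(docs, "slack")]


if __name__ == "__main__":
    print(demo())
```

In a real setup, the retrieved documents would be passed to a language model as context. The loaders published on LlamaHub play the role of `TextFileLoader` here, but for sources such as Google Docs, Notion, or Slack.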

LlamaHub Alternatives

Discover Code Llama, a cutting-edge AI tool for code generation and understanding. Boost productivity, streamline workflows, and empower developers.

LlamaIndex builds intelligent AI agents over your enterprise data. Power LLMs with advanced RAG, turning complex documents into reliable, actionable insights.
