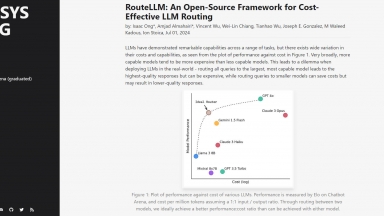

RouteLLM

LLMGateway

RouteLLM

| Field | Value |
| --- | --- |
| Launched | |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | Infrastructure, Business Intelligence, Workflow Automation |

LLMGateway

| Field | Value |
| --- | --- |
| Launched | 2025-05 |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | |

RouteLLM Rank/Visit

| Field | Value |
| --- | --- |
| Global Rank | |
| Country | |
| Month Visit | |

LLMGateway Rank/Visit

| Field | Value |
| --- | --- |
| Global Rank | 1424016 |
| Country | United States |
| Month Visit | 17403 |

Estimated traffic data from Similarweb

What are some alternatives?

vLLM Semantic Router - Semantic routing is the process of dynamically selecting the most suitable language model for a given input query based on the semantic content, complexity, and intent of the request. Rather than using a single model for all tasks, semantic routers analyze the input and direct it to specialized models optimized for specific domains or complexity levels.

FastRouter.ai - FastRouter.ai optimizes production AI with smart LLM routing. Unify 100+ models, cut costs, ensure reliability & scale effortlessly with one API.

ModelPilot - ModelPilot unifies 30+ LLMs via one API. Intelligently optimize cost, speed, quality & carbon for every request. Eliminate vendor lock-in & save.

Requesty - Stop managing multiple LLM APIs. Requesty unifies access, optimizes costs, and ensures reliability for your AI applications.
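
To make the semantic routing idea in the vLLM Semantic Router entry more concrete, here is a minimal sketch of how a router might pick a model from the content of a query. It is not the implementation of any product listed here: the route table, the model names (`code-model`, `math-model`, `general-model`), and the helper functions are all hypothetical, and the bag-of-words similarity stands in for the learned embeddings or classifiers a real router would likely use.

```python
import math
import re
from collections import Counter

# Hypothetical route table: model names and keyword profiles are illustrative only.
ROUTES = {
    "code-model": "python function bug compile error stack trace code",
    "math-model": "solve equation integral derivative proof probability",
    "general-model": "write summarize explain email story translate",
}


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real router would use a sentence encoder."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def route(query: str, default: str = "general-model") -> str:
    """Return the route whose profile is most similar to the query.

    Falls back to `default` when the query matches no profile at all.
    """
    q = embed(query)
    scores = {name: cosine(q, embed(profile)) for name, profile in ROUTES.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else default
```

Calling `route("Fix this Python bug in my function")` selects the code-oriented route, while a query with no overlap with any profile falls back to the default. The fallback is a design choice in this sketch: sending unmatched traffic to a general-purpose model is one plausible policy, not something the source prescribes.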