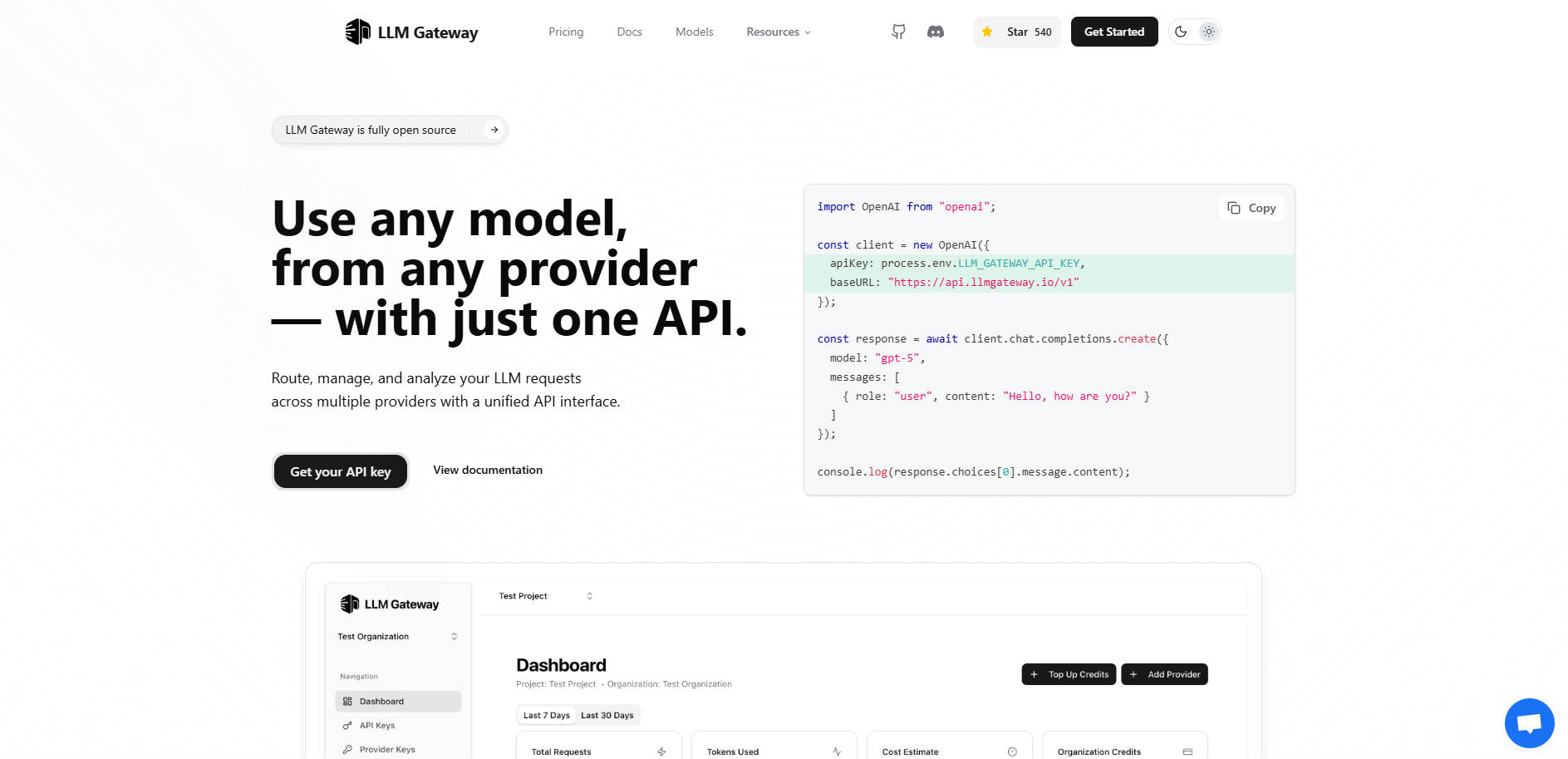

What is LLM Gateway?

LLM Gateway is a robust, open-source API gateway designed to act as intelligent middleware between your application and the diverse ecosystem of Large Language Model (LLM) providers. If you are building applications that rely on multiple AI models (such as OpenAI, Anthropic, or Google AI Studio), integrating them directly creates complexity, vendor lock-in risk, and fragmented cost tracking.

LLM Gateway centralizes your entire LLM infrastructure, allowing you to route requests, manage credentials, track usage, and analyze performance across all providers through a single, unified interface. You gain control over costs and performance without provider-specific code changes.

Key Features

🌐 Unified API Interface for Seamless Migration

LLM Gateway maintains compatibility with the widely adopted OpenAI API format. This means you can connect to any supported provider, regardless of its native SDK, using a single, standardized request structure. You can switch models or providers by changing the model you request, without rewriting your core application logic or migrating provider-specific code.
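As a minimal sketch of what a single, standardized request structure looks like, the snippet below builds (but does not send) an OpenAI-format chat completion request. The base URL, API key, and the second model name are placeholders assumed for illustration, not values taken from LLM Gateway's documentation.

```python
import json
import urllib.request

# Hypothetical values: substitute your gateway's real base URL and API key.
GATEWAY_BASE_URL = "https://gateway.example.com/v1"
API_KEY = "YOUR_GATEWAY_API_KEY"


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build, without sending, an OpenAI-format chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{GATEWAY_BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


# Only the model string differs between the two requests.
req_a = build_chat_request("gpt-4o", "Summarize this ticket.")
req_b = build_chat_request("example-other-model", "Summarize this ticket.")
```

Passing either request to `urllib.request.urlopen` would send it. The point of the sketch is that the URL, headers, and body shape are identical for both models.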

🚦 Intelligent Multi-Provider Routing

Route requests dynamically to the optimal LLM provider based on criteria like model availability, cost, or performance requirements. The Gateway manages the underlying connections to providers like OpenAI, Anthropic, and Google Vertex AI, ensuring your application remains provider-agnostic and resilient to single-vendor outages.
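To make the routing criteria concrete, here is a small illustrative sketch that picks a provider by availability and then by cost or latency. It is not LLM Gateway's actual routing algorithm; the `Provider` type, the `choose_provider` function, and all figures are assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Provider:
    name: str
    available: bool
    cost_per_1k_tokens: float  # USD, made-up figures
    avg_latency_ms: float      # made-up figures


def choose_provider(providers, strategy="cost"):
    """Return the available provider that minimizes the chosen metric."""
    candidates = [p for p in providers if p.available]
    if not candidates:
        raise RuntimeError("no provider is currently available")
    if strategy == "cost":
        return min(candidates, key=lambda p: p.cost_per_1k_tokens)
    if strategy == "latency":
        return min(candidates, key=lambda p: p.avg_latency_ms)
    raise ValueError(f"unknown strategy: {strategy}")


providers = [
    Provider("provider-a", available=True, cost_per_1k_tokens=0.010, avg_latency_ms=400),
    Provider("provider-b", available=True, cost_per_1k_tokens=0.004, avg_latency_ms=900),
    Provider("provider-c", available=False, cost_per_1k_tokens=0.001, avg_latency_ms=200),
]

cheapest = choose_provider(providers, "cost")
fastest = choose_provider(providers, "latency")
```

Here `provider-c` is skipped because it is marked unavailable, even though it would win on both metrics.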

💰 Centralized Usage Analytics and Cost Tracking

Gain deep visibility into your LLM consumption. LLM Gateway automatically tracks key usage metrics, including request counts, tokens consumed, and actual costs, across all models and providers. A centralized dashboard lets you monitor spending in real time and make budgeting decisions based on measured data.
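The kind of aggregation described above can be sketched in a few lines. The record fields and values below are hypothetical and do not reflect LLM Gateway's actual data schema.

```python
from collections import defaultdict

# Hypothetical usage records; field names and numbers are illustrative only.
records = [
    {"provider": "openai", "model": "gpt-4o", "tokens": 1200, "cost_usd": 0.5},
    {"provider": "openai", "model": "gpt-4o", "tokens": 800, "cost_usd": 0.25},
    {"provider": "anthropic", "model": "example-model", "tokens": 1000, "cost_usd": 0.75},
]


def summarize_usage(records):
    """Total requests, tokens, and cost per (provider, model) pair."""
    totals = defaultdict(lambda: {"requests": 0, "tokens": 0, "cost_usd": 0.0})
    for record in records:
        entry = totals[(record["provider"], record["model"])]
        entry["requests"] += 1
        entry["tokens"] += record["tokens"]
        entry["cost_usd"] += record["cost_usd"]
    return dict(totals)


summary = summarize_usage(records)
```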

⏱️ Performance Monitoring and Comparison

Identify the most efficient models for specific tasks by analyzing response times and cost-effectiveness. The Gateway provides comparative performance tracking, helping you benchmark different models against your actual usage data so you can optimize prompt strategies and select models that deliver the best balance of speed and value.

⚙️ Flexible Deployment Options

Choose the deployment method that fits your security and control needs. You can start immediately with the Hosted Version for zero setup, or deploy the Self-Hosted (open-source core) version on your own infrastructure. Self-hosting ensures maximum data privacy and complete control over configuration and traffic flow.

Use Cases

1. A/B Testing and Model Benchmarking

Instead of manually configuring and running separate tests for different models, you can route identical prompts through LLM Gateway, specifying different underlying models (e.g., gpt-4o vs. a high-performance Anthropic model). The built-in performance tracking compares latency, token usage, and cost, so you can choose a production model based on real-world metrics.
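A comparison like this can also be approximated client-side. The sketch below sends the same prompt to each model through a caller-supplied function and records latency, tokens, and cost. `fake_call_model` is a stand-in with made-up numbers so the example runs offline; in practice it would be replaced by a real request to the gateway.

```python
import time


def fake_call_model(model, prompt):
    """Stand-in for a real gateway call; returns made-up token and cost data."""
    canned = {
        "gpt-4o": {"tokens": 120, "cost_usd": 0.002},
        "example-anthropic-model": {"tokens": 100, "cost_usd": 0.003},
    }
    return canned[model]


def benchmark(call_model, models, prompt):
    """Send the same prompt to each model and collect comparable metrics."""
    results = {}
    for model in models:
        start = time.perf_counter()
        response = call_model(model, prompt)
        elapsed_ms = (time.perf_counter() - start) * 1000
        results[model] = {
            "latency_ms": elapsed_ms,
            "tokens": response["tokens"],
            "cost_usd": response["cost_usd"],
        }
    return results


results = benchmark(
    fake_call_model,
    ["gpt-4o", "example-anthropic-model"],
    "Classify this review.",
)
lowest_cost_model = min(results, key=lambda m: results[m]["cost_usd"])
```

A single prompt is a weak basis for a decision, so a real benchmark would repeat this over a representative prompt set and compare averages.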

2. Simplifying Vendor Migration and Failover

When a new, more cost-effective model is released by a different vendor, or if you need a failover strategy, LLM Gateway eliminates the need for extensive refactoring. Because your application only communicates with the Gateway's unified API, you simply update the routing configuration or model parameter within the Gateway layer, so migration or failover requires no changes to your application code.
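The failover idea can be illustrated with a short sketch that tries models in priority order and falls back when one fails. This shows the general pattern only, not how LLM Gateway implements failover internally. `ProviderError`, `send_with_failover`, `fake_send`, and the model names are all hypothetical.

```python
class ProviderError(Exception):
    """Raised when a (simulated) provider call fails."""


def send_with_failover(send, models, prompt):
    """Try each model in order; return (model, reply) from the first success."""
    errors = []
    for model in models:
        try:
            return model, send(model, prompt)
        except ProviderError as exc:
            errors.append(f"{model}: {exc}")
    raise ProviderError("all models failed: " + "; ".join(errors))


def fake_send(model, prompt):
    """Stand-in for a real request; the primary model simulates an outage."""
    if model == "primary-model":
        raise ProviderError("simulated outage")
    return f"[{model}] reply to: {prompt}"


used_model, reply = send_with_failover(
    fake_send, ["primary-model", "backup-model"], "Hello"
)
```

Because the request shape is the same for every model, the fallback list is the only thing that changes when a vendor is added or removed.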

3. Real-Time Budget Management

For development teams managing multiple projects, LLM Gateway provides a consolidated view of spending. You can monitor which applications or specific API keys are driving the highest token usage, allowing product owners to quickly identify inefficiencies, implement usage caps, or adjust model choices to stay within defined budget constraints.
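As a rough illustration of per-key budget checks, the sketch below accumulates spend per API key and reports which keys have exceeded their cap. The `BudgetTracker` class, the key names, and the dollar amounts are invented for this example and are not part of LLM Gateway's API.

```python
from collections import defaultdict


class BudgetTracker:
    """Accumulate spend per API key and flag keys that exceed their cap."""

    def __init__(self, caps_usd):
        self.caps_usd = dict(caps_usd)
        self.spent_usd = defaultdict(float)

    def record(self, api_key, cost_usd):
        self.spent_usd[api_key] += cost_usd

    def over_budget(self):
        """Return the sorted list of keys whose spend exceeds their cap."""
        return sorted(
            key for key, cap in self.caps_usd.items()
            if self.spent_usd[key] > cap
        )


tracker = BudgetTracker({"key-app-a": 10.0, "key-app-b": 5.0})
tracker.record("key-app-a", 4.0)
tracker.record("key-app-b", 3.5)
tracker.record("key-app-b", 2.0)

flagged = tracker.over_budget()
```

In this run only `key-app-b` is flagged, since its total of 5.5 exceeds its cap of 5.0.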

Why Choose LLM Gateway?

LLM Gateway offers a unique combination of flexibility, control, and functional depth that differentiates it from connecting directly to individual provider APIs.

Open-Source Core with Enterprise Options: The core routing and monitoring functionality is open-source (AGPLv3), giving technical teams full transparency and control over their LLM traffic flow. For larger organizations, the Enterprise license provides critical features like advanced billing, team management, and priority support.

True Vendor Agnosticism: By using the OpenAI API standard for all providers, LLM Gateway keeps your application architecture independent of any single vendor. Your codebase remains stable even as you experiment with, or shift entirely to, new LLM providers.

Data Control: The self-hosted deployment option ensures that your LLM request data and API traffic remain within your existing infrastructure, satisfying stringent compliance and security requirements.

Conclusion

LLM Gateway provides the essential control plane necessary for building sophisticated, multi-model AI applications efficiently and cost-effectively. It transforms a fragmented set of API integrations into a single, manageable, and highly optimized service layer. Take control of your LLM strategy, simplify your architecture, and start optimizing your performance today.

Explore how LLM Gateway can centralize your AI infrastructure.