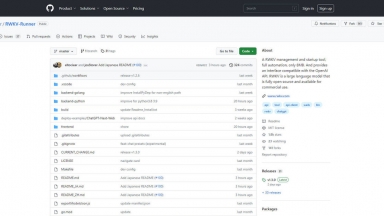

RWKV-Runner

Runner H

RWKV-Runner

| Attribute | Value |
| --- | --- |
| Launched | 2023 |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | Software Development |

Runner H

| Attribute | Value |
| --- | --- |
| Launched | 2024-04 |
| Pricing Model | Free Trial |
| Starting Price | |
| Tech used | Nuxt.js, Vercel, Gzip, OpenGraph, HSTS |
| Tag | Web Scraper |

RWKV-Runner Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | 0 |
| Country | |
| Monthly Visits | 0 |

Runner H Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | 158927 |
| Country | United States |
| Monthly Visits | 241522 |

Estimated traffic data from Similarweb

What are some alternatives?

RWKV-LM - RWKV is an RNN with transformer-level LLM performance. It can be trained directly like a GPT (parallelizable), so it combines the best of RNNs and transformers: great performance, fast inference, low VRAM use, fast training, "infinite" ctx_len, and free sentence embedding.

ChatRWKV - ChatRWKV is like ChatGPT but powered by the RWKV (100% RNN) language model, and it is open source.

ktransformers - KTransformers, an open-source project by Tsinghua's KVCache.AI team and QuJing Tech, optimizes large language model inference. It lowers hardware requirements, runs 671B-parameter models on a single GPU with 24GB of VRAM, boosts inference speed (up to 286 tokens/s pre-processing, 14 tokens/s generation), and is suitable for personal, enterprise, and academic use.

Command-R - Command-R is a scalable generative model targeting RAG and Tool Use to enable production-scale AI for enterprise.