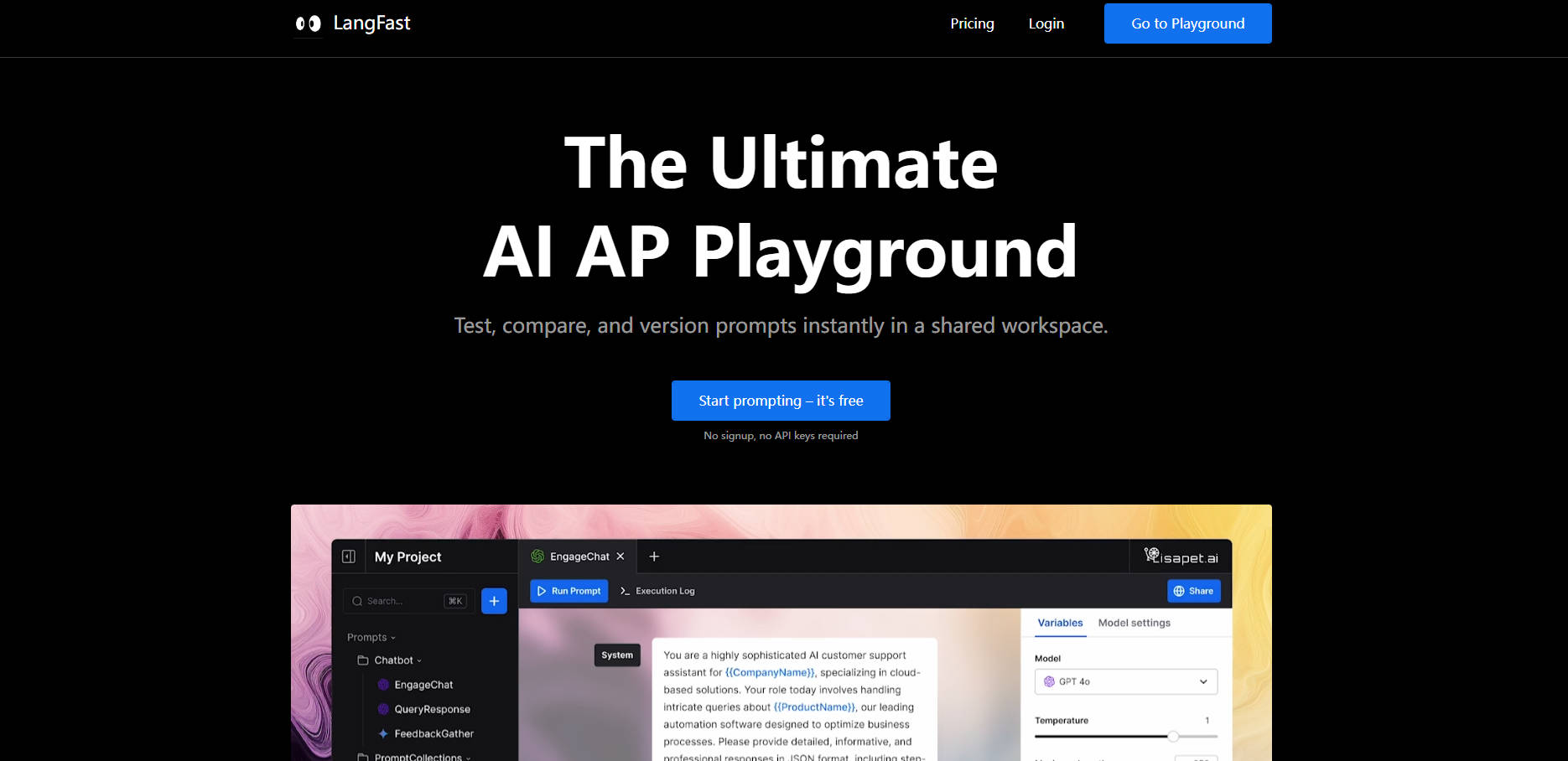

What is LangFast?

LangFast is a streamlined LLM playground designed for developers, prompt engineers, and product teams. It removes the friction of traditional prompt engineering by allowing you to instantly test, compare, and version prompts across dozens of models in a shared workspace—no sign-ups or API keys required to get started.

Key Features

LangFast provides a comprehensive suite of tools to move from rapid experimentation to production-ready prompts with confidence.

🚀 Instantly Compare and Iterate on Prompts: Use the side-by-side editor to test multiple prompt variations at once against different models. This allows you to quickly identify the most effective combination of wording, parameters, and AI provider for your specific task.

📊 Ensure Reliable, Structured Outputs: Define a required JSON Schema for your prompt's output. LangFast ensures the LLM's response consistently matches your expected data structure, eliminating parsing errors and making it safe to integrate directly into your applications.

🔬 Evaluate Prompts with Test-Driven Engineering: Move beyond single-prompt testing by building comprehensive test cases and datasets. You can create test suites with AI-powered evaluations and pre-defined success criteria to objectively measure and compare the performance of multiple prompts across different scenarios.

🔑 Access 50+ LLMs with Zero Setup: Get immediate access to a wide range of models from providers like OpenAI and Anthropic without needing to manage your own API keys. The platform handles the configuration, so you can focus entirely on building and testing.

🖼️ Harness Advanced Vision and Function Calling: Go beyond text with support for image inputs to leverage powerful Vision APIs. You can also enrich your prompts by defining functions, enabling the LLM to interact with your internal systems and tools for more dynamic and capable results.
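
To make the structured-output feature concrete, here is a minimal Python sketch of what checking a model response against a JSON Schema involves. It is not LangFast's API: the schema, the `validate` and `check_response` helpers, and the sample responses are all hypothetical, and the helper covers only a small subset of JSON Schema (`type`, `required`, `properties`, `items`). A real project would likely rely on a full validator library instead.

```python
import json

# Hypothetical schema for a product summary (illustrative, not from LangFast).
SUMMARY_SCHEMA = {
    "type": "object",
    "required": ["title", "summary", "tags"],
    "properties": {
        "title": {"type": "string"},
        "summary": {"type": "string"},
        "tags": {"type": "array", "items": {"type": "string"}},
    },
}

# Maps JSON Schema type names to Python types (subset only).
_TYPES = {
    "object": dict,
    "array": list,
    "string": str,
    "number": (int, float),
    "boolean": bool,
}


def validate(value, schema, path="$"):
    """Return a list of error strings; an empty list means the value conforms."""
    errors = []
    expected = schema.get("type")
    if expected is not None:
        py_type = _TYPES[expected]
        # bool is a subclass of int in Python, so reject it for "number".
        is_bool_as_number = expected == "number" and isinstance(value, bool)
        if not isinstance(value, py_type) or is_bool_as_number:
            return [f"{path}: expected {expected}"]
    if isinstance(value, dict):
        for key in schema.get("required", []):
            if key not in value:
                errors.append(f"{path}.{key}: missing required property")
        for key, sub in schema.get("properties", {}).items():
            if key in value:
                errors.extend(validate(value[key], sub, f"{path}.{key}"))
    elif isinstance(value, list) and "items" in schema:
        for i, item in enumerate(value):
            errors.extend(validate(item, schema["items"], f"{path}[{i}]"))
    return errors


def check_response(raw_text, schema):
    """Parse raw model output as JSON and validate it against the schema."""
    try:
        data = json.loads(raw_text)
    except json.JSONDecodeError as exc:
        return [f"$: invalid JSON ({exc.msg})"]
    return validate(data, schema)


# Hypothetical model outputs: one conforming, one not.
good = '{"title": "Desk lamp", "summary": "A compact LED lamp.", "tags": ["lighting"]}'
bad = '{"title": "Desk lamp", "tags": ["lighting", 3]}'

print(check_response(good, SUMMARY_SCHEMA))  # []
print(check_response(bad, SUMMARY_SCHEMA))
# ['$.summary: missing required property', '$.tags[1]: expected string']
```

Returning a list of errors rather than raising on the first one makes it easier to show every mismatch for a given prompt variation at once.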

Use Cases

Developing a New AI Feature: An engineer building a product summarizer needs the output in a clean JSON format. Using LangFast, they can test three prompt variations side-by-side on both GPT-4o and Claude 3 Sonnet, enforce a strict JSON Schema for the output, and choose the top-performing combination in minutes.

Refining a Customer Support Bot: A product manager creates a dataset of 100 common user questions. The prompt engineer runs a test comparing two prompt versions against this dataset, using AI-powered assertions to check for tone and accuracy. They then share the performance report with the team for feedback directly within the platform.
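
The dataset comparison in the support-bot scenario can be sketched as a small test harness. Everything here is hypothetical: `fake_model` stands in for a real LLM call, the two prompt templates and three test cases are invented for illustration, and the keyword check is a simple rule-based stand-in for the AI-powered assertions described above. It does not reflect how LangFast works internally.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    question: str
    must_mention: str  # pre-defined success criterion: reply must contain this term


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM call; deterministic so the sketch runs offline."""
    if "step by step" in prompt and "password" in prompt:
        return "Open Settings, then choose Reset password."
    if "refund" in prompt:
        return "You can request a refund within 30 days."
    return "Please contact support."


def pass_rate(template: str, cases: list[EvalCase], model: Callable[[str], str]) -> float:
    """Fraction of cases whose reply satisfies the success criterion."""
    passed = 0
    for case in cases:
        reply = model(template.format(question=case.question))
        if case.must_mention.lower() in reply.lower():
            passed += 1
    return passed / len(cases)


# Hypothetical dataset of user questions with expected content.
CASES = [
    EvalCase("How do I reset my password?", "reset password"),
    EvalCase("Can I get a refund?", "refund"),
    EvalCase("Where is my invoice?", "invoice"),
]

# Two hypothetical prompt versions to compare.
PROMPTS = {
    "v1": "Answer the customer: {question}",
    "v2": "Answer the customer step by step: {question}",
}

scores = {name: pass_rate(tpl, CASES, fake_model) for name, tpl in PROMPTS.items()}
best = max(scores, key=scores.get)

for name, score in scores.items():
    print(f"{name}: {score:.0%} of cases passed")
print(f"best prompt version: {best}")
```

With this stub model, `v1` passes one of the three cases and `v2` passes two, so `v2` is selected. Swapping `fake_model` for a real client call and the keyword check for a richer grader keeps the same structure.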

Why Choose LangFast?

LangFast was built to directly address the common frustrations of working with LLMs in a professional environment. It focuses on speed, accessibility, and a transparent development process.

Immediate, Frictionless Access: Most tools require you to sign up, find your API keys, and configure your environment before you can even write a single prompt. With LangFast, you just open a URL and start working instantly. This removes significant barriers to experimentation and collaboration.

Designed for Speed and Simplicity: The interface is intentionally lightweight and focused. It’s a "no-bullshit" playground designed for efficient testing and iteration, not for upselling you on complex features you don't need.

Transparent and Fair Pricing: Instead of locking you into an expensive monthly subscription, LangFast offers a one-time payment for its Pro plan. This provides a predictable, affordable cost structure, especially for teams whose usage may vary from day to day.

Conclusion

LangFast is the ideal LLM playground for professionals and teams who value efficiency, collaboration, and rigorous testing. By removing the unnecessary setup and providing powerful tools for comparison and evaluation, it empowers you to build better AI-powered features, faster.

Explore the LangFast playground to see how quickly you can refine your next prompt.

LangFast Alternatives

Streamline LLM prompt engineering. PromptLayer offers management, evaluation, & observability in one platform. Build better AI, faster.

We're in Public Preview now! Teammate Lang is an all-in-one solution for LLM app developers and operations teams: a no-code editor, semantic cache, prompt version management, an LLM data platform, A/B testing, QA, and a playground with 20+ models including GPT, PaLM, Llama, and Cohere.

SysPrompt is a comprehensive platform designed to simplify the management, testing, and optimization of prompts for Large Language Models (LLMs). It's a collaborative environment where teams can work together in real time, track prompt versions, run evaluations, and test across different LLM models—all in one place.

Stop scattering LLM prompts! PromptShuttle helps you manage, test, and monitor prompts outside your code. Unify models & collaborate seamlessly.