What is PromptShuttle?

Tired of wrestling with LLM prompts scattered throughout your codebase? Feel like experimenting with different models and iterations is a slow, painful process? You're not alone! Managing prompts as your application grows can quickly become messy, making collaboration tough and visibility non-existent.

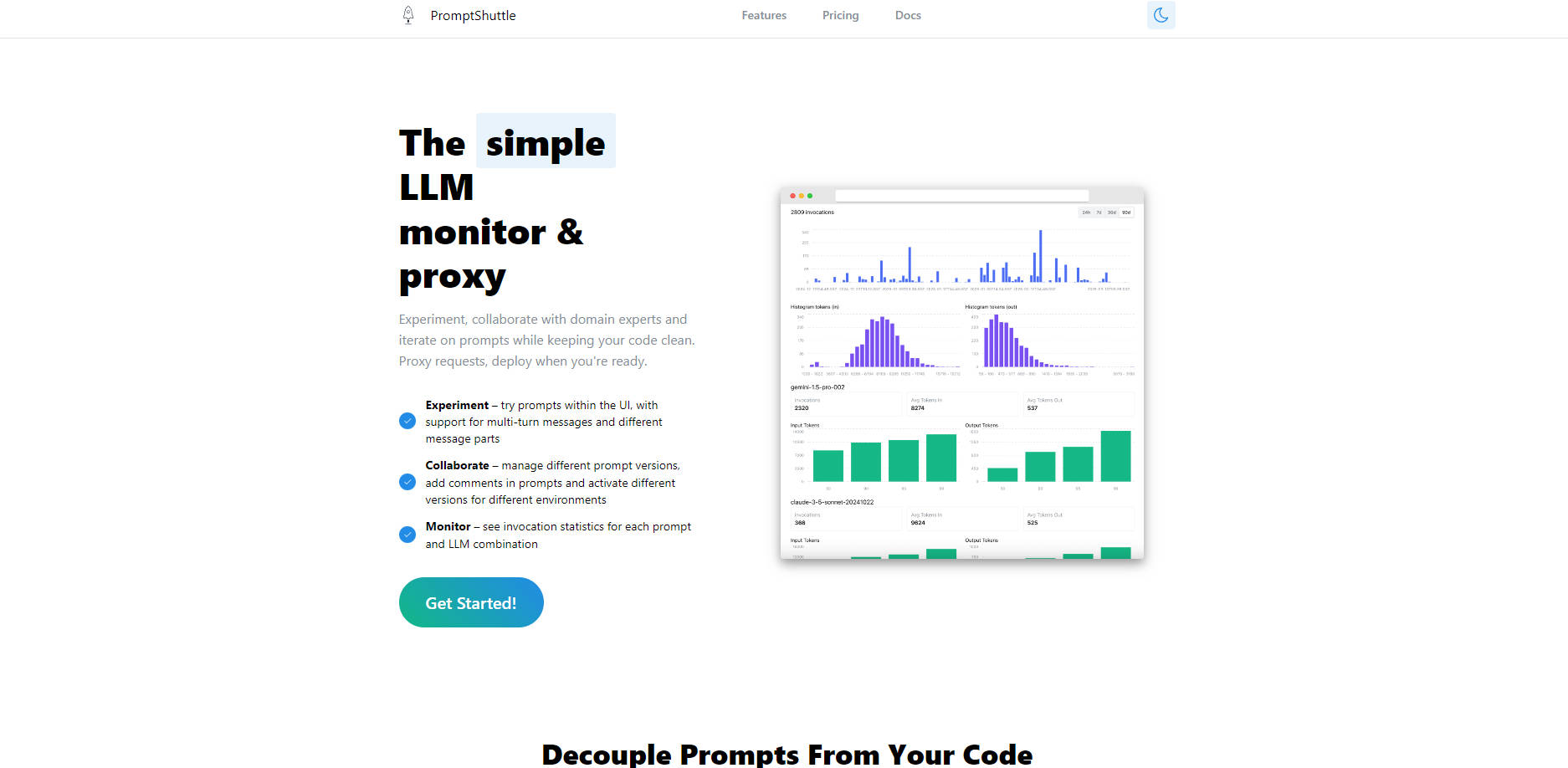

That's where PromptShuttle comes in! It’s your simple, powerful LLM monitor and proxy designed to completely decouple your prompts from your code. This means you can experiment freely, collaborate seamlessly with your team and domain experts, and deploy prompt updates without ever touching your core application code. Get crystal-clear visibility into your LLM usage and confidently iterate towards world-class prompts!

✨ Experiment Effortlessly: Try prompts against a huge variety of LLMs – separately or all at once – and instantly compare results side-by-side. Find the perfect model and prompt combination faster than ever!

🤝 Collaborate Like a Pro: Manage different prompt versions, add inline comments (// or /* */ style!) for crystal-clear context, and track every change with a simple UI. It's built for teams to work together seamlessly!

📈 Monitor with Confidence: See detailed invocation statistics for every prompt and LLM combination right in one place. Understand performance, track usage, and make data-driven decisions to optimize your LLM workflows!

🔗 Decouple Prompts from Code: Move your prompt logic out of your application code entirely. Update prompts, switch models, or deploy new versions per environment without writing or deploying new code!

🚦 Simplify LLM Integration: Use PromptShuttle as a smart proxy! Access dozens of models (like GPT-4o, Claude 3.7 Sonnet, Llama 3, Gemini 1.5, and many more!) through a single, unified API. Get consolidated logs, centralized billing, and even set up automated fallbacks!

✏️ Build Dynamic Templates: Easily template your prompts using [[tokens]] and replace values with a simple JSON object in your API calls. Create reusable, flexible prompts that adapt to your needs!

👻 Test Without Cost: Use the built-in "Fake LLM" responder to test your prompt templates thoroughly during development without incurring any LLM API costs. Iterate rapidly and save money!
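To make the templating and inline-comment features above more concrete, here is a minimal Python sketch of how `[[token]]` substitution from a JSON object could work. It is not PromptShuttle's implementation or API: the names `strip_comments` and `render_prompt`, the regular expressions, and the decision to raise an error on a missing token are all assumptions made for illustration.

```python
import json
import re

# Hypothetical patterns, not taken from PromptShuttle itself.
TOKEN_PATTERN = re.compile(r"\[\[(\w+)\]\]")
# Matches /* ... */ blocks and // comments that run to the end of the line.
COMMENT_PATTERN = re.compile(r"/\*.*?\*/|//[^\n]*", re.DOTALL)


def strip_comments(template: str) -> str:
    """Remove // and /* */ comments (naive: it would also cut '//' inside a URL)."""
    return COMMENT_PATTERN.sub("", template)


def render_prompt(template: str, values_json: str) -> str:
    """Drop comments, then replace each [[token]] with its value from a JSON object."""
    values = json.loads(values_json)

    def replace(match: re.Match) -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value supplied for token [[{name}]]")
        return str(values[name])

    return TOKEN_PATTERN.sub(replace, strip_comments(template)).strip()


template = """/* Tone agreed with the support team */
Summarize the following [[doc_type]] in [[max_words]] words. // keep it short
[[text]]"""

payload = json.dumps({"doc_type": "email", "max_words": 50, "text": "Hello team, ..."})
print(render_prompt(template, payload))
```

Failing loudly on a missing token is a design choice in this sketch: it surfaces a mismatch between the template and the calling code during testing rather than sending a half-filled prompt to a model.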

See PromptShuttle in Action:

Rapid Prompt Iteration: As a developer, you're building a new feature that relies on a specific LLM task. Use PromptShuttle's Experiment UI and Fake LLM to quickly draft and test variations of your prompt, compare results across different models like GPT-4o and Claude 3.7, and find the absolute best one before integrating it into your application.

Team Prompt Refinement: Your product requires nuanced responses, and domain experts have crucial input on prompt wording. Use PromptShuttle's collaboration features – versioning and comments – to allow domain experts and product managers to suggest and track changes to prompts managed outside the codebase, ensuring everyone contributes to the best possible output.

Robust Production Workflows: You've deployed an application relying on LLMs. Use PromptShuttle as the proxy to handle all LLM requests. Monitor usage statistics for performance insights, manage prompt versions for different environments (staging vs. production), and leverage automated fallbacks to increase the reliability of your LLM integrations, just like TenderStrike does for their AI analysis!
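The automated fallbacks and the "Fake LLM" responder mentioned above can be pictured with a small Python sketch. Everything in it is hypothetical: models are plain functions, and `fake_llm`, `flaky_model`, and `complete_with_fallback` are illustrative names, not part of PromptShuttle's API.

```python
from typing import Callable, Sequence

# In this sketch a "model" is just a function from prompt text to response text.
Model = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Stand-in responder: echoes the prompt, so no API call or cost is incurred."""
    return f"[fake response] {prompt}"


def flaky_model(prompt: str) -> str:
    """Simulates a provider outage."""
    raise TimeoutError("primary model timed out")


def complete_with_fallback(
    prompt: str, models: Sequence[tuple[str, Model]]
) -> tuple[str, str]:
    """Try each (name, model) in order; return (name, response) from the first success."""
    errors = []
    for name, model in models:
        try:
            return name, model(prompt)
        except Exception as exc:  # a real proxy would catch narrower error types
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


used, response = complete_with_fallback(
    "Summarize this document in one sentence.",
    [("primary", flaky_model), ("fallback", fake_llm)],
)
print(used, "->", response)
```

Returning the name of the model that actually answered alongside the response keeps fallbacks visible in logs, which matters when comparing usage statistics per prompt and model.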

PromptShuttle makes building, managing, and monitoring your LLM prompts straightforward and efficient. It’s the smart way to keep your code clean, boost team collaboration, and gain essential visibility into your LLM activity.

Ready to simplify your LLM workflow?

Sign Up Free Today & Get Instant Access to All Models!

PromptShuttle Alternatives

SysPrompt is a comprehensive platform designed to simplify the management, testing, and optimization of prompts for Large Language Models (LLMs). It's a collaborative environment where teams can work together in real time, track prompt versions, run evaluations, and test across different LLM models—all in one place.

Streamline LLM prompt engineering. PromptLayer offers management, evaluation, & observability in one platform. Build better AI, faster.

PromptTools is an open-source platform that helps developers build, monitor, and improve LLM applications through experimentation, evaluation, and feedback.
