What is NanoGPT?

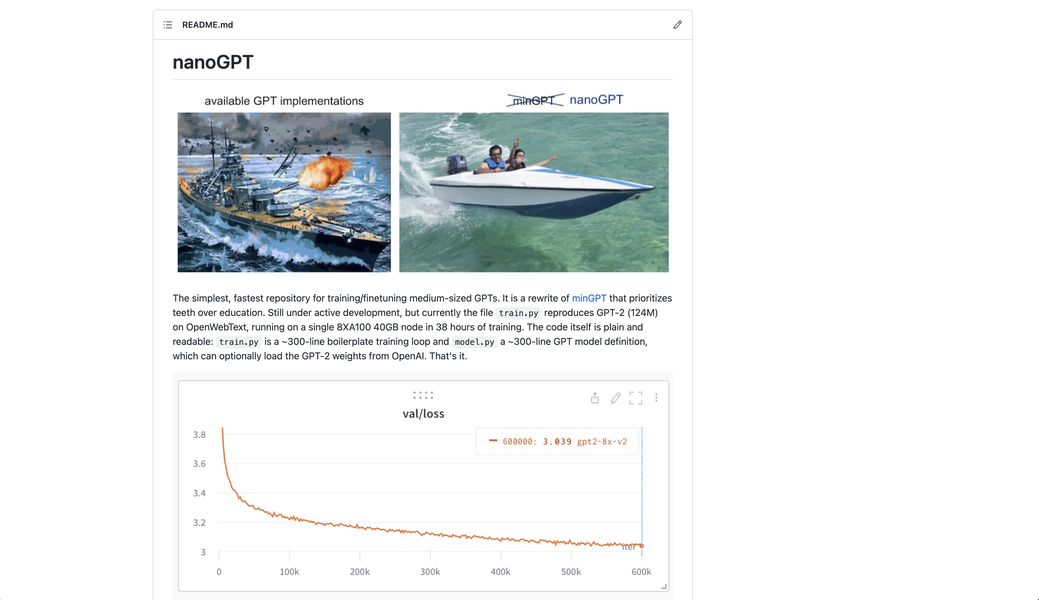

nanoGPT is a repository for training and fine-tuning medium-sized GPTs. It is a rewrite of minGPT that prioritizes efficiency and ease of use over educational detail. The code is short and easy to modify for different purposes, such as training new models from scratch or fine-tuning pretrained checkpoints. The code is used by cloning the repository; its dependencies (PyTorch, numpy, transformers, datasets, tiktoken, wandb, and tqdm) can be installed with pip.
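Before running any training script, it can help to confirm that the dependencies listed above are importable. The following is a minimal sketch that is not part of nanoGPT; the helper name `missing_modules` is hypothetical, and it assumes each package's import name matches its pip name (true for the seven listed, with PyTorch imported as `torch`).

```python
import importlib.util

# Import names for the dependencies listed above (PyTorch is imported as "torch").
REQUIRED = ["torch", "numpy", "transformers", "datasets", "tiktoken", "wandb", "tqdm"]


def missing_modules(names):
    """Return the subset of top-level module names that cannot be found."""
    # find_spec returns None when a top-level module is not installed;
    # it locates the module without importing it, so the check is cheap.
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    if missing:
        print("missing:", ", ".join(missing))
    else:
        print("all dependencies found")
```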

Key Features:

Training and Fine-tuning: nanoGPT lets users train medium-sized GPT models from scratch or fine-tune pretrained checkpoints. The core of the code is a short boilerplate training loop (train.py) and a GPT model definition (model.py) that can optionally load OpenAI's GPT-2 weights, which makes it straightforward to customize and adapt to specific needs.
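One typical component of such a training loop is the learning-rate schedule. The sketch below shows a linear warmup followed by cosine decay to a floor, a schedule commonly used for GPT training; it is an illustration rather than nanoGPT's exact code, and the function name and default values (`max_lr`, `min_lr`, `warmup_iters`, `decay_iters`) are assumptions chosen for the example.

```python
import math


def lr_at(it, max_lr=6e-4, min_lr=6e-5, warmup_iters=2000, decay_iters=600_000):
    """Learning rate for iteration `it`: linear warmup, then cosine decay to min_lr."""
    # 1) Linear warmup from 0 up to max_lr over the first warmup_iters steps.
    if it < warmup_iters:
        return max_lr * it / warmup_iters
    # 2) After the decay window ends, hold the rate at its floor.
    if it >= decay_iters:
        return min_lr
    # 3) In between, follow half a cosine wave from max_lr down to min_lr.
    ratio = (it - warmup_iters) / (decay_iters - warmup_iters)
    coeff = 0.5 * (1.0 + math.cos(math.pi * ratio))  # goes from 1 down to 0
    return min_lr + coeff * (max_lr - min_lr)
```

Inside a PyTorch loop, the value would be computed once per step and written to the `lr` entry of each of the optimizer's `param_groups` before the update.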

Fast and Efficient: nanoGPT is designed for speed: it reproduces GPT-2 (124M) on OpenWebText in about 4 days of training on a single 8XA100 40GB node. The same code also runs on modest hardware, from a single GPU down to a CPU for small experiments, by reducing the model and batch sizes.
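To give a sense of the scale behind the 4-day GPT-2 run, the arithmetic below multiplies out the batch settings. The values are assumptions modeled on the repository's GPT-2 (124M) config file (a micro-batch of 12 sequences, context length 1024, 5 gradient-accumulation steps on each of 8 GPUs, 600,000 iterations); check that file before relying on them.

```python
# Assumed settings, modeled on the GPT-2 (124M) config; verify against the repo.
batch_size = 12          # sequences per micro-batch, per GPU
block_size = 1024        # tokens per sequence (context length)
grad_accum_per_gpu = 5   # micro-batches accumulated before each optimizer step
num_gpus = 8             # one 8XA100 node
max_iters = 600_000      # optimizer steps

tokens_per_iter = batch_size * block_size * grad_accum_per_gpu * num_gpus
total_tokens = tokens_per_iter * max_iters

print(f"tokens per iteration: {tokens_per_iter:,}")
print(f"total tokens: {total_tokens / 1e9:.1f}B")
```

Under these assumptions each optimizer step sees 491,520 tokens, and the full run processes roughly 295 billion tokens, on the order of 850,000 tokens per second across the node if training takes about 4 days.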

Easy to Use: The nanoGPT codebase is small and readable, so it is approachable even for readers who are not deep learning specialists. It provides clear instructions and examples for getting started, beginning with training a character-level GPT on Shakespeare's works. Hyperparameters and model configurations can be changed through config files or command-line overrides, which makes experimentation straightforward.
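The character-level Shakespeare example treats every distinct character as a token. A minimal sketch of that preparation step, assuming the corpus is already loaded into a string; the names `build_vocab`, `encode`, `decode`, and `train_val_split` and the 90/10 split are illustrative choices, not necessarily the repository's exact code:

```python
def build_vocab(text):
    """Map each distinct character to an integer id and back."""
    chars = sorted(set(text))                       # stable, sorted vocabulary
    stoi = {ch: i for i, ch in enumerate(chars)}    # character -> id
    itos = {i: ch for i, ch in enumerate(chars)}    # id -> character
    return stoi, itos


def encode(text, stoi):
    """Turn a string into a list of token ids."""
    return [stoi[ch] for ch in text]


def decode(ids, itos):
    """Turn a list of token ids back into a string."""
    return "".join(itos[i] for i in ids)


def train_val_split(ids, train_frac=0.9):
    """Hold out the tail of the corpus for validation."""
    n = int(len(ids) * train_frac)
    return ids[:n], ids[n:]


if __name__ == "__main__":
    corpus = "First Citizen:\nBefore we proceed any further, hear me speak.\n"
    stoi, itos = build_vocab(corpus)
    ids = encode(corpus, stoi)
    train_ids, val_ids = train_val_split(ids)
    assert decode(ids, itos) == corpus              # lossless round trip
    print(len(stoi), "distinct characters;", len(train_ids), "train /", len(val_ids), "val tokens")
```

A character vocabulary keeps the example tiny and dependency-free; the GPT-2 runs instead use a byte-pair encoding via tiktoken.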

Use Cases:

Natural Language Generation: nanoGPT can be used to generate human-like text, making it a starting point for applications such as chatbots, virtual assistants, and content generation. By training or fine-tuning GPT models, users can create language models that produce coherent, contextually relevant text.
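Generation works by repeatedly sampling the next token from the model's output distribution, and nanoGPT's sampling exposes temperature and top-k controls. The sketch below shows those two ideas in plain Python over a list of raw scores (logits), assuming the model has already produced them; the function name and signature are hypothetical.

```python
import math
import random


def sample_next(logits, temperature=1.0, top_k=None, rng=random):
    """Pick a token id from raw scores using temperature scaling and top-k filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if top_k is not None and top_k < 1:
        raise ValueError("top_k must be at least 1")
    # Temperature < 1 sharpens the distribution, > 1 flattens it.
    scaled = [x / temperature for x in logits]
    # Top-k: keep only the k highest-scoring token ids.
    candidates = list(range(len(scaled)))
    if top_k is not None:
        candidates = sorted(candidates, key=lambda i: scaled[i], reverse=True)[:top_k]
    # Softmax weights over the survivors (subtract the max for numerical stability).
    m = max(scaled[i] for i in candidates)
    weights = [math.exp(scaled[i] - m) for i in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]
```

With `top_k=1` the choice is always the highest-scoring token, which is greedy decoding; passing a seeded `random.Random` as `rng` makes runs reproducible.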

Text Completion and Summarization: Because it generates text, nanoGPT can also be used for text completion and summarization. Given a partial sentence as a prompt, the model produces a continuation; given a document followed by a suitable prompt, a model fine-tuned for the task can produce a summary.
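Text completion is the generation loop seeded with a prompt: the model predicts a token, the token is appended, and the context is cropped to the model's maximum length (its block size) before the next prediction. To keep the sketch self-contained, a toy character bigram counter stands in for a trained GPT; `BigramModel` and `complete` are hypothetical names, and greedy decoding replaces sampling.

```python
from collections import Counter, defaultdict


class BigramModel:
    """Toy stand-in for a trained GPT: predicts the most frequent next character."""

    def __init__(self, text):
        self.counts = defaultdict(Counter)
        for a, b in zip(text, text[1:]):
            self.counts[a][b] += 1

    def predict(self, context):
        # A real GPT attends to the whole context; a bigram only uses the last character.
        if not context:
            return None
        followers = self.counts.get(context[-1])
        if not followers:
            return None
        return followers.most_common(1)[0][0]


def complete(model, prompt, max_new_tokens, block_size=8):
    """Autoregressively extend `prompt`, feeding the model at most block_size tokens."""
    out = prompt
    for _ in range(max_new_tokens):
        context = out[-block_size:]     # crop to the model's context window
        nxt = model.predict(context)
        if nxt is None:                 # nothing ever followed this character
            break
        out += nxt
    return out
```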

Language Modeling Research: Researchers in the field of natural language processing can benefit from nanoGPT for language modeling experiments. The software provides a flexible and customizable framework for training and fine-tuning GPT models, allowing researchers to explore different architectures, techniques, and datasets.
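Language-modeling experiments are usually compared by validation loss, the average negative log-likelihood the model assigns to the true next tokens; perplexity is its exponential. A small sketch computing both from per-token probabilities; the function name is hypothetical and the inputs are made up for illustration, not results from nanoGPT:

```python
import math


def nll_and_perplexity(true_token_probs):
    """Mean negative log-likelihood (nats per token) and perplexity."""
    if not true_token_probs:
        raise ValueError("need at least one probability")
    nll = -sum(math.log(p) for p in true_token_probs) / len(true_token_probs)
    return nll, math.exp(nll)


# A model that is uniform over a 65-symbol vocabulary has perplexity 65.
loss, ppl = nll_and_perplexity([1 / 65] * 100)
print(f"loss={loss:.3f} nats, perplexity={ppl:.1f}")
```

Perplexity reads as an "effective number of choices" per token, which makes it easier to compare runs intuitively than raw loss.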

Conclusion:

nanoGPT is a user-friendly and efficient tool for training and fine-tuning medium-sized GPT models. Its simple codebase and clear instructions make it easy to train models from scratch or adapt pretrained checkpoints for specific needs. The software is suitable for various applications, including natural language generation, text completion, summarization, and language modeling research, and the models it produces can generate coherent, contextually relevant text.

NanoGPT Alternatives

GPT-NeoX-20B is a 20 billion parameter autoregressive language model trained on the Pile using the GPT-NeoX library.

Discover how TextGen revolutionizes language generation tasks with extensive model compatibility. Create content, develop chatbots, and augment datasets effortlessly.

Unlock creativity & productivity with Playground ChatGPT. Tailor prompts, adjust controls, and access multiple AI models for diverse content generation.

Process large text files efficiently with LightspeedGPT. It splits and handles extensive files, uses multithreading, and integrates GPT-3.5 and GPT-4 models for optimal performance.