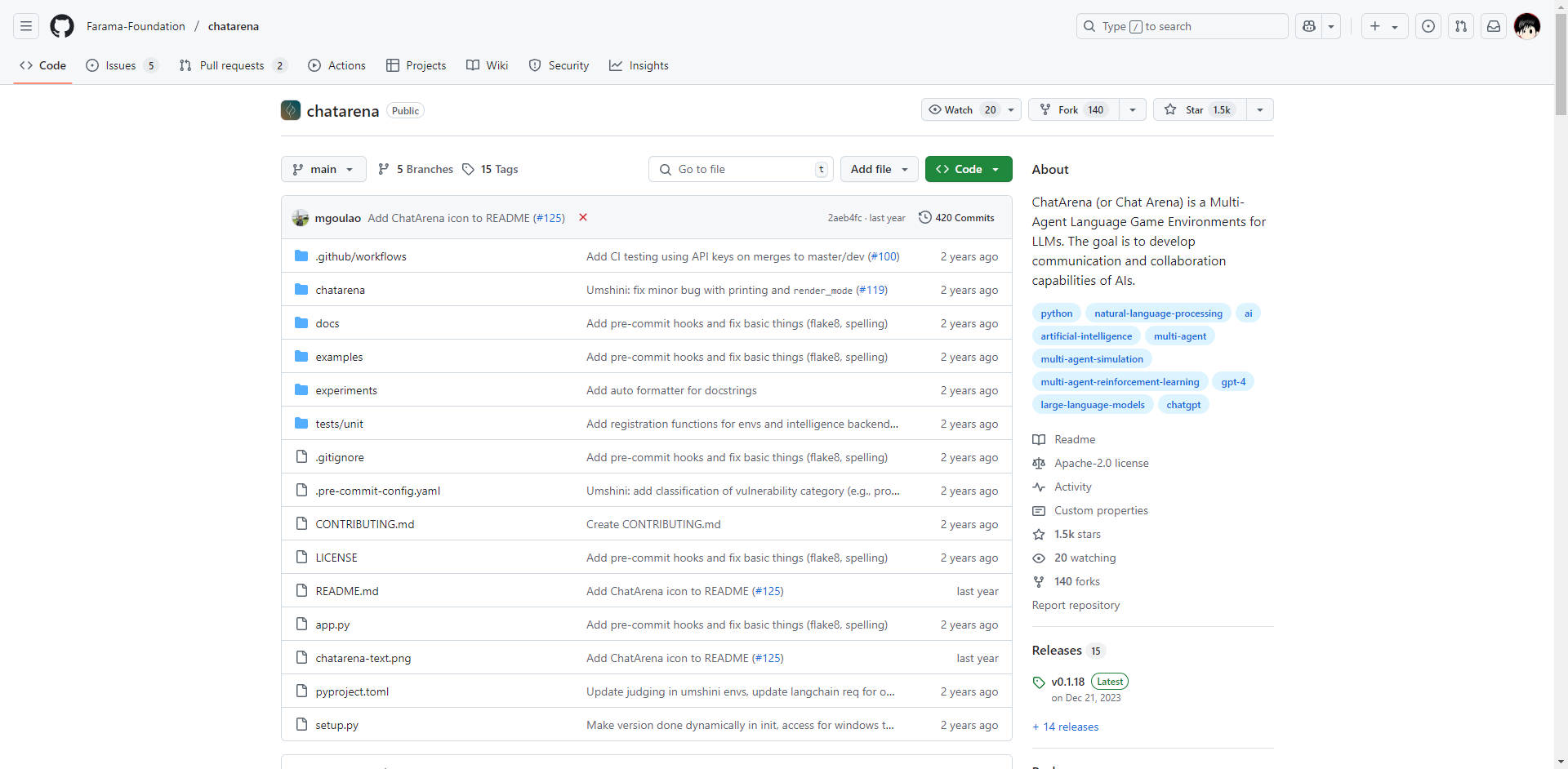

What is ChatArena?

ChatArena is a Python library that helps researchers and developers explore, benchmark, and train autonomous Large Language Model (LLM) agents in multi-agent language game environments. It provides a structured yet flexible framework for simulating social interactions, which you can use to study how LLM agents communicate and collaborate.

Core Capabilities

ChatArena offers the essential tools you need to work with LLM agents in dynamic, interactive settings:

🗣️ Flexible Framework for Defining Interactions: At its core, ChatArena provides an abstract framework built on principles similar to Markov Decision Processes. You use it to define multiple players, the environments they act in, and the interactions between them, which keeps the design of each simulation in your hands.

🌍 Rich Set of Language Game Environments: The library includes a variety of pre-built environments designed for understanding, benchmarking, or training LLM agents. Explore scenarios ranging from simple conversations and rule-based games like Rock-paper-scissors and Tic-tac-toe (both moderator-driven and hard-coded) to more complex social deduction games like Chameleon, and adaptations of classic games like Chess from PettingZoo.

🖥️ User-Friendly Interfaces: Develop and test your LLM agents through a Web UI or a Command Line Interface (CLI). Both let you interact with your agents and prompt-engineer them while they participate in an environment.

🔧 Component-Based Customization: Tailor ChatArena to your research needs. The architecture is built from four distinct, customizable components (Arena, Environment, Language Backend, and Player), so you can modify game loops, define new game dynamics, integrate different language models, or customize agent interaction logic.
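To make the component split concrete, here is a minimal, self-contained sketch of how a player, an environment, a language backend, and an arena loop could fit together in an MDP-like turn cycle (observe, act, update state). Every class and method name below is a hypothetical stand-in written for illustration; none of it is ChatArena's actual API, and the backend simply echoes text instead of calling an LLM.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# NOTE: all names here are illustrative assumptions, not ChatArena classes.
Message = Tuple[str, str]  # (speaker name, text)


class EchoBackend:
    """Stand-in for a language backend; a real one would query an LLM."""

    def query(self, role: str, history: List[Message]) -> str:
        last = history[-1][1] if history else "nothing yet"
        return f"{role} replies to: {last}"


@dataclass
class Player:
    """An agent: a name, a role description, and a backend that produces text."""
    name: str
    role: str
    backend: EchoBackend

    def act(self, observation: List[Message]) -> str:
        return self.backend.query(self.role, observation)


@dataclass
class Conversation:
    """Environment: owns the state, the turn order, and the end condition."""
    player_names: List[str]
    max_turns: int
    history: List[Message] = field(default_factory=list)
    turn: int = 0

    def next_player(self) -> str:
        # Simple round-robin turn order.
        return self.player_names[self.turn % len(self.player_names)]

    def observe(self) -> List[Message]:
        # Every player sees the full history in this sketch.
        return list(self.history)

    def step(self, player_name: str, action: str) -> None:
        self.history.append((player_name, action))
        self.turn += 1

    def is_terminal(self) -> bool:
        return self.turn >= self.max_turns


@dataclass
class Arena:
    """Game loop: asks the environment whose turn it is and routes messages."""
    players: List[Player]
    env: Conversation

    def run(self) -> List[Message]:
        by_name = {p.name: p for p in self.players}
        while not self.env.is_terminal():
            current = by_name[self.env.next_player()]
            action = current.act(self.env.observe())
            self.env.step(current.name, action)
        return self.env.history


if __name__ == "__main__":
    backend = EchoBackend()
    players = [Player("alice", "Optimist", backend), Player("bob", "Skeptic", backend)]
    env = Conversation(["alice", "bob"], max_turns=4)
    for speaker, text in Arena(players, env).run():
        print(f"{speaker}: {text}")
```

Keeping the turn order and end condition inside the environment, and the text generation inside the backend, means each piece can be swapped without touching the loop. That is the kind of separation the component list above describes.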

Practical Applications

ChatArena helps you tackle key challenges in LLM agent research and development:

Evaluating Agent Performance in Complex Scenarios: Deploy your LLM agents into environments like the Chameleon social deduction game to rigorously test their ability to strategize, deceive, identify deception, and manage incomplete information under pressure.

Benchmarking Different LLMs: Use standard game environments like Tic-tac-toe or Chess to create consistent benchmarks, so you can compare the performance, decision-making, and rule adherence of different LLMs or of different versions of your own agents.

Prototyping and Training Custom Agents: Leverage ChatArena's flexible framework to design novel multi-agent interactions specific to your research questions. Develop and iterate on custom agents, using the provided interfaces and structure to streamline the training or fine-tuning process for specific communication or collaboration tasks.
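As a rough illustration of the benchmarking idea above, the sketch below plays repeated Rock-paper-scissors rounds between two agents and tallies wins, draws, and illegal moves, which covers both performance and rule adherence. It is a plain-Python sketch under stated assumptions: the scripted policies stand in for LLM-backed agents, and `play_match`, `always_rock`, and `counter_last` are hypothetical names, not part of ChatArena.

```python
from collections import Counter
from typing import Callable, List

MOVES = ("rock", "paper", "scissors")
# BEATS[x] is the move that x defeats.
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}

# A policy maps the opponent's past moves to this round's move.
# In a real benchmark this would wrap an LLM call (assumption for illustration).
Policy = Callable[[List[str]], str]


def always_rock(opponent_history: List[str]) -> str:
    return "rock"


def counter_last(opponent_history: List[str]) -> str:
    """Play whatever beats the opponent's previous move."""
    if not opponent_history:
        return "paper"
    last = opponent_history[-1]
    # Fall back to "paper" if the opponent's last move was not a legal one.
    return next((m for m in MOVES if BEATS[m] == last), "paper")


def play_match(a: Policy, b: Policy, rounds: int) -> Counter:
    """Run a fixed number of rounds and tally outcomes and rule violations."""
    tally: Counter = Counter()
    hist_a: List[str] = []
    hist_b: List[str] = []
    for _ in range(rounds):
        move_a, move_b = a(hist_b), b(hist_a)
        legal_a, legal_b = move_a in MOVES, move_b in MOVES
        if not legal_a:
            tally["a_illegal"] += 1
        if not legal_b:
            tally["b_illegal"] += 1
        if legal_a and legal_b:  # only score rounds where both moves are legal
            if move_a == move_b:
                tally["draw"] += 1
            elif BEATS[move_a] == move_b:
                tally["a_win"] += 1
            else:
                tally["b_win"] += 1
        hist_a.append(move_a)
        hist_b.append(move_b)
    return tally


if __name__ == "__main__":
    print(dict(play_match(always_rock, counter_last, rounds=5)))
```

Tracking illegal moves separately from losses matters when the agents are LLMs, since a model that ignores the rules is a different failure from one that plays legally but poorly.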

Why Choose ChatArena?

ChatArena combines an abstract framework for defining multi-agent language games with a ready-to-use collection of diverse environments. This balance of flexibility and out-of-the-box functionality makes it well suited to studying autonomous LLM agent behavior and social intelligence.

Conclusion

For researchers and developers focused on advancing the capabilities of autonomous LLM agents in interactive settings, ChatArena provides the essential environments and flexible framework you need. Explore the possibilities for understanding, benchmarking, and training sophisticated AI behaviors.

Learn more about ChatArena and get started today.