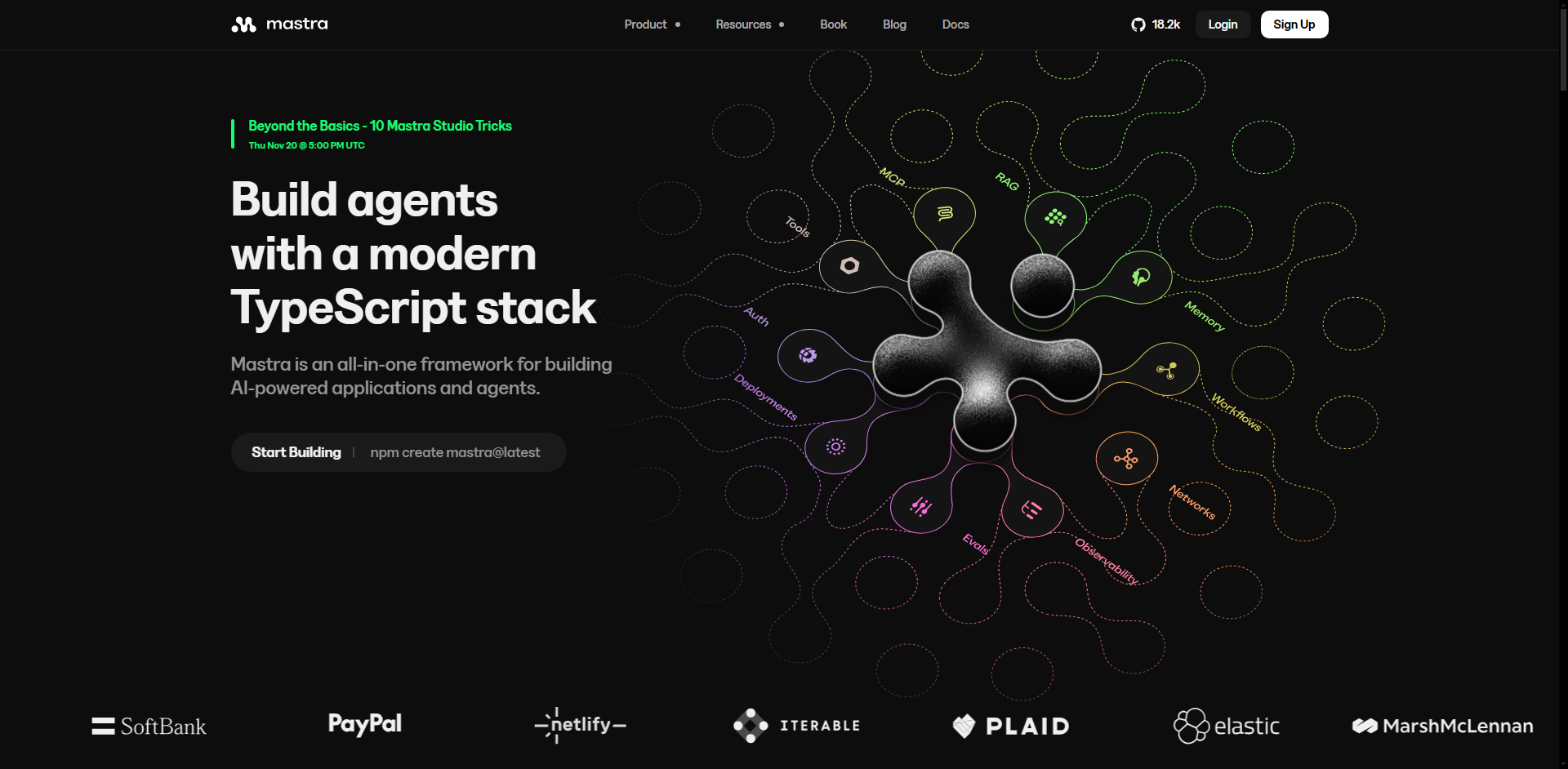

What is Mastra?

Mastra is a TypeScript-native framework for developing, tuning, and scaling AI applications and autonomous agents. It addresses the complexity of integrating diverse models and managing multi-step agentic workflows by covering the path from early prototyping to production deployment in a single framework. For developers already working in the TypeScript ecosystem (Node.js, React, Next.js), Mastra offers a direct path to shipping AI products.

Key Features

Mastra is built around a set of integrated components for developing reliable agents and complex AI-driven processes.

🌎 Universal Model Routing

Connect to more than 40 model providers (including OpenAI, Anthropic, and Gemini) through a single, standardized interface. Because application code depends on that interface rather than on any one provider, you can swap foundation models based on cost, performance, or availability without rewriting core application logic.
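The idea of routing behind one interface can be sketched in a few lines. This is a minimal illustration, not Mastra's API: the `ModelProvider` interface, the `stubProvider` and `routeByCost` helpers, the provider ids, and the cost figures are all hypothetical, and the stubs stand in for real SDK clients.

```typescript
// Illustrative only: these types and names are hypothetical, not Mastra's API.
interface ModelProvider {
  id: string; // placeholder id, not a real model name
  costPer1kTokens: number; // made-up numbers for the sketch
  available: boolean;
  generate(prompt: string): string;
}

// Stub providers stand in for real SDK clients.
function stubProvider(id: string, costPer1kTokens: number, available: boolean): ModelProvider {
  return { id, costPer1kTokens, available, generate: (prompt) => `[${id}] ${prompt}` };
}

// Pick the cheapest provider that is currently available.
function routeByCost(providers: ModelProvider[]): ModelProvider {
  const candidates = providers.filter((p) => p.available);
  if (candidates.length === 0) throw new Error("no model provider available");
  return candidates.reduce((best, p) => (p.costPer1kTokens < best.costPer1kTokens ? p : best));
}

const providers: ModelProvider[] = [
  stubProvider("provider-a/large", 0.03, true),
  stubProvider("provider-b/small", 0.002, false), // cheapest, but offline
  stubProvider("provider-c/medium", 0.01, true),
];

// Application code depends only on the interface, so swapping models
// is a routing decision rather than a rewrite.
const chosen = routeByCost(providers);
const reply = chosen.generate("Summarize this ticket");
```

Here the router skips the offline provider and falls back to the cheapest one that is available; the calling code never names a specific provider.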

🤖 Autonomous Agent Design

Build agents that use LLMs and external tools to solve complex, open-ended tasks. Mastra agents handle internal reasoning, tool selection, and iterative problem-solving until a designated goal or stopping condition is met, so developers do not have to script every decision point by hand.
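The loop described above (decide, call a tool, observe, repeat until done or a limit is hit) might look roughly like the following. It is a conceptual sketch under stated assumptions: `Decision`, `Model`, `Tool`, and `runAgent` are hypothetical names, and a scripted function stands in for the LLM so the example runs without any provider.

```typescript
// Hypothetical types for illustration; not Mastra's API.
type Decision =
  | { kind: "tool"; tool: string; input: string }
  | { kind: "final"; answer: string };

type Tool = (input: string) => string;
// A "model" here is any function that maps the transcript so far to a decision.
type Model = (transcript: string[]) => Decision;

function runAgent(model: Model, tools: Record<string, Tool>, goal: string, maxSteps = 5): string {
  const transcript: string[] = [`goal: ${goal}`];
  for (let step = 0; step < maxSteps; step++) {
    const decision = model(transcript);
    if (decision.kind === "final") return decision.answer; // goal reached
    const tool = tools[decision.tool];
    const observation = tool ? tool(decision.input) : `unknown tool ${decision.tool}`;
    // Feed the observation back so the next decision can use it.
    transcript.push(`${decision.tool}(${decision.input}) -> ${observation}`);
  }
  return "stopped: step limit reached"; // stopping condition
}

// Scripted stand-in for an LLM: call the "add" tool once, then answer.
const scriptedModel: Model = (transcript) => {
  const last = transcript[transcript.length - 1] ?? "";
  return transcript.length === 1
    ? { kind: "tool", tool: "add", input: "2,3" }
    : { kind: "final", answer: `The sum is ${last.split("-> ").pop()}` };
};

const tools: Record<string, Tool> = {
  add: (input) => String(input.split(",").map(Number).reduce((a, b) => a + b, 0)),
};

const answer = runAgent(scriptedModel, tools, "add 2 and 3");
```

The step limit is what keeps an agent that never produces a final answer from looping forever.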

⚙️ Graph-Based Workflow Orchestration

Implement multi-step processes with Mastra's graph-based workflow engine. A chainable syntax (.then(), .branch(), .parallel()) gives you explicit control over execution order, which matters when orchestrating critical business processes that rely on multiple steps and conditional routing.
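To make the chaining style concrete, here is a tiny self-contained engine with the same three method names. The signatures are assumptions made for this sketch and should not be read as Mastra's actual workflow API; in particular, this version is synchronous, and its `parallel` simply maps over the steps where a real engine would run them concurrently.

```typescript
// Hypothetical mini-engine for illustration; the method names mirror those
// mentioned above, but the signatures are assumptions, not Mastra's API.
type Step<I, O> = (input: I) => O;

class Workflow<I, O> {
  private readonly exec: Step<I, O>;
  private constructor(exec: Step<I, O>) {
    this.exec = exec;
  }

  static start<T>(): Workflow<T, T> {
    return new Workflow<T, T>((x) => x);
  }

  // Run the next step on the previous step's output.
  then<N>(step: Step<O, N>): Workflow<I, N> {
    return new Workflow<I, N>((input) => step(this.exec(input)));
  }

  // Route to the first step whose condition matches.
  branch<N>(routes: Array<[(value: O) => boolean, Step<O, N>]>): Workflow<I, N> {
    return new Workflow<I, N>((input) => {
      const value = this.exec(input);
      for (const [when, step] of routes) if (when(value)) return step(value);
      throw new Error("no branch matched");
    });
  }

  // Fan the same value out to several steps and collect the results.
  parallel<N>(steps: Step<O, N>[]): Workflow<I, N[]> {
    return new Workflow<I, N[]>((input) => {
      const value = this.exec(input);
      return steps.map((step) => step(value));
    });
  }

  run(input: I): O {
    return this.exec(input);
  }
}

type Order = { total: number };

const onboarding = Workflow.start<Order>()
  .then((order) => ({ ...order, validated: order.total > 0 }))
  .branch<string>([
    [(o) => !o.validated, () => "rejected"],
    [(o) => o.total >= 1000, () => "manual-review"],
    [() => true, () => "auto-approved"],
  ])
  .parallel([(status) => `email:${status}`, (status) => `audit:${status}`]);

const result = onboarding.run({ total: 1200 });
```

Because each method returns a new typed workflow, the compiler checks that every step accepts what the previous one produced.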

🤝 Human-in-the-Loop Persistence

Suspend any agent or workflow indefinitely while it waits for user input, approval, or external data, then resume execution where it left off. While paused, the system persists its exact execution state, which makes it possible to build systems that require intermittent human oversight over long periods.
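One way to picture suspend and resume is as a serializable snapshot of "which step, with what state". The sketch below assumes that model; the `Outcome` and `PausableStep` shapes and the `run` function are hypothetical and are not Mastra's API. The snapshot is plain JSON, so it could be written to any store and picked up later by another process.

```typescript
// Hypothetical shapes for illustration; not Mastra's API.
type State = Record<string, unknown>;
type Outcome =
  | { status: "suspended"; snapshot: string } // JSON, safe to store in a database
  | { status: "done"; state: State };
type PausableStep = (state: State, resumeData?: State) => { state: State; suspend?: boolean };

function run(steps: PausableStep[], snapshotJson?: string, resumeData?: State): Outcome {
  const saved: { index: number; state: State } = snapshotJson
    ? JSON.parse(snapshotJson)
    : { index: 0, state: {} };
  let state = saved.state;
  let index = saved.index;
  for (const step of steps.slice(saved.index)) {
    // Only the step being resumed receives the resume payload.
    const result = step(state, index === saved.index ? resumeData : undefined);
    state = result.state;
    if (result.suspend) {
      // Persist where we stopped; the same step runs again on resume.
      return { status: "suspended", snapshot: JSON.stringify({ index, state }) };
    }
    index++;
  }
  return { status: "done", state };
}

const steps: PausableStep[] = [
  (state) => ({ state: { ...state, draft: "Refund $40" } }),
  // Pause here until a human supplies an approval decision.
  (state, resume) =>
    resume ? { state: { ...state, approved: resume["approved"] } } : { state, suspend: true },
  (state) => ({ state: { ...state, sent: state["approved"] === true } }),
];

const paused = run(steps);
// Later, possibly in another process, after a human responds:
const finished =
  paused.status === "suspended" ? run(steps, paused.snapshot, { approved: true }) : paused;
```

The first call stops at the approval step and returns a snapshot; the second call restores that snapshot, passes in the human's decision, and runs the remaining steps.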

🧪 Production Essentials & Observability

Mastra provides built-in evaluation tools (scorers) and AI Tracing capabilities to observe, measure, and refine agent behavior. These let teams identify failure modes, track token usage, and check that output quality stays consistent once an AI product is in production.
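As a rough illustration of what a scorer does, the sketch below rates each recorded run between 0 and 1 and aggregates scores alongside token usage. The `Run` and `Scorer` types, the keyword-coverage metric, and the sample data are all invented for this example; Mastra's own scorers and tracing have their own interfaces.

```typescript
// Hypothetical scorer shape for illustration; not Mastra's scorer API.
type Run = { input: string; output: string; tokens: number };
type Scorer = (run: Run) => number; // score in [0, 1]

// Fraction of required keywords that appear in the output (case-insensitive).
function keywordCoverage(required: string[]): Scorer {
  return (run) => {
    if (required.length === 0) return 1;
    const text = run.output.toLowerCase();
    const hits = required.filter((word) => text.includes(word.toLowerCase())).length;
    return hits / required.length;
  };
}

// Aggregate scores and token usage, and list runs that fall below the threshold.
function summarize(runs: Run[], scorer: Scorer, threshold: number) {
  const scores = runs.map((run) => scorer(run));
  return {
    meanScore: scores.reduce((a, b) => a + b, 0) / Math.max(runs.length, 1),
    totalTokens: runs.reduce((sum, run) => sum + run.tokens, 0),
    failures: runs.filter((_, i) => (scores[i] ?? 0) < threshold),
  };
}

// Made-up sample runs.
const runs: Run[] = [
  { input: "refund policy?", output: "Refunds are issued within 30 days.", tokens: 120 },
  { input: "refund policy?", output: "Please contact support.", tokens: 80 },
];

const report = summarize(runs, keywordCoverage(["refund", "30 days"]), 0.5);
```

Keyword coverage is deliberately crude; the point is only that a scorer turns agent output into a number that can be tracked over time and compared against a threshold.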

Use Cases

Mastra's architecture lets developers move beyond simple chat interfaces and build action-oriented AI applications.

1. Building Domain-Specific Copilots

Develop internal or customer-facing copilots (e.g., for financial analysis or deep research) that use Retrieval-Augmented Generation (RAG). With vector query tools built on embedders and rerankers, Mastra agents can retrieve context from proprietary databases and documents, so that responses are grounded in specific domain knowledge.
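The retrieval step behind a vector query tool can be sketched with a toy embedder and cosine similarity. Everything here is a stand-in chosen so the example runs on its own: the word-count `embed` function replaces a learned embedding model, the in-memory array replaces a vector database, no reranker is included, and none of the names come from Mastra.

```typescript
// Toy stand-ins for illustration: a real system would use a learned embedding
// model and a vector database. All names here are hypothetical.
const vocabulary = ["refund", "invoice", "shipping", "delay", "tax"];

// Count vocabulary words in the text to get a fixed-length vector.
function embed(text: string): number[] {
  const words = text.toLowerCase().split(/\W+/);
  return vocabulary.map((term) => words.filter((w) => w === term).length);
}

// Cosine similarity; returns 0 when either vector is all zeros.
function cosine(a: number[], b: number[]): number {
  const dot = a.reduce((sum, x, i) => sum + x * (b[i] ?? 0), 0);
  const norm = (v: number[]) => Math.sqrt(v.reduce((sum, x) => sum + x * x, 0));
  const denom = norm(a) * norm(b);
  return denom === 0 ? 0 : dot / denom;
}

// The "vector query tool": return the k documents most similar to the query.
function vectorQuery(query: string, documents: string[], k: number): string[] {
  const q = embed(query);
  return documents
    .map((text) => ({ text, score: cosine(q, embed(text)) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((hit) => hit.text);
}

const documents = [
  "Shipping delay notices go out after 5 business days.",
  "Refund requests need the original invoice.",
  "Tax is calculated at checkout.",
];

// The retrieved passage would be placed in the agent's prompt as grounding context.
const context = vectorQuery("How do I get a refund without an invoice?", documents, 1);
```

An agent given this tool answers from the retrieved passage rather than from the model's general knowledge, which is what grounds the response in the domain's own documents.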

2. Automating Complex Multi-Step Operations

Use the workflow engine to orchestrate backend processes such as automated customer onboarding, data validation, or compliance checks, where execution requires sequential steps, external API calls, and conditional branching. The framework manages execution and state throughout, including when human input is required mid-process.

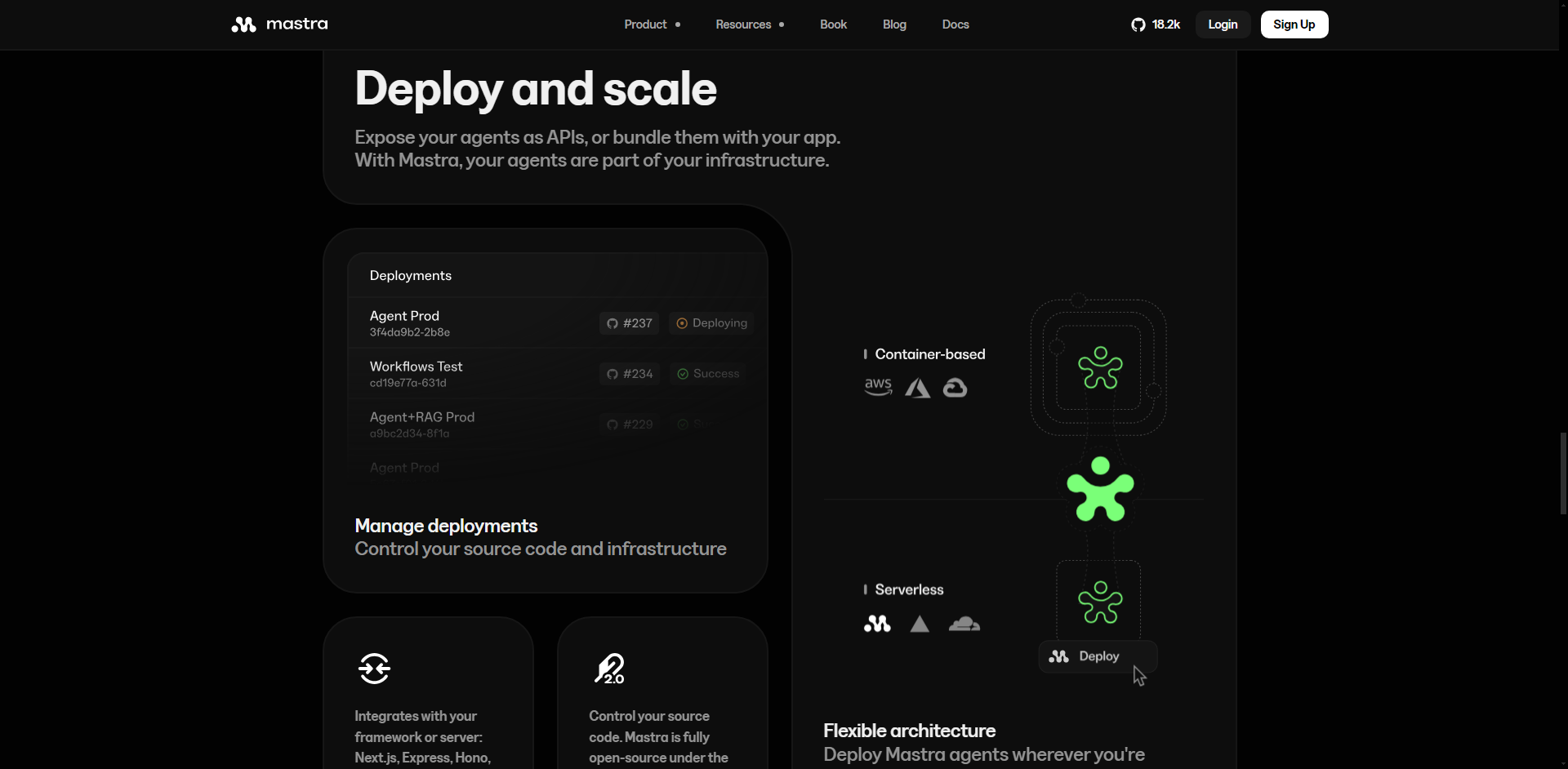

3. Integrating Generative Intelligence into Existing Apps

Bundle Mastra agents and workflows into existing frontend and backend applications built on React, Next.js, or Node.js. This lets you add agent reasoning and Generative UI components directly to your current product stack without managing separate infrastructure.

Unique Advantages

Mastra is built to address the specific challenges of deploying reliable AI in enterprise and production environments, and it differs from general-purpose tools in three main ways.

TypeScript-Native Focus: Mastra is designed from the ground up for TypeScript, offering type safety, predictability, and tooling integration. This reflects the philosophy that while Python excels at model training, TypeScript is well suited to shipping and maintaining reliable, scalable AI products in production.

All-in-One Production Framework: Unlike solutions that require stitching together separate libraries for model routing, workflow orchestration, observability, and authentication, Mastra provides a single, cohesive framework. This simplifies dependency management and shortens the path from prototype to stable production.

Open-Source and Extensible: Fully open-source under the Apache 2.0 license, Mastra ensures transparency and allows developers full control over their infrastructure and source code. You can deploy Mastra agents wherever your primary application is hosted, or as a standalone server (Express, Hono, etc.).

Conclusion

Mastra provides the structure, tools, and production focus needed to move beyond simple prototypes and deploy reliable, scalable AI agents and applications. For TypeScript developers who want to ship AI products with confidence and control, Mastra is a strong fit.

Mastra Alternatives

- Superexpert.AI: Open source platform for developers. Build flexible AI agents easily with no code, custom tools, RAG. Get full control and deploy anywhere.

- Sage: The multi-agent AI framework for complex problems. Orchestrate specialized agents, intelligently decompose tasks, and build robust, production-ready AI applications.