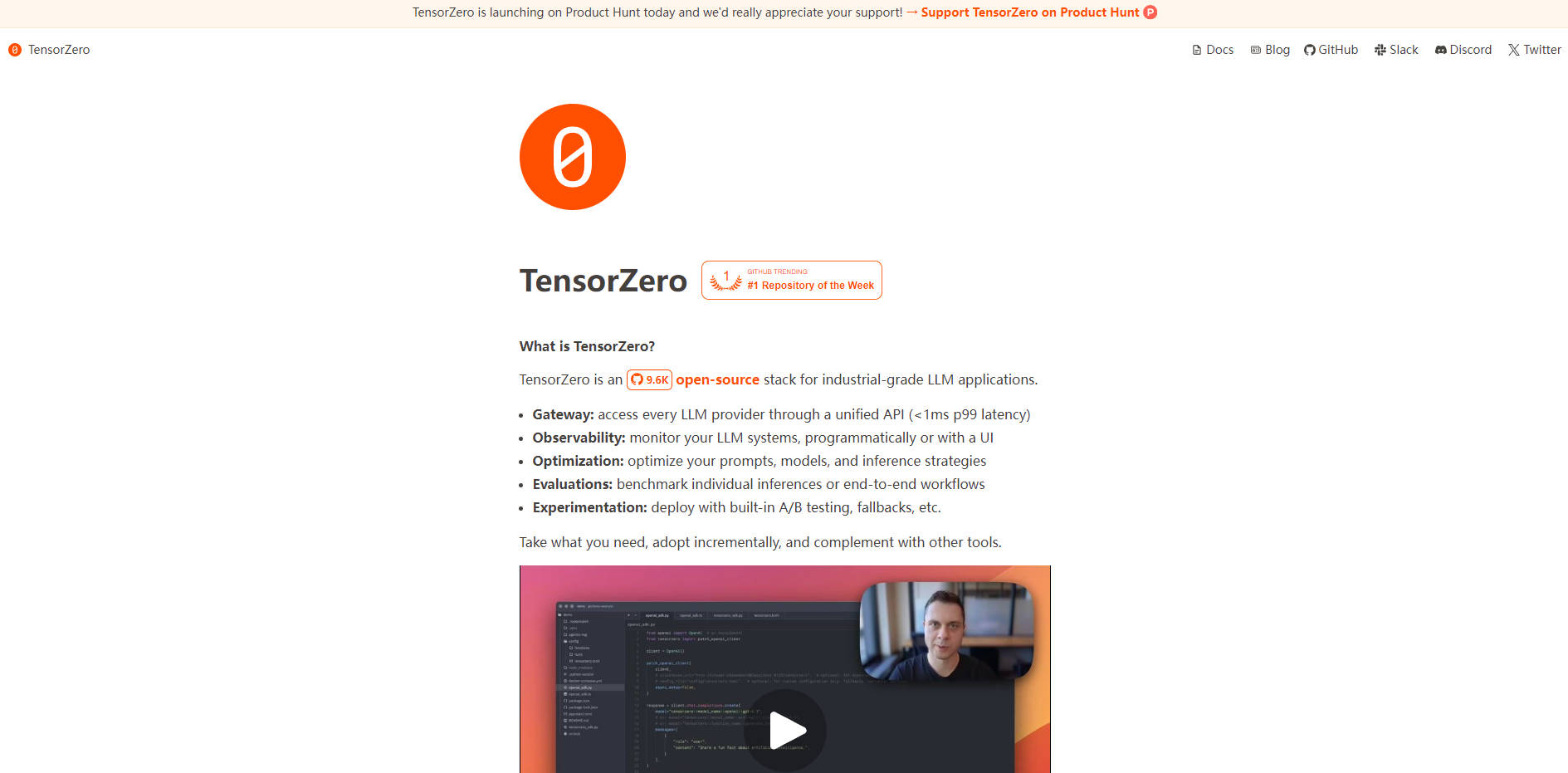

What is TensorZero?

TensorZero is an open-source, industrial-grade stack for developers building sophisticated LLM applications. It combines an LLM gateway, observability, optimization, evaluation, and experimentation in one high-performance toolkit to help you move from prototype to production with confidence. Because these components share the same data, TensorZero lets you build a feedback loop that turns production data into smarter, faster, and more cost-effective models.

Key Features

🌐 Unified LLM Gateway: Access every major LLM provider (such as OpenAI, Anthropic, and Groq) or self-hosted models through a single, consistent API. The gateway is built in Rust and engineered for performance (<1ms p99 latency overhead), so it keeps your application fast and responsive at scale.
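As a minimal sketch of what "a single, consistent API" means in practice, the Python below builds requests without sending them. It assumes a gateway running at http://localhost:3000 with an /inference endpoint. That endpoint is assumed to accept a JSON body containing a model_name in "provider::model" form and an input.messages list. Treat the endpoint path and field names as assumptions to check against the TensorZero API reference for your version.

```python
import json
import urllib.request

# Assumption: a TensorZero gateway listening locally on port 3000.
GATEWAY_URL = "http://localhost:3000"


def build_inference_request(model_name: str, user_message: str) -> urllib.request.Request:
    """Build (but do not send) a POST request to the gateway's /inference endpoint.

    The payload shape is an assumption of this sketch: a "provider::model"
    model name plus a list of chat messages under input.messages.
    """
    payload = {
        "model_name": model_name,
        "input": {"messages": [{"role": "user", "content": user_message}]},
    }
    return urllib.request.Request(
        url=f"{GATEWAY_URL}/inference",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Switching providers only changes the model name; the request shape stays the same.
requests_by_provider = [
    build_inference_request("openai::gpt-4o-mini", "Write a haiku about Rust."),
    build_inference_request("anthropic::claude-3-5-haiku-20241022", "Write a haiku about Rust."),
]

# To call a running gateway: urllib.request.urlopen(requests_by_provider[0])
```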

🔍 Integrated Observability: Automatically store and analyze every inference, metric, and piece of user feedback in your own database. You can use the TensorZero UI to debug individual calls or monitor high-level trends, giving you a complete picture of your application's performance and behavior.
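User feedback becomes useful for analysis once it is linked to the inference it describes. The sketch below only assembles such a feedback record. It assumes the gateway exposes a /feedback endpoint taking a metric_name, an inference_id and a value. The metric name "task_success" is hypothetical and would need to be declared in your own configuration.

```python
import uuid


def build_feedback_payload(metric_name: str, inference_id: uuid.UUID, value) -> dict:
    """Assemble a feedback record that ties a metric value to one logged inference.

    Assumption: the gateway's /feedback endpoint accepts these three fields.
    """
    # bool is a subclass of int, so boolean metrics pass this check too.
    if not isinstance(value, (bool, int, float)):
        raise TypeError("this sketch only accepts boolean or numeric metric values")
    return {"metric_name": metric_name, "inference_id": str(inference_id), "value": value}


# In practice the ID comes from the gateway's inference response; here it is generated locally.
inference_id = uuid.uuid4()
payload = build_feedback_payload("task_success", inference_id, True)
```

Sending this payload as JSON to the feedback endpoint would store the value next to the logged inference. The UI can then aggregate these values into trends.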

📈 Data-Driven Optimization: Turn insights into action by using production metrics and human feedback to systematically improve your system. TensorZero facilitates supervised fine-tuning, automated prompt engineering, and advanced inference strategies to enhance model accuracy and reduce operational costs.
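One common way to turn metrics into a fine-tuning signal is to keep only the inferences a metric marked as successful. Those inferences are then written out as chat-format training examples. The sketch below assumes a hypothetical export of logged inferences joined with a boolean task_success metric. It emits JSONL lines with a "messages" list, the layout used by OpenAI's chat fine-tuning API. TensorZero's own fine-tuning workflow may curate data differently.

```python
import json

# Hypothetical export: each logged inference joined with a boolean metric.
logged = [
    {"input": "Extract the invoice total: 'Total due: $42.10'", "output": '{"total": 42.10}', "task_success": True},
    {"input": "Extract the invoice total: 'Amount: 17 EUR'", "output": '{"total": null}', "task_success": False},
    {"input": "Extract the invoice total: 'Balance $9.99'", "output": '{"total": 9.99}', "task_success": True},
]


def to_sft_jsonl(records, system_prompt: str) -> str:
    """Keep successful inferences and format them as chat fine-tuning examples (one JSON object per line)."""
    lines = []
    for rec in records:
        if not rec["task_success"]:
            continue  # drop examples the metric marked as failures
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": rec["input"]},
                {"role": "assistant", "content": rec["output"]},
            ]
        }
        lines.append(json.dumps(example))
    return "\n".join(lines)


dataset = to_sft_jsonl(logged, "Return the invoice total as JSON.")
```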

📊 Robust Evaluation Framework: Make informed decisions by benchmarking prompts, models, and configurations. Use heuristics, LLM-powered judges, or custom logic to run evaluations that function like unit and integration tests for your AI workflows.
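To illustrate "evaluations that function like unit tests", here is a small, hypothetical harness. Each evaluator takes a model output and a reference and returns pass or fail. The harness reports a pass rate per evaluator. The two heuristic checks are illustrative. An LLM-powered judge could be plugged in as another function with the same signature. This is not TensorZero's evaluation API.

```python
import json
from typing import Callable

# An evaluator takes (model_output, reference) and returns True if the case passes.
Evaluator = Callable[[str, str], bool]


def exact_match(output: str, reference: str) -> bool:
    return output.strip() == reference.strip()


def valid_json(output: str, reference: str) -> bool:
    # Heuristic check that ignores the reference: does the output parse as JSON?
    try:
        json.loads(output)
        return True
    except json.JSONDecodeError:
        return False


def run_eval(cases, evaluators: dict[str, Evaluator]) -> dict[str, float]:
    """Return the pass rate of each evaluator over (output, reference) pairs."""
    results = {}
    for name, check in evaluators.items():
        passed = sum(check(out, ref) for out, ref in cases)
        results[name] = passed / len(cases)
    return results


cases = [
    ('{"total": 42.1}', '{"total": 42.1}'),
    ('{"total": 9.99}', '{"total": 9.9}'),
    ("total is 17", '{"total": 17}'),
]
scores = run_eval(cases, {"exact_match": exact_match, "valid_json": valid_json})
```

As with a test suite, you might gate a prompt or model change on thresholds such as a minimum valid_json pass rate. The thresholds you choose are a policy decision for your application.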

🧪 Confident Experimentation: Ship new features and models safely with built-in A/B testing, routing, and automatic fallbacks. This lets you validate changes against real-world traffic before rolling them out to all users.
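TensorZero handles this routing through its configuration. The Python below is not its implementation. It is a minimal sketch of the two ideas involved: weighted A/B assignment and ordered fallback. The variant names, the 90/10 weights and the hash-based assignment by episode ID are assumptions chosen for illustration.

```python
import hashlib
from typing import Callable


def pick_variant(weights: dict[str, float], episode_id: str) -> str:
    """Map an episode to a variant with probability proportional to its weight.

    Hashing the episode ID (an assumption of this sketch) keeps one episode
    on the same variant across calls.
    """
    names = sorted(weights)  # fixed order so assignment is reproducible
    total = sum(weights[n] for n in names)
    digest = hashlib.sha256(episode_id.encode("utf-8")).digest()
    # Take 53 bits so the fraction is exactly representable and lies in [0, 1).
    fraction = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    point = fraction * total
    cumulative = 0.0
    for name in names:
        cumulative += weights[name]
        if point < cumulative:
            return name
    return names[-1]  # guard against floating-point rounding at the upper edge


def call_with_fallback(variants: list[str], call: Callable[[str], str]) -> str:
    """Try each variant in order and return the first successful response."""
    errors = []
    for variant in variants:
        try:
            return call(variant)
        except Exception as exc:  # a real system would catch provider errors specifically
            errors.append(f"{variant}: {exc}")
    raise RuntimeError("all variants failed: " + "; ".join(errors))


# Usage: a 90/10 split between hypothetical "baseline" and "candidate" variants.
weights = {"baseline": 0.9, "candidate": 0.1}
assignments = [pick_variant(weights, f"episode-{i}") for i in range(1000)]


def flaky_call(variant: str) -> str:
    # Simulated provider: the primary times out and the backup answers.
    if variant == "primary":
        raise TimeoutError("provider timed out")
    return f"answer from {variant}"


answer = call_with_fallback(["primary", "backup"], flaky_call)
```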

Use Cases

Dramatically Reduce API Costs: Imagine your application relies on a large, expensive model for data extraction. Using TensorZero, you can collect a small dataset of high-quality examples from production, then use the fine-tuning tools to train a much smaller model (like GPT-4o Mini). The result is a model that can outperform the larger one on your specific task at a fraction of the cost and latency.

Build a Reliable Research Agent: You're developing an agentic RAG system that answers complex questions by searching multiple sources. With TensorZero's observability, you can trace the agent's entire reasoning process for each query. When it fails, you can pinpoint the exact step, correct its behavior, and add the interaction to an evaluation dataset to prevent future regressions.
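Pinpointing the failing step requires grouping an agent's inferences by the query they belong to and ordering them. The sketch below assumes each logged inference records an episode identifier (one episode per user query) and a step index. The row schema and the "ok" flag are hypothetical. The first failing step in each episode is collected as a regression case for future evaluations.

```python
from collections import defaultdict

# Hypothetical log rows: each inference records its episode (one user query) and step.
rows = [
    {"episode_id": "q1", "step": 2, "function": "search", "ok": True},
    {"episode_id": "q1", "step": 1, "function": "plan", "ok": True},
    {"episode_id": "q1", "step": 3, "function": "answer", "ok": False},
    {"episode_id": "q2", "step": 1, "function": "plan", "ok": True},
    {"episode_id": "q2", "step": 2, "function": "answer", "ok": True},
]


def traces_by_episode(rows):
    """Group inferences by episode and order each group by step."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row["episode_id"]].append(row)
    return {eid: sorted(steps, key=lambda r: r["step"]) for eid, steps in grouped.items()}


def first_failure(trace):
    """Return the earliest failing step in a trace, or None if every step passed."""
    return next((step for step in trace if not step["ok"]), None)


regression_cases = []
for episode_id, trace in traces_by_episode(rows).items():
    failed = first_failure(trace)
    if failed is not None:
        # Keep the failing interaction so it can be replayed in future evaluations.
        regression_cases.append({"episode_id": episode_id, "failed_step": failed["function"]})
```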

Align a Model to Nuanced Preferences: Your goal is to generate creative content (like haikus) that matches a specific, subjective style. By collecting user feedback on generated content, you can create a preference dataset and use it to fine-tune a base model. TensorZero's integrated stack allows this "data flywheel" to operate continuously, progressively aligning the model's output with your desired taste.
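A preference dataset is usually a set of (prompt, chosen, rejected) pairs. This is the input format used by preference-optimization methods such as DPO. The sketch below assumes several outputs were generated per prompt and rated by users on a hypothetical 1 to 5 scale. It pairs every two outputs of the same prompt whose ratings differ. The field names and sample data are placeholders.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical feedback: several haikus per prompt, each rated 1-5 by users.
rated = [
    {"prompt": "autumn", "output": "haiku A", "rating": 5},
    {"prompt": "autumn", "output": "haiku B", "rating": 2},
    {"prompt": "autumn", "output": "haiku C", "rating": 2},
    {"prompt": "winter", "output": "haiku D", "rating": 4},
]


def preference_pairs(records):
    """Build (prompt, chosen, rejected) pairs from outputs that share a prompt."""
    by_prompt = defaultdict(list)
    for rec in records:
        by_prompt[rec["prompt"]].append(rec)
    pairs = []
    for prompt, recs in by_prompt.items():
        for a, b in combinations(recs, 2):
            if a["rating"] == b["rating"]:
                continue  # ties carry no preference signal
            chosen, rejected = (a, b) if a["rating"] > b["rating"] else (b, a)
            pairs.append({"prompt": prompt, "chosen": chosen["output"], "rejected": rejected["output"]})
    return pairs


pairs = preference_pairs(rated)
```

Prompts with a single rated output, like "winter" here, contribute no pairs. Collecting several candidates per prompt is therefore what keeps the flywheel supplied with training signal.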

Why Choose TensorZero?

For teams focused on building durable, high-quality LLM systems, TensorZero offers several key advantages over a collection of disparate tools.

A Truly Integrated Stack: TensorZero isn't just a set of tools; it's a unified system where each component enhances the others. For example, data logged through the Observability module can be used directly to build evaluation datasets, and the insights from those evaluations can then guide fine-tuning, all within a single, cohesive workflow.

Engineered for Production Demands: Performance is a core design principle. The Rust-based gateway ensures minimal latency even at high throughput (10k+ QPS). The entire stack is self-hosted, giving you complete control over your data, security, and infrastructure, with full support for GitOps workflows.

Completely Open-Source and Transparent: TensorZero is 100% open-source (Apache 2.0) with no paid features or vendor lock-in. You have full access to the codebase and the freedom to customize, extend, and integrate it as needed, so you can fit it into your existing technical environment.

Conclusion

TensorZero provides the critical infrastructure for building and scaling professional LLM applications. By unifying the LLMOps lifecycle into a single, high-performance stack, it empowers you to create a continuous improvement loop driven by real-world data. This principled approach helps you build more reliable, intelligent, and efficient AI products.

Explore the Quick Start guide to deploy your first production-ready LLM application in just 5 minutes.

Frequently Asked Questions (FAQ)

1. How much does TensorZero cost? Nothing. TensorZero is 100% free and open-source under the Apache 2.0 license. It is self-hosted, so you only pay for the infrastructure you run it on, plus whatever the LLM providers you call charge for their APIs. There are no paid features or enterprise licenses.

2. Is TensorZero ready for production use? Yes. It was designed from the ground up for industrial-grade applications and is already being used in production environments, including at a large financial institution for automating code changelogs.

3. What languages and frameworks can I use with TensorZero? You can integrate TensorZero from any major programming language. It offers a dedicated Python client, compatibility with any OpenAI SDK (e.g., for Python or Node.js), and a standard HTTP API for all other environments.
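For environments that already use an OpenAI SDK, the request the SDK sends is a standard Chat Completions body. The sketch below builds one with the standard library and does not send it. It assumes the gateway serves an OpenAI-compatible route under http://localhost:3000/openai/v1. It also assumes the gateway accepts model names prefixed with "tensorzero::model_name::". Both the base URL and the naming convention are assumptions to confirm in the TensorZero documentation.

```python
import json
import urllib.request


def build_openai_compatible_request(
    model: str,
    prompt: str,
    base_url: str = "http://localhost:3000/openai/v1",  # assumed gateway route
) -> urllib.request.Request:
    """Build (but do not send) a standard Chat Completions request aimed at the gateway."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Assumed naming convention for routing a request to a specific provider model.
req = build_openai_compatible_request("tensorzero::model_name::openai::gpt-4o-mini", "Hello!")
```

Pointing an OpenAI SDK client's base URL at the same address would produce an equivalent request. That is what lets existing Python or Node.js code talk to the gateway with minimal changes.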