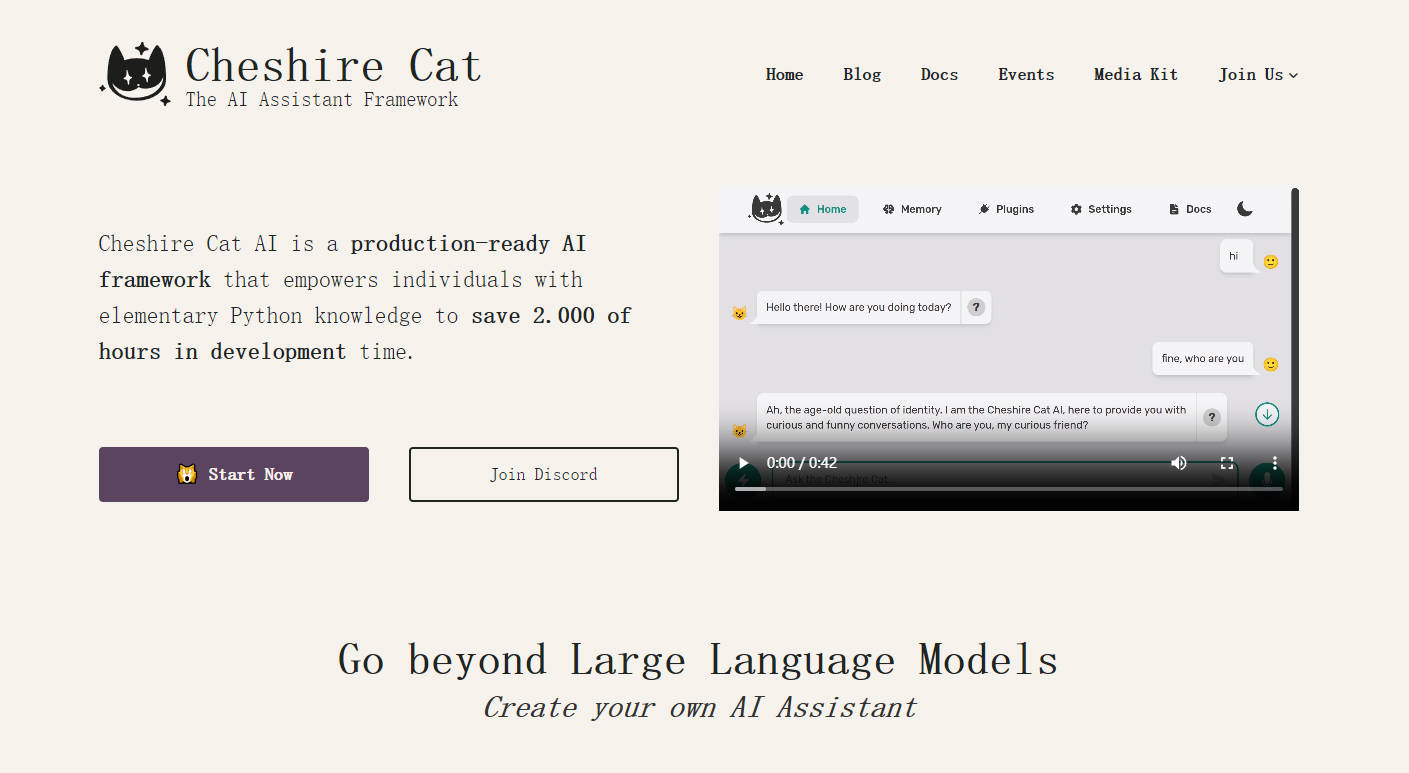

What is Cheshire Cat AI?

Cheshire Cat AI is a production-ready framework for developers and architects who need to deploy, customize, and scale conversational AI agents quickly. Its "microservice first" architecture runs the agent as a standalone service that your software reaches over an HTTP/WebSocket API, so you can add a conversational layer to new or existing enterprise applications without rebuilding them around heavy infrastructure or a rigid framework.

Key Features

🔌 Effortless Extensibility via Simple Plugins

Cheshire Cat AI uses a deliberately simple plugin architecture in which "a plugin is just a folder." You add custom hooks, tools, and forms by writing ordinary Python files inside that folder, with no deep OOP hierarchy to learn, so you can focus on what the agent does. Plugins are live-reloaded during debug sessions, which shortens the edit-and-test cycle.
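As a rough illustration of what one Python file inside a plugin folder might contain, the sketch below defines one tool and one hook. The import path `cat.mad_hatter.decorators`, the `(tool_input, cat)` tool signature, and the `agent_prompt_prefix` hook name follow the framework's 1.x plugin documentation as best understood here, so check them against your installed version. The file name, the `socks_price` tool, and its price table are hypothetical, and the fallback decorators exist only so the file can be imported outside the Cat.

```python
# my_plugin/socks.py  (hypothetical file inside a plugin folder)
try:
    # Available when the file is loaded by the Cat.
    from cat.mad_hatter.decorators import hook, tool
except ImportError:
    # Stand-in no-op decorators so the sketch can be imported on its own.
    def hook(func):
        return func

    def tool(func):
        return func

# Hypothetical data; a real tool would call an API or a database here.
SOCK_PRICES = {"red": 9.5, "blue": 8.0}


@tool
def socks_price(tool_input, cat):
    """How much do socks of a given color cost? Input is the sock color."""
    color = str(tool_input).strip().lower()
    if color not in SOCK_PRICES:
        return f"No {color} socks in the catalog."
    return f"{color} socks cost {SOCK_PRICES[color]:.2f} EUR."


@hook
def agent_prompt_prefix(prefix, cat):
    """Replace the default system prompt prefix with a shop-assistant persona."""
    return "You are a concise assistant for an online sock shop."
```

The tool's docstring matters in this design: it is the description the LLM reads when deciding whether to call the function, so it should state plainly what the tool does and what input it expects.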

🧠 Advanced Conversational Intelligence (Hooks, Tools, Forms)

Build agents that do more than simple Q&A. Hooks let you customize the system prompt and the message pipeline, giving you fine-grained control over agent behavior. Tools provide function calling, so the LLM can interact with external APIs, databases, or home-automation (domotics) systems. Forms handle goal-oriented, multi-turn conversations, gathering the structured information defined by a Pydantic model.
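The multi-turn gathering that Forms perform can be pictured with a small, framework-independent sketch. It is not the Cat's Forms API: a standard-library dataclass stands in for the Pydantic model, and a plain dictionary stands in for the LLM step that extracts field values from each user message. `TicketRequest` and `FormState` are hypothetical names.

```python
from dataclasses import dataclass, field, fields
from typing import Optional


@dataclass
class TicketRequest:
    """Stand-in for the Pydantic model that describes the data to collect."""
    title: Optional[str] = None
    priority: Optional[str] = None
    description: Optional[str] = None


@dataclass
class FormState:
    """Tracks which fields are still missing across conversation turns."""
    data: TicketRequest = field(default_factory=TicketRequest)

    def update(self, extracted: dict) -> None:
        # Merge values extracted from the latest user message,
        # ignoring unknown keys and empty values.
        known = {f.name for f in fields(self.data)}
        for name, value in extracted.items():
            if name in known and value:
                setattr(self.data, name, value)

    def missing(self) -> list:
        return [f.name for f in fields(self.data) if getattr(self.data, f.name) is None]

    def next_prompt(self) -> str:
        # Ask for the first missing field, or report completion.
        todo = self.missing()
        if todo:
            return f"Please provide the ticket {todo[0]}."
        return "Thanks, the ticket is complete."


form = FormState()
form.update({"title": "VPN down"})  # values extracted from turn 1
first_question = form.next_prompt()
form.update({"priority": "high", "description": "Cannot connect since 9am"})  # turn 2
final_message = form.next_prompt()
```

The point of the pattern is that the model declares what must be collected, while the conversation loop only asks for whatever is still missing, so the user can supply fields in any order.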

📚 Train with Custom Knowledge and Data

Equip your AI agent with domain expertise by uploading documents such as PDF, TXT, Markdown, and JSON files, or by ingesting web pages. The built-in RAG (Retrieval-Augmented Generation) capability retrieves relevant passages from this material when a question arrives, so responses are grounded in your organization's own data rather than only in the LLM's general knowledge.
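To make the retrieval step concrete, here is a toy, standard-library sketch of RAG: text chunks are embedded, the chunks most similar to the question are retrieved, and they are placed in the prompt. The word-count "embedding" and the in-memory list are simplifying assumptions; the Cat relies on a configurable embedder model and a vector database instead, and every function name here is hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real embedder returns dense vectors)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list, k: int = 1) -> list:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(question: str, chunks: list, k: int = 1) -> str:
    """Place the retrieved chunks in front of the question."""
    context = "\n".join(retrieve(question, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


chunks = [
    "Refunds are issued within 14 days of the return request.",
    "The office is closed on public holidays.",
    "VPN access requires a hardware token.",
]
top = retrieve("How many days do refunds take?", chunks)
```

Here `top` contains only the refund chunk, because it is the only one sharing words with the question; the LLM would then answer from that passage.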

⚙️ Flexible, Docker-Based Deployment

The framework is fully Dockerized for "plug & play" integration into your existing architecture. The Cat runs as a single container alongside supporting services such as a vector database (e.g., Qdrant), an LLM runner (e.g., Ollama, vLLM), and a reverse proxy (e.g., Caddy, Nginx), which keeps environments consistent from development to production and makes the deployment easier to scale.

Use Cases

Building Transactional Enterprise Assistants: Develop internal AI agents that go beyond reporting. Using Tools, the agent can query your CRM or ERP system. Combined with Forms, it can handle complex, multi-step requests, such as processing a new ticket or gathering structured data for an HR request, all within a natural language dialogue.

Integrating Conversational AI into Existing Software: If you have an existing application (such as a Django or WordPress site) and want to add a conversational layer without a complete overhaul, the microservice-first architecture and the HTTP/WebSocket API make this straightforward. You can use community-built clients in common languages to stream tokens and notifications directly into your existing user interfaces.
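For a sense of how an existing backend could talk to the Cat over HTTP, the sketch below uses only Python's standard library. The base URL `http://localhost:1865`, the `POST /message` endpoint, and the `{"text": ...}` payload are assumptions based on the framework's 1.x defaults and may differ in your version or deployment; authentication is omitted. `build_message_request` and `ask_cat` are hypothetical helper names.

```python
import json
import urllib.request

CAT_URL = "http://localhost:1865"  # assumed default address; adjust to your deployment


def build_message_request(text: str, base_url: str = CAT_URL) -> urllib.request.Request:
    """Prepare a JSON POST carrying one user message (endpoint path is an assumption)."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/message",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_cat(text: str, base_url: str = CAT_URL, timeout: float = 30.0) -> dict:
    """Send the message and return the decoded JSON reply (requires a running Cat)."""
    request = build_message_request(text, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

A plain request/response call like this suits server-side integrations; for token-by-token streaming into a user interface, the WebSocket API or one of the community clients is the better fit.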

Creating Domain-Specific Knowledge Bots: Quickly launch specialized chatbots for legal, technical support, or internal training. With the document upload feature, you ground the agent's answers in your internal manuals, technical specs, or compliance guidelines. The Admin Panel lets you manage the memory contents and configure the commercial or open-source LLMs and embedders best suited to your domain.

Conclusion

Cheshire Cat AI provides the architectural flexibility and advanced conversational features needed to move your AI agents from concept to production rapidly and reliably. By prioritizing easy integration (Docker), robust extensibility (Plugins), and comprehensive dialogue management (Hooks, Tools, Forms), it empowers developers to build sophisticated, context-aware applications that truly interact with the world.