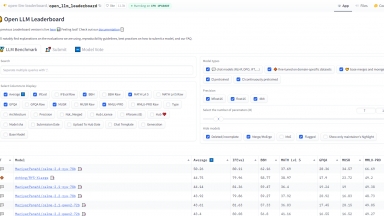

Huggingface's Open LLM Leaderboard

BenchLLM by V7

Huggingface's Open LLM Leaderboard

| Field | Value |
| --- | --- |
| Launched | |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | LLM Benchmark Leaderboard, Data Analysis |

BenchLLM by V7

| Field | Value |
| --- | --- |
| Launched | 2023-07 |
| Pricing Model | Free |
| Starting Price | |
| Tech used | Framer, Google Fonts, HSTS |
| Tag | Test Automation, LLM Benchmark Leaderboard |

Huggingface's Open LLM Leaderboard Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | |
| Country | |
| Month Visit | |

BenchLLM by V7 Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | 12812835 |
| Country | United States |
| Month Visit | 961 |

Estimated traffic data from Similarweb

What are some alternatives?

Klu LLM Benchmarks - Real-time Klu.ai data powers this leaderboard for evaluating LLM providers, enabling selection of the optimal API and model for your needs.

Berkeley Function-Calling Leaderboard - Explore the Berkeley Function Calling Leaderboard (also called the Berkeley Tool Calling Leaderboard) to see how accurately LLMs call functions (aka tools).

LiveBench - LiveBench is an LLM benchmark with monthly new questions from diverse sources and objective answers for accurate scoring, currently featuring 18 tasks in 6 categories and more to come.

LLM Explorer - Discover, compare, and rank Large Language Models effortlessly with LLM Extractum. Simplify your selection process and empower innovation in AI applications.

LightEval - LightEval is a lightweight LLM evaluation suite that Hugging Face has been using internally with the recently released LLM data processing library datatrove and LLM training library nanotron.