What is Huggingface's Open LLM Leaderboard?

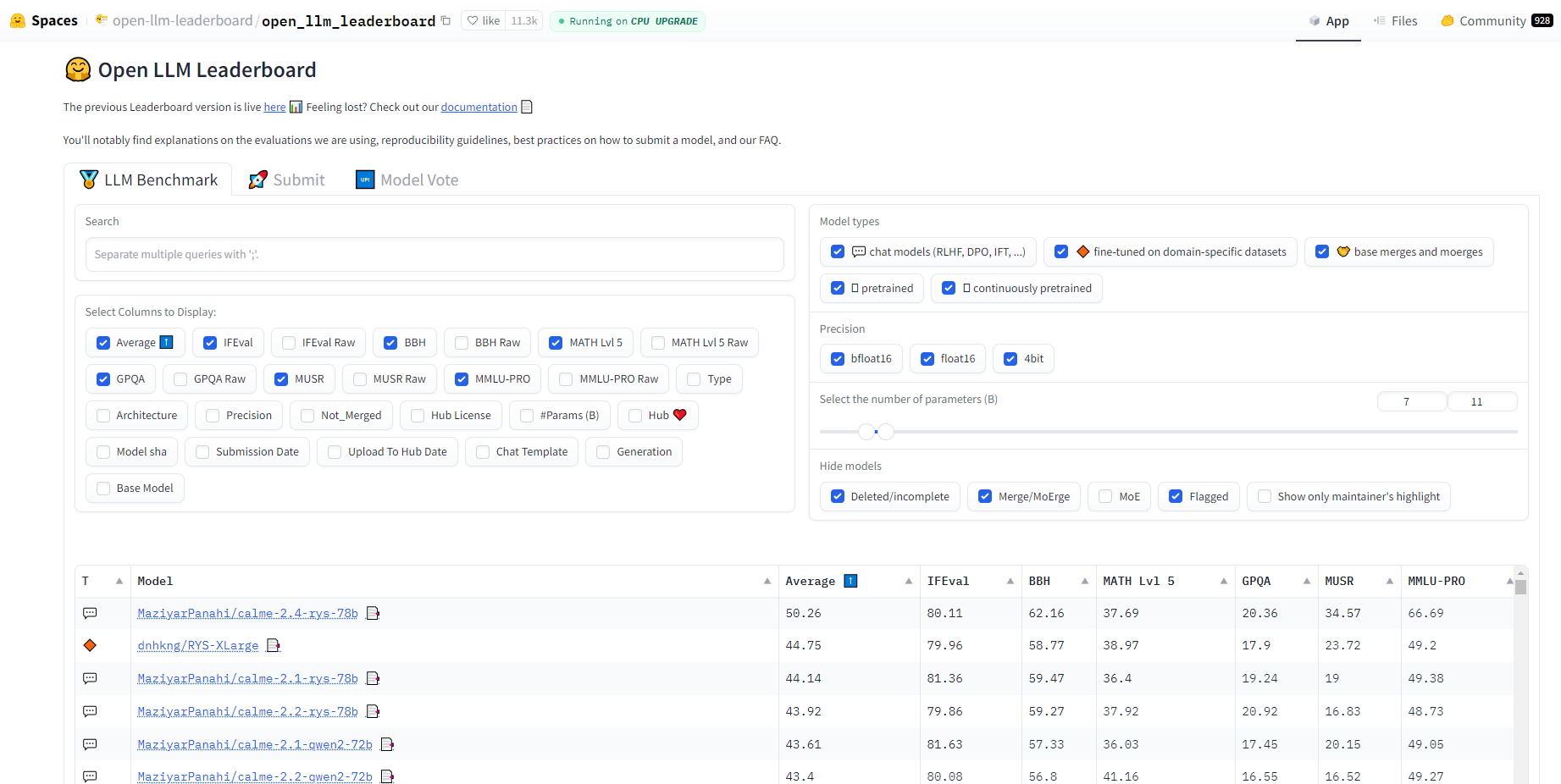

Huggingface's Open LLM Leaderboard introduces a suite of improvements over its predecessor, which served as a hub for over 2 million visitors. It now offers more challenging benchmarks, a refined evaluation process, and a better user experience. Its primary aim is to measure what LLMs can actually do by addressing two limitations of existing benchmarks: tasks that have become too easy for current models, and data contamination. This helps ensure that reported scores reflect genuine advances rather than metrics that models were tuned to maximize.

Key Features

New Benchmarks: Six rigorous benchmarks are introduced to test a range of skills from knowledge and reasoning to complex math and instruction following.

Standardized Scoring: A new scoring system that standardizes results to account for varying difficulty levels across different benchmarks.

Updated Evaluation Harness: Collaboration with EleutherAI for an updated harness to ensure evaluations remain consistent and reproducible.

Maintainer Recommendations: A curated list of top-performing models from various sources, providing a reliable starting point for users.

Community Voting: A voting system allowing the community to prioritize models for evaluation, ensuring that the most anticipated models are assessed promptly.
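The Standardized Scoring feature above can be illustrated with a small sketch. It assumes that each raw benchmark score is rescaled so that a random-guess baseline maps to 0 and the maximum possible score maps to 100, and that the normalized scores are then averaged. The function names, the example benchmark names, and the baseline values are hypothetical and chosen for illustration only; the leaderboard's exact formula may differ.

```python
from typing import Dict, Tuple


def normalize_score(raw: float, baseline: float, max_score: float = 100.0) -> float:
    """Rescale a raw score so that `baseline` maps to 0 and `max_score` maps to 100.

    Scores at or below the baseline are clamped to 0, on the assumption that
    doing worse than random guessing should not earn negative points.
    """
    if max_score <= baseline:
        raise ValueError("max_score must be greater than baseline")
    if raw <= baseline:
        return 0.0
    return (raw - baseline) / (max_score - baseline) * 100.0


def average_normalized(results: Dict[str, Tuple[float, float]]) -> float:
    """Average the normalized scores of several benchmarks.

    `results` maps a benchmark name to a (raw_score, random_baseline) pair.
    """
    if not results:
        raise ValueError("results must not be empty")
    normalized = [normalize_score(raw, base) for raw, base in results.values()]
    return sum(normalized) / len(normalized)


# Hypothetical example: a four-choice task (random baseline 25%) and a
# free-form task (random baseline 0%). Names and numbers are illustrative.
example_results = {
    "four_choice_qa": (62.5, 25.0),
    "free_form_math": (20.0, 0.0),
}
overall = average_normalized(example_results)
print(f"Normalized average: {overall:.1f}")
```

Under this assumption, a raw 62.5% on a four-choice task counts for less than it appears to, because a quarter of the scale is reachable by guessing. This is why normalization makes benchmarks of different difficulty and format more comparable.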

Use Cases

Research and Development: Researchers can identify the most promising models for further development or customization based on detailed performance metrics.

Business Implementation: Companies seeking to integrate LLMs into their products can select models that excel in relevant tasks and domains.

Educational Purposes: Educators and students can use the leaderboard to understand the current state of LLM capabilities and the field's progression.

Conclusion

Huggingface's Open LLM Leaderboard is not just an update; it's a significant advancement in the evaluation of LLMs. By offering a more accurate, challenging, and community-driven assessment, it paves the way for the next generation of language models. Explore the leaderboard, contribute your models, and be part of shaping the future of AI.

Huggingface's Open LLM Leaderboard Alternatives

Real-time Klu.ai data powers this leaderboard for evaluating LLM providers, enabling selection of the optimal API and model for your needs.

Explore The Berkeley Function Calling Leaderboard (also called The Berkeley Tool Calling Leaderboard) to see the LLM's ability to call functions (aka tools) accurately.

Discover, compare, and rank Large Language Models effortlessly with LLM Extractum. Simplify your selection process and empower innovation in AI applications.
