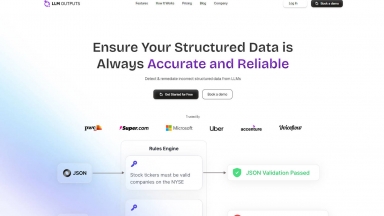

LLM Outputs

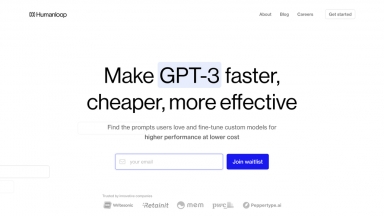

Humanloop

LLM Outputs

| Attribute | Value |
|---|---|
| Launched | 2024-08 |
| Pricing Model | Free |
| Starting Price | |
| Tech used | Google Analytics, Google Tag Manager, Webflow, Amazon AWS CloudFront, Google Fonts, jQuery, Gzip, OpenGraph, HSTS |
| Tag | Data Analysis, Data Extraction, Data Enrichment |

Humanloop

| Attribute | Value |
|---|---|
| Launched | 2006-05 |
| Pricing Model | Free Trial |
| Starting Price | |
| Tech used | Next.js, Vercel, Progressive Web App, RSS, Webpack, HSTS |
| Tag | MLOps |

LLM Outputs Rank/Visit

| Metric | Value |
|---|---|
| Global Rank | |
| Country | |
| Monthly Visits | |

Humanloop Rank/Visit

| Metric | Value |
|---|---|
| Global Rank | 476326 |
| Country | United States |
| Monthly Visits | 78423 |

Estimated traffic data from Similarweb

What are some alternatives?

Deepchecks - The end-to-end platform for LLM evaluation. Systematically test, compare, and monitor your AI apps from development to production, reduce hallucinations, and ship faster.

Confident AI - Companies of all sizes use Confident AI to justify why their LLM deserves to be in production.

Traceloop - Traceloop is an observability tool for LLM apps. Real-time monitoring, backtesting, instant alerts. Supports multiple providers. Ensure reliable LLM deployments.

LazyLLM - LazyLLM: Low-code for multi-agent LLM apps. Build, iterate & deploy complex AI solutions fast, from prototype to production. Focus on algorithms, not engineering.