What is LLM Outputs?

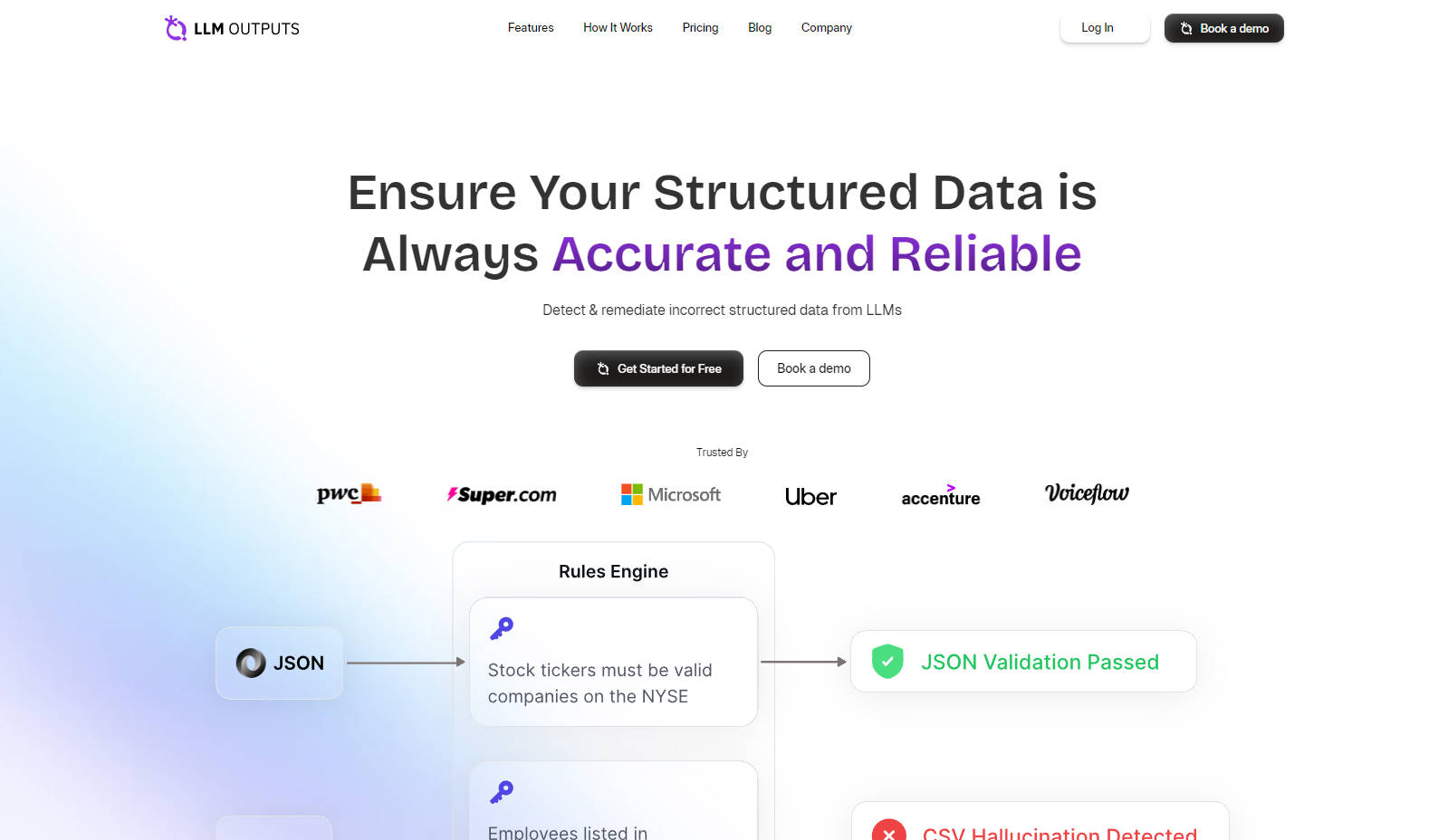

LLM Outputs safeguards the integrity of AI-generated structured data by offering real-time monitoring and seamless integration to detect and eliminate hallucinations. This helps ensure that the data you rely on is accurate, reliable, and contextually relevant. By preventing errors and fabrications, LLM Outputs improves operational efficiency and data trustworthiness for any organization that uses large language models.

Key Features:

Format Conformity: Ensures that structured data consistently adheres to specified formats such as JSON, CSV, or XML, eliminating format errors.

Hallucination Detection: Uses AI models to identify and correct hallucinated values, preserving the integrity of your structured data.

Contextual Accuracy: Keeps data relevant and precise for its intended use by checking it against the appropriate context.

Seamless Integration: Provides developer-friendly code snippets that can be dropped into existing systems for quick integration.

Real-Time Monitoring: Offers low-latency APIs for immediate detection and correction of discrepancies in structured data.
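As a rough illustration of what a format conformity check involves, the sketch below parses a model's output as JSON and compares it against an expected set of fields and types. It uses only the Python standard library. The `check_format` function and the `EXPECTED_FIELDS` schema are hypothetical examples chosen for illustration; they are not part of the LLM Outputs API, which this page does not document.

```python
import json
from typing import Any

# Hypothetical expected shape: field name -> required Python type.
# This illustrative schema is an assumption, not an LLM Outputs format.
EXPECTED_FIELDS: dict[str, type] = {"name": str, "amount": float, "currency": str}


def check_format(raw: str, expected: dict[str, type]) -> list[str]:
    """Return a list of format errors; an empty list means the output conforms."""
    try:
        data: Any = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be a JSON object"]

    errors: list[str] = []
    # Check required fields in schema order so error messages are deterministic.
    for field, field_type in expected.items():
        if field not in data:
            errors.append(f"missing field: {field}")
        elif not isinstance(data[field], field_type):
            errors.append(f"wrong type for {field}: expected {field_type.__name__}")
    # Flag fields the model added that the schema does not expect.
    for field in sorted(data.keys() - expected.keys()):
        errors.append(f"unexpected field: {field}")
    return errors


if __name__ == "__main__":
    # A model returned the amount as a string and omitted the currency.
    print(check_format('{"name": "Acme", "amount": "12.5"}', EXPECTED_FIELDS))
    # prints ['wrong type for amount: expected float', 'missing field: currency']
```

A production validator would more likely use a declarative schema language such as JSON Schema rather than a hand-written type map; the hand-rolled version is shown only to make the idea concrete.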

Use Cases:

AI Data Integrity: Ensures the data used to train AI models is accurate, leading to improved performance and reliability.

Financial Data Validation: Protects against erroneous financial data that could lead to costly mistakes or compliance issues.

Enterprise Data Pipelines: Streamlines data workflows in large organizations by validating structured data in real time.

Conclusion:

LLM Outputs helps organizations maintain structured data accuracy with a solution that integrates easily into existing workflows. With real-time validation, you can place greater trust in your data. Ready to improve your data accuracy? Try LLM Outputs for free today and transform how you manage your structured data.

FAQs:

Q: What is hallucination in structured data, and why is it important to detect it?

A: Hallucination in structured data refers to inaccuracies or fabrications introduced by AI models. Detecting them is crucial because they can compromise data reliability and lead to erroneous decisions. LLM Outputs specializes in identifying such issues to maintain data integrity.
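To make the idea of a fabricated value concrete, here is a deliberately simple grounding heuristic: flag any extracted field whose value cannot be found in the source text the model was given. This is a swapped-in illustrative technique, not how LLM Outputs' detection models work; the `ungrounded_fields` function and the sample invoice are hypothetical.

```python
def ungrounded_fields(record: dict[str, object], source: str) -> list[str]:
    """Return names of fields whose values do not appear in the source text.

    Crude heuristic for illustration only: exact, case-insensitive substring
    matching misses paraphrases and reformatted numbers (e.g. 1250.0 vs 1,250.00).
    """
    haystack = source.casefold()
    flagged: list[str] = []
    for field, value in record.items():
        # Compare the value's text form against the source, ignoring case.
        if str(value).casefold() not in haystack:
            flagged.append(field)
    return flagged


if __name__ == "__main__":
    source = "Invoice from Acme Corp dated 2024-03-01 for 1,250.00 USD."
    # The model extracted a currency that the source never mentions.
    extracted = {"vendor": "Acme Corp", "date": "2024-03-01",
                 "total": "1,250.00", "currency": "EUR"}
    print(ungrounded_fields(extracted, source))  # prints ['currency']
```

Real hallucination detection has to handle values that are correct but worded differently from the source, which is why simple string matching like this is usually only a first-pass filter.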

Q: How does LLM Outputs integrate with existing systems?

A: LLM Outputs integrates with your current systems through code snippets that you can insert into your workflow. It works alongside your existing tools to validate structured data, ensuring accuracy without disrupting your operations.

Q: What makes LLM Outputs' hallucination detection models superior?

A: LLM Outputs uses AI models fine-tuned specifically to detect and eliminate hallucinations in structured data. These models are designed to deliver consistent accuracy and reliability, setting a high standard for validating AI-generated content.