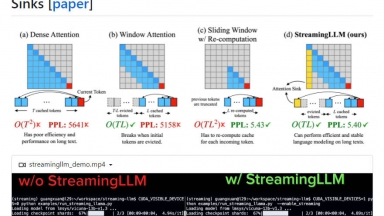

StreamingLLM

Flowstack

StreamingLLM

| Field | Value |
| --- | --- |
| Launched | 2024 |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | Workflow Automation, Developer Tools, Communication |

Flowstack

| Field | Value |
| --- | --- |
| Launched | 2023-05 |
| Pricing Model | Free |
| Starting Price | |
| Tech used | Google Tag Manager, Webflow, Amazon AWS CloudFront, Cloudflare CDN, Google Fonts, jQuery, Gzip, OpenGraph |
| Tag | Data Analysis, Business Intelligence, Developer Tools |

StreamingLLM Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | |
| Country | |
| Monthly Visits | |

Flowstack Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | 10,914,910 |
| Country | United States |
| Monthly Visits | 1,744 |

Estimated traffic data from Similarweb

What are some alternatives?

vLLM - A high-throughput and memory-efficient inference and serving engine for LLMs

EasyLLM - An open-source project that provides helpful tools and methods for working with large language models (LLMs), both open source and closed source. Get started immediately or check out the documentation.

LLMLingua - Speeds up LLM inference and improves the model's perception of key information by compressing the prompt and KV cache, achieving up to 20x compression with minimal performance loss.

LazyLLM - A low-code tool for multi-agent LLM apps. Build, iterate on, and deploy complex AI solutions fast, from prototype to production. Focus on algorithms, not engineering.

LMCache - LMCache is an open-source Knowledge Delivery Network (KDN) that accelerates LLM applications by optimizing data storage and retrieval.