What is StreamingLLM?

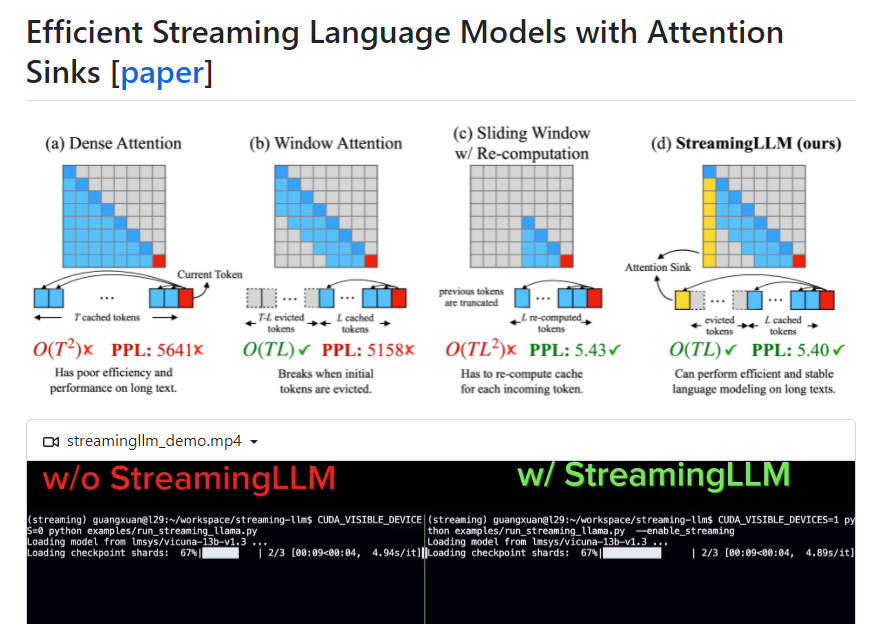

StreamingLLM, is an efficient framework that allows Large Language Models (LLMs) to be deployed in streaming applications without sacrificing efficiency and performance. It addresses the challenges of caching previous tokens' Key and Value states (KV) during decoding and the inability of popular LLMs to generalize to longer texts than their training sequence length. By introducing attention sinks and retaining the KV of initial tokens, StreamingLLM enables LLMs trained with a finite length attention window to handle infinite sequence lengths without fine-tuning. It outperforms sliding window recomputation baselines by up to 22.2x speedup.

Key Features:

1. Efficient deployment: StreamingLLM allows LLMs to be used in streaming applications without compromising efficiency or performance.

2. Attention sinks: By keeping the KV of initial tokens as attention sinks, StreamingLLM recovers the performance of window attention even when text length surpasses cache size.

3. Generalization to infinite sequence length: With StreamingLLM, LLMs can handle inputs of any length without needing a cache reset or sacrificing coherence.

4. Improved streaming deployment: Adding a placeholder token as a dedicated attention sink during pre-training further enhances streaming deployment.

5. Speed optimization: In streaming settings, StreamingLLM achieves up to 22.2x speedup compared to sliding window recomputation baselines.

Use Cases:

1. Multi-round dialogues: StreamingLLM is optimized for scenarios where models need continuous operation without extensive memory usage or reliance on past data, making it ideal for multi-round dialogues.

2. Daily assistants based on LLMs: With StreamingLLM, daily assistants can function continuously and generate responses based on recent conversations without requiring cache refreshes or time-consuming recomputation.

StreamingLLM is an efficient framework that enables the deployment of LLMs in streaming applications while maintaining high performance and efficiency. By introducing attention sinks and retaining the KV of initial tokens, StreamingLLM allows LLMs to handle infinite sequence lengths without fine-tuning. It is particularly useful for multi-round dialogues and daily assistants based on LLMs, offering improved streaming deployment and significant speed optimizations compared to traditional methods.