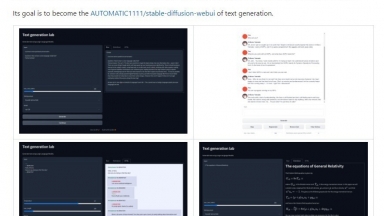

Text Generation WebUI

| Attribute | Value |
| --- | --- |
| Launched | 2023 |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | Text Generators |

Open WebUI

| Attribute | Value |
| --- | --- |
| Launched | 2024-2 |
| Pricing Model | Free |
| Starting Price | |
| Tech used | Google Analytics, Google Tag Manager, Svelte(Kit), Gravatar, Gzip, Nginx, Ubuntu |
| Tag | Web Design, Chrome Extension |

Text Generation WebUI Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | 0 |
| Country | |
| Monthly Visits | 0 |

Open WebUI Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | 63,556 |
| Country | United States |
| Monthly Visits | 792,629 |

Estimated traffic data from Similarweb

What are some alternatives?

Text Generator Plugin - Discover how TextGen revolutionizes language generation tasks with extensive model compatibility. Create content, develop chatbots, and augment datasets effortlessly.

LoLLMS Web UI - LoLLMS WebUI: Access and utilize LLM models for writing, coding, data organization, image and music generation, and much more. Try it now!

ChattyUI - Open-source, feature-rich Gemini/ChatGPT-like interface for running open-source models (Gemma, Mistral, Llama 3, etc.) locally in the browser using WebGPU. No server-side processing: your data never leaves your PC.

LLMLingua - Compresses the prompt and KV cache to speed up LLM inference and improve the model's perception of key information, achieving up to 20x compression with minimal performance loss.
