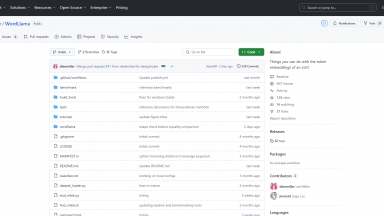

WordLlama

WordLlama is a utility for natural language processing (NLP) that recycles components from large language models (LLMs) to create efficient and compact word representations, similar to GloVe, Word2Vec, or FastText.
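WordLlama's own API is not described on this page. As a rough illustration of how static word representations like GloVe or Word2Vec are typically used, the sketch below looks up a vector for each word, averages them, and compares texts by cosine similarity. The embedding table, its values, and the function names `embed` and `cosine` are hypothetical and invented for illustration. This is not WordLlama's implementation.

```python
import math

# Hypothetical toy embedding table. In a real static-embedding model these
# vectors would come from a trained lookup table; these 3-d values are
# invented purely for illustration.
EMBEDDINGS = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.85, 0.15, 0.05],
    "dog": [0.7, 0.3, 0.0],
    "stock": [0.0, 0.2, 0.95],
    "market": [0.05, 0.1, 0.9],
}


def embed(text: str) -> list[float]:
    """Average-pool the vectors of known words; unknown words are skipped."""
    vectors = [EMBEDDINGS[w] for w in text.lower().split() if w in EMBEDDINGS]
    if not vectors:
        raise ValueError("no known words in input")
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


if __name__ == "__main__":
    # Related words score higher than unrelated ones with these toy vectors.
    print(cosine(embed("cat"), embed("kitten")))
    print(cosine(embed("cat"), embed("stock market")))
```

Because each word has a single fixed vector, a lookup and an average are all that is needed at inference time. This is what makes static embeddings cheap compared with running a full language model.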

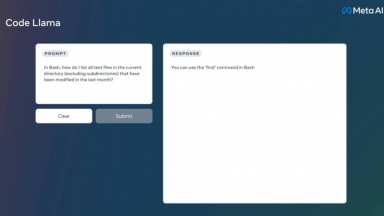

Code Llama

Code Llama is an AI tool for code generation and code understanding, aimed at boosting developer productivity and streamlining workflows.

| | WordLlama | Code Llama |
|---|---|---|
| Launched | | 1991-01 |
| Pricing model | Free | Free |
| Starting price | | |
| Tech used | | Gzip, HTTP/3, OpenGraph, HSTS |
| Tags | Data Analysis, Developer Tools | Code Generation, Software Development, Developer Tools |

WordLlama Rank/Visit

| Metric | Value |
|---|---|
| Global rank | |
| Country | |
| Monthly visits | |


Code Llama Rank/Visit

| Metric | Value |
|---|---|
| Global rank | 0 |
| Country | United States |
| Monthly visits | 1,064,466 |

Top 5 Countries

| Country | Share of visits |
|---|---|
| United States | 26.78% |
| India | 9.7% |
| Canada | 4.67% |
| China | 4.33% |
| Germany | 3.93% |

Traffic Sources

| Source | Share of visits |
|---|---|
| Social | 3.95% |
| Paid referrals | 0.72% |
| Mail | 0.07% |
| Referrals | 9.8% |
| Search | 48.6% |
| Direct | 36.86% |

Estimated traffic data from Similarweb

What are some alternatives?

When comparing WordLlama and Code Llama, you can also consider the following products:

TinyLlama - The TinyLlama project is an open endeavor to pretrain a 1.1B Llama model on 3 trillion tokens.

Llama 4 - Meta's Llama 4: openly available models with a mixture-of-experts (MoE) architecture that process text, images, and video, with a very large context window.

LlamaEdge - The LlamaEdge project makes it easy to run LLM inference apps locally and to create OpenAI-compatible API services for the Llama 2 series of LLMs.

llamafile - llamafile is a project from a team at Mozilla. It lets users distribute and run LLMs as a single, platform-independent file.