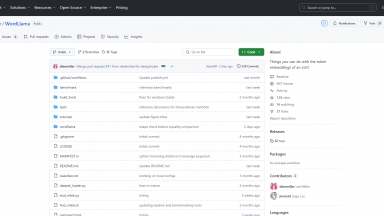

WordLlama

WordLlama is a utility for natural language processing (NLP) that recycles components from large language models (LLMs) to create efficient and compact word representations, similar to GloVe, Word2Vec, or FastText.

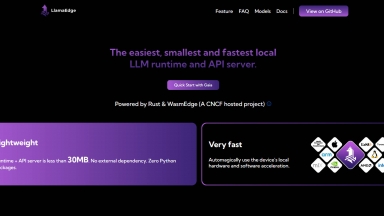

LlamaEdge

The LlamaEdge project makes it easy for you to run LLM inference apps and create OpenAI-compatible API services for the Llama2 series of LLMs locally.

WordLlama

| Attribute | Value |
| --- | --- |
| Launched | |
| Pricing Model | Free |
| Starting Price | |
| Tech Used | |
| Tags | Data Analysis, Developer Tools |

LlamaEdge

| Attribute | Value |
| --- | --- |
| Launched | 2024-01 |
| Pricing Model | Free |
| Starting Price | |
| Tech Used | Google Analytics, Google Tag Manager, Fastly, Google Fonts, GitHub Pages, Gzip, Varnish |
| Tags | Software Development, Chatbot Builder, Code Generation |

WordLlama Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | |
| Country | |
| Monthly Visits | |

Top 5 Countries

Traffic Sources

LlamaEdge Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | 3,992,632 |
| Country | Germany |
| Monthly Visits | 2,956 |

Top 5 Countries

| Country | Share of Visits |
| --- | --- |
| Germany | 43.76% |
| United States | 37.88% |
| India | 15.81% |
| France | 2.54% |

Traffic Sources

| Source | Share of Traffic |
| --- | --- |
| Social | 4.01% |
| Paid referrals | 1.54% |
| Mail | 0.13% |
| Referrals | 10.31% |
| Search | 25.52% |
| Direct | 57.47% |

Estimated traffic data from Similarweb

What are some alternatives?

When comparing WordLlama and LlamaEdge, you can also consider the following products:

TinyLlama - The TinyLlama project is an open endeavor to pretrain a 1.1B Llama model on 3 trillion tokens.

Llama 4 - Meta's Llama 4: Open AI with MoE. Process text, images, video. Huge context window. Build smarter, faster!

Code Llama - Discover Code Llama, a cutting-edge AI tool for code generation and understanding. Boost productivity, streamline workflows, and empower developers.

llamafile - llamafile is a Mozilla project that lets users distribute and run LLMs as a single, platform-independent file.