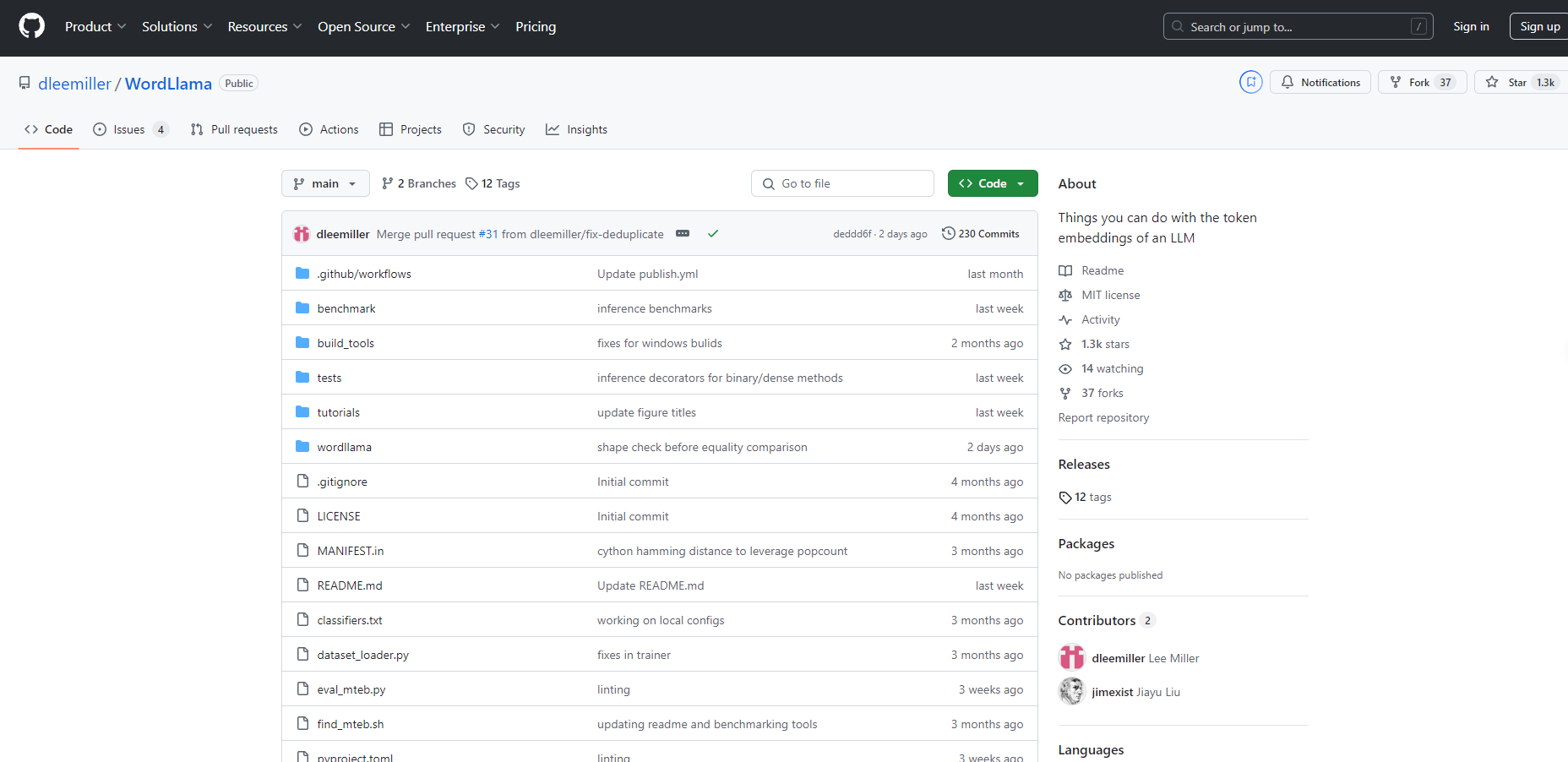

What is WordLlama?

WordLlama is a natural language processing (NLP) toolkit optimized for CPU hardware. It reuses components from state-of-the-art large language models to build compact, efficient word representations suited to tasks such as fuzzy deduplication, similarity computation, and semantic text splitting. Its lightweight design and low resource requirements improve on traditional word embeddings while keeping a footprint small enough for resource-constrained environments.

Key Features:

🧠 Matryoshka Representations: Flexible truncation of embedding dimensions for adaptable model size and performance.

🚀 Low Resource Requirements: Fast operation on CPUs without needing GPUs, utilizing simple token lookup and average pooling.

🌐 Binary Embeddings: Models trained with a straight-through estimator can be packed into compact integer arrays, enabling fast Hamming distance calculations.

📊 NumPy-only Inference: Lightweight inference that relies solely on NumPy for easy deployment and integration.

⚡ Versatile Tool: Designed for exploratory analysis and utility applications, enhancing LLM output evaluation and preparatory NLP tasks.
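The mechanics behind the first four features can be sketched in a few lines of NumPy. This is a minimal illustration, not WordLlama's actual implementation: the vocabulary, the random embedding table, and the helper names `embed`, `binarize`, and `hamming` are all hypothetical. In a trained Matryoshka model the leading dimensions carry the most information, so truncation costs little accuracy; with the random table used here, truncation is plain slicing.

```python
import numpy as np

# Hypothetical toy vocabulary and embedding table (random values, illustration only).
rng = np.random.default_rng(0)
vocab = {"the": 0, "cat": 1, "sat": 2, "dog": 3, "ran": 4}
table = rng.standard_normal((len(vocab), 8)).astype(np.float32)  # 5 tokens x 8 dims


def embed(text, dim=8):
    """Token lookup + average pooling, truncated to the first `dim` dimensions."""
    ids = [vocab[tok] for tok in text.lower().split() if tok in vocab]
    if not ids:
        return np.zeros(dim, dtype=np.float32)
    pooled = table[ids].mean(axis=0)  # average pooling over the token vectors
    return pooled[:dim]               # Matryoshka-style truncation


def binarize(vec):
    """Keep only the sign of each dimension and pack the bits into uint8 bytes."""
    return np.packbits(vec > 0)


def hamming(a, b):
    """Number of differing bits between two packed binary embeddings."""
    return int(np.unpackbits(np.bitwise_xor(a, b)).sum())


full = embed("the cat sat")            # 8-dimensional embedding
small = embed("the cat sat", dim=4)    # same text, truncated to 4 dimensions
bits_a = binarize(full)
bits_b = binarize(embed("the dog ran"))
print(full.shape, small.shape, hamming(bits_a, bits_b))
```

Because every step is a table lookup, a mean, a slice, or a bitwise operation, nothing here needs a GPU or any dependency beyond NumPy.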

Use Cases:

Duplicate Detection: WordLlama effectively identifies and removes duplicate texts in large document sets, improving data quality for further analysis.

Content Clustering: Ideal for organizing large volumes of text data into meaningful groups, aiding in content categorization and management.

Information Retrieval: Enhances search capabilities by ranking documents based on similarity to a query, improving the efficiency of information access.
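Duplicate detection and retrieval both reduce to comparing embeddings by similarity. The sketch below shows one common way to do this with cosine similarity in NumPy; it assumes the document embeddings have already been computed, and the function names, the greedy deduplication strategy, and the threshold value are illustrative choices rather than WordLlama's own API.

```python
import numpy as np


def unit_rows(emb):
    """Scale each row of `emb` to unit length (guarding against zero rows)."""
    norms = np.linalg.norm(emb, axis=1, keepdims=True)
    return emb / np.clip(norms, 1e-12, None)


def deduplicate(emb, threshold=0.9):
    """Greedy pass: keep a row only if it is not too similar to any kept row."""
    unit = unit_rows(emb)
    sim = unit @ unit.T  # pairwise cosine similarity
    kept = []
    for i in range(len(emb)):
        if all(sim[i, j] < threshold for j in kept):
            kept.append(i)
    return kept


def rank(query, emb):
    """Indices of `emb` rows sorted from most to least similar to `query`."""
    q = query / max(np.linalg.norm(query), 1e-12)
    scores = unit_rows(emb) @ q
    return np.argsort(-scores).tolist()


# Hypothetical document embeddings; rows 0 and 1 are near-duplicates.
docs = np.array([
    [1.0, 0.0, 0.0],
    [0.99, 0.05, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
])
kept = deduplicate(docs, threshold=0.9)          # indices of documents to keep
order = rank(np.array([0.0, 0.9, 0.1]), docs)    # best match first
print(kept, order)
```

With these toy vectors, row 1 is dropped as a near-duplicate of row 0, and the query ranks row 2 first. Clustering follows the same pattern: the similarity matrix or the normalized embeddings are handed to a clustering routine instead of a threshold test.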

Conclusion:

WordLlama is a CPU-friendly NLP toolkit that pairs fast inference with a small footprint. By reusing large language model components in a compact form, it suits NLP tasks in environments with limited computational resources. Users who want to derive insights from text data without the overhead of heavy infrastructure will find it a practical option.

FAQs:

What are the system requirements for running WordLlama?

WordLlama is optimized for CPU usage and can run on most modern processors. It does not require a GPU for inference.

How does WordLlama compare to traditional word embeddings like GloVe?

WordLlama models outperform GloVe 300d on all MTEB benchmarks while being significantly smaller in size, making them more efficient for deployment.

Can WordLlama be used for real-time text processing?

Yes, with its fast single-core performance and minimal dependencies, WordLlama is suitable for real-time applications requiring quick text analysis and processing.

WordLlama Alternatives

The TinyLlama project is an open endeavor to pretrain a 1.1B Llama model on 3 trillion tokens.

Meta's Llama 4: open AI models built on a mixture-of-experts (MoE) architecture. They process text, images, and video and offer a very large context window.

The LlamaEdge project makes it easy for you to run LLM inference apps and create OpenAI-compatible API services for the Llama2 series of LLMs locally.

Code Llama is an AI tool for code generation and code understanding, aimed at boosting developer productivity and streamlining workflows.

Llamafile is a project by a team over at Mozilla. It allows users to distribute and run LLMs using a single, platform-independent file.