What is Ell?

Ell is a Python library that simplifies prompt engineering for large language models (LLMs). By treating prompts as functions, ell lets developers manage, version, and optimize prompts with the same practices they apply to ordinary code, which makes LLM applications easier to maintain and improve. Its features include automatic versioning, optional local storage of language model program (LMP) calls, multimodal inputs and outputs, and tools for visualizing and analyzing prompts. Whether you're building a simple chatbot or a complex AI application, ell gives you a structured way to get more out of LLMs.

Key Features:

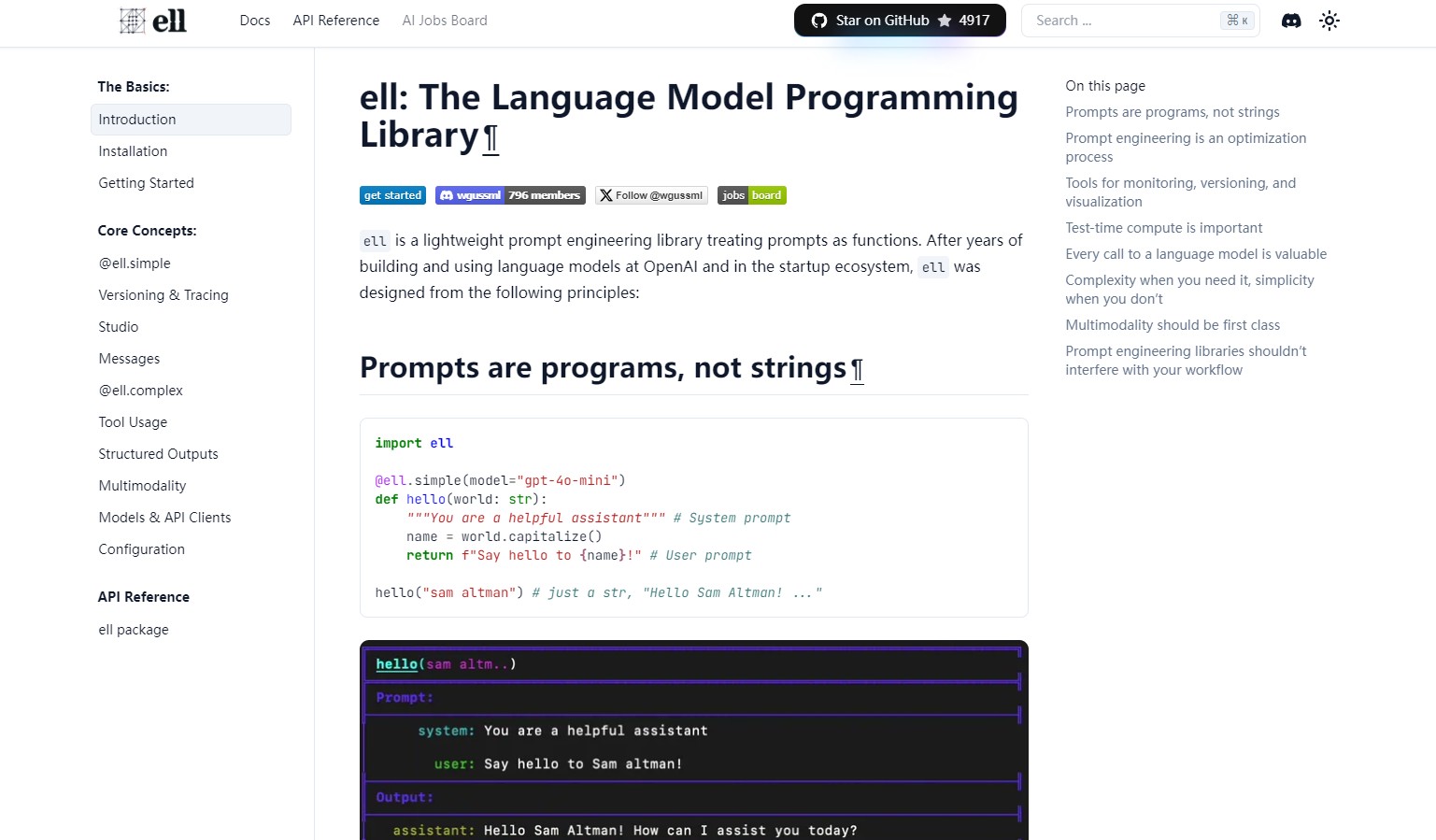

Prompts as Functions:📝 Ell encapsulates prompts as Python functions, making them easier to manage and reuse. This approach improves code organization and allows for a more modular design of LLM applications.
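To make the "prompts as functions" idea concrete, here is a minimal standalone sketch. It does not use ell's actual API: the decorator name `prompt_function` is hypothetical, and instead of calling a model it only builds the message list, treating the function's docstring as the system prompt and its return value as the user prompt.

```python
import functools
import inspect
from typing import Callable


def prompt_function(fn: Callable[..., str]) -> Callable[..., list]:
    """Hypothetical decorator: docstring -> system message, return value -> user message."""
    system_prompt = inspect.cleandoc(fn.__doc__ or "")

    @functools.wraps(fn)
    def wrapper(*args, **kwargs) -> list:
        user_prompt = fn(*args, **kwargs)
        # A real library would send these messages to an LLM; this sketch just returns them.
        return [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ]

    return wrapper


@prompt_function
def greet(name: str) -> str:
    """You are a friendly assistant."""
    return f"Say hello to {name}."


messages = greet("Ada")
```

Because the prompt is an ordinary function, it can take parameters, be imported, tested, and reused like any other Python code.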

Automatic Versioning:🔄 Ell automatically versions and serializes prompts, allowing you to track changes, experiment with different versions, and easily revert to previous iterations. This feature streamlines the prompt optimization process and ensures that you can always access past versions of your prompts.
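One plausible way to version prompts automatically is to derive a version ID from a hash of the prompt's content, so that any edit yields a new version and an unchanged prompt does not. The sketch below assumes exactly that and nothing more: `PromptRegistry`, `commit`, and `get` are hypothetical names, and it hashes prompt text rather than reproducing whatever mechanism ell uses internally.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Hypothetical in-memory store of prompt versions, keyed by content hash."""

    history: dict = field(default_factory=dict)

    def commit(self, name: str, template: str) -> str:
        # The version ID is a short SHA-256 digest of the prompt text.
        version = hashlib.sha256(template.encode("utf-8")).hexdigest()[:8]
        versions = self.history.setdefault(name, [])
        # Only record a new version if the content actually changed.
        if not versions or versions[-1][0] != version:
            versions.append((version, template))
        return version

    def get(self, name: str, version: str) -> str:
        for v, template in self.history[name]:
            if v == version:
                return template
        raise KeyError(f"{name}@{version} not found")


registry = PromptRegistry()
v1 = registry.commit("greet", "Say hello to {name}.")
v2 = registry.commit("greet", "Warmly greet {name} in one sentence.")
registry.commit("greet", "Warmly greet {name} in one sentence.")  # unchanged: no new version
old = registry.get("greet", v1)  # revert to the earlier iteration
```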

Local Storage of LMP Calls:💾 Ell can optionally save every language model program (LMP) call locally, creating a valuable dataset for analysis, fine-tuning, and other downstream tasks. Reviewing these logged calls shows how your prompts and models actually behave in practice.
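As an illustration of why logged calls are useful, the sketch below records each call in a local SQLite table and then exports the input/output pairs as a dataset. The schema, `log_call`, and `fake_model` are all hypothetical stand-ins chosen for this example; they are not ell's storage format or API.

```python
import json
import sqlite3
import time


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a hypothetical call log; ':memory:' keeps the example self-contained."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS calls (
               id INTEGER PRIMARY KEY AUTOINCREMENT,
               prompt TEXT NOT NULL,
               version TEXT NOT NULL,
               inputs TEXT NOT NULL,
               output TEXT NOT NULL,
               created_at REAL NOT NULL
           )"""
    )
    return conn


def log_call(conn: sqlite3.Connection, prompt: str, version: str, inputs: dict, output: str) -> None:
    conn.execute(
        "INSERT INTO calls (prompt, version, inputs, output, created_at) VALUES (?, ?, ?, ?, ?)",
        (prompt, version, json.dumps(inputs), output, time.time()),
    )
    conn.commit()


def fake_model(user_prompt: str) -> str:
    # Stand-in for a real LLM call.
    return user_prompt.upper()


conn = open_store()
for name in ["Ada", "Grace"]:
    log_call(conn, "greet", "v1", {"name": name}, fake_model(f"Say hello to {name}."))

# Export logged pairs, e.g. as raw material for analysis or fine-tuning.
rows = conn.execute(
    "SELECT inputs, output FROM calls WHERE prompt = ? ORDER BY id", ("greet",)
).fetchall()
dataset = [{"inputs": json.loads(i), "output": o} for i, o in rows]
```

Passing a file path instead of `":memory:"` to `open_store` would persist the log across runs.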

Multimodal Support:🖼️ Ell supports multimodal inputs and outputs, including text, images, audio, and video. This enables you to build more sophisticated LLM applications that can process and generate a wider range of content types.

Ell Studio:📊 Ell Studio, a local, open-source tool, provides prompt version control, monitoring, and visualization. It lets you analyze your prompt optimization process empirically and spot potential regressions.

Use Cases:

A chatbot developer can use ell to manage and optimize the prompts that drive the chatbot's conversations, leading to more engaging and natural interactions.

A researcher can leverage ell to track the evolution of prompts during an experiment, facilitating a deeper understanding of how prompt changes affect LLM behavior.

An AI application developer can use ell to build a system that automatically generates different versions of a prompt and selects the best-performing one based on user feedback.
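The selection step in that last use case could look something like the sketch below, which picks the prompt version with the highest mean user rating. The function `select_best`, the thumbs-up/down encoding, and the feedback data are hypothetical and purely illustrative; ell itself is not assumed to provide this.

```python
from collections import defaultdict
from typing import List, Optional, Tuple


def select_best(feedback: List[Tuple[str, int]], min_votes: int = 3) -> Optional[str]:
    """Return the version with the highest mean rating (1 = thumbs up, 0 = thumbs down)."""
    ratings = defaultdict(list)
    for version, rating in feedback:
        ratings[version].append(rating)
    # Ignore versions with too few votes to judge fairly.
    eligible = {v: sum(r) / len(r) for v, r in ratings.items() if len(r) >= min_votes}
    if not eligible:
        return None
    return max(eligible, key=eligible.get)


# Illustrative feedback: (prompt version, user rating).
feedback = [("v1", 1), ("v1", 0), ("v1", 0), ("v2", 1), ("v2", 1), ("v2", 0), ("v3", 1)]
best = select_best(feedback)
```

A mean rating with a vote threshold is the simplest possible rule; a production system might instead use a multi-armed bandit strategy such as Thompson sampling to balance trying new versions against exploiting the current best.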

Conclusion:

Ell offers a powerful and intuitive approach to prompt engineering, transforming it from a "dark art" into a more systematic and efficient process. Its focus on treating prompts as functions, combined with features like automatic versioning, local storage of LMP calls, and multimodal support, makes it an invaluable tool for anyone working with LLMs. By simplifying the development and optimization of prompts, ell empowers developers to build more robust, efficient, and effective LLM-powered applications.

Ell Alternatives

PromptTools is an open-source platform that helps developers build, monitor, and improve LLM applications through experimentation, evaluation, and feedback.

SysPrompt is a comprehensive platform designed to simplify the management, testing, and optimization of prompts for large language models (LLMs). It provides a collaborative environment where teams can work together in real time, track prompt versions, run evaluations, and test prompts across different LLMs, all in one place.

PromptLayer streamlines LLM prompt engineering by combining prompt management, evaluation, and observability in one platform.

PromptML lets you write AI prompts as structured, versionable code, bringing engineering discipline to your prompt workflow for scalable, consistent AI applications.

A platform for crafting, testing, and deploying tasks and APIs powered by large language models.