What is Farfalle?

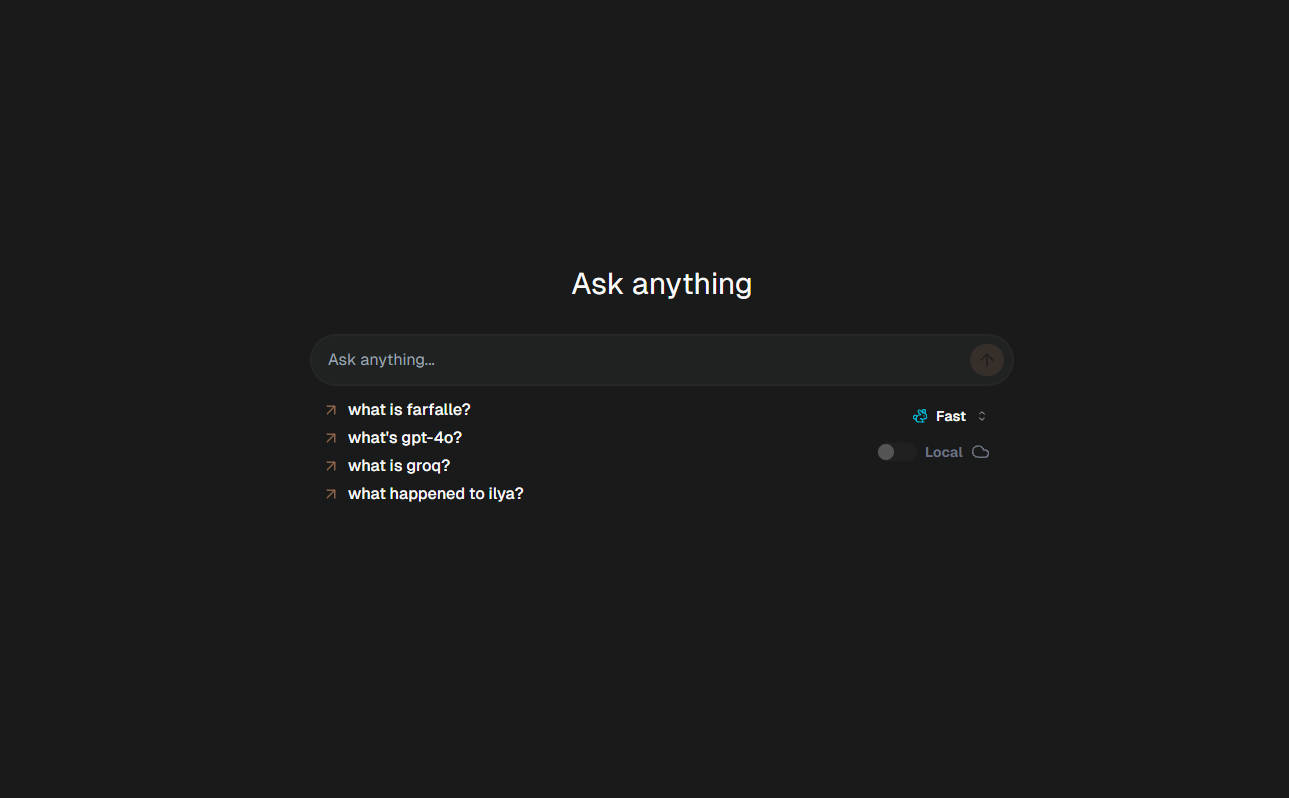

Farfalle is an open-source, AI-powered search engine. It can run on local LLMs such as llama3, gemma, and mistral, or on cloud models such as Groq/Llama3 and OpenAI/GPT-4o. Because you choose the model, you can tune the search experience to your own needs for speed, cost, and privacy. Whether you’re a developer, a researcher, or simply curious, Farfalle offers a flexible way to find and explore information.

Key Features

🌐 Model Flexibility: Seamlessly switch between local and cloud-based AI models to tailor your search experience.

🚀 Speed and Efficiency: Farfalle uses AI models to return fast, relevant search results, so you spend less time digging for information.

🧩 Customizable Tech Stack: Built on Next.js for the frontend, FastAPI for the backend, and Tavily as the search API, a stack chosen to be scalable and maintainable.

🔒 Privacy-Focused: Run models locally, reducing reliance on external APIs and bolstering privacy and control over data.

🌟 Open-Source Community: Join a vibrant community of developers and users contributing to the ongoing development and improvement of Farfalle.
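To make the Model Flexibility and Privacy-Focused points above more concrete, here is a minimal sketch of how switching between local and cloud models could be expressed. It is not Farfalle's actual code: the `ModelChoice` class, the `MODELS` registry, its keys, the provider labels, and the `select_model` helper are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelChoice:
    provider: str   # illustrative labels: "local", "groq", or "openai"
    model: str      # model name passed to that provider
    is_local: bool  # True when inference runs on your own machine


# Hypothetical registry: keys and identifiers are assumptions for this
# sketch, not Farfalle's real configuration.
MODELS = {
    "llama3": ModelChoice("local", "llama3", True),
    "gemma": ModelChoice("local", "gemma", True),
    "mistral": ModelChoice("local", "mistral", True),
    "groq-llama3": ModelChoice("groq", "llama3", False),
    "gpt-4o": ModelChoice("openai", "gpt-4o", False),
}


def select_model(key: str, allow_cloud: bool = True) -> ModelChoice:
    """Look up a model by key, optionally refusing cloud-hosted models."""
    try:
        choice = MODELS[key]
    except KeyError:
        raise ValueError(f"unknown model: {key!r}") from None
    if not choice.is_local and not allow_cloud:
        raise ValueError(f"{key!r} is a cloud model, but cloud models are disabled")
    return choice


if __name__ == "__main__":
    print(select_model("llama3"))           # a local model
    print(select_model("gpt-4o").provider)  # a cloud model's provider label
```

Calling `select_model(key, allow_cloud=False)` acts as a privacy-first mode in this sketch: a request for a cloud model fails loudly instead of silently sending queries to an external API.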

Use Cases

Research and Development: Accelerate your research with quick access to relevant information using AI-powered search.

Personal Knowledge Management: Enhance your learning and knowledge retention by efficiently organizing and retrieving information.

Enterprise Solutions: Implement Farfalle to streamline internal knowledge bases and improve decision-making processes.

Conclusion

Farfalle is more than just a search engine: it pairs AI-powered information retrieval with the freedom to choose where your models run. With its model flexibility, speed, and open tech stack, Farfalle is a practical option for anyone who wants to bring AI into their daily search activities. Join the community, contribute, and help shape where Farfalle goes next.