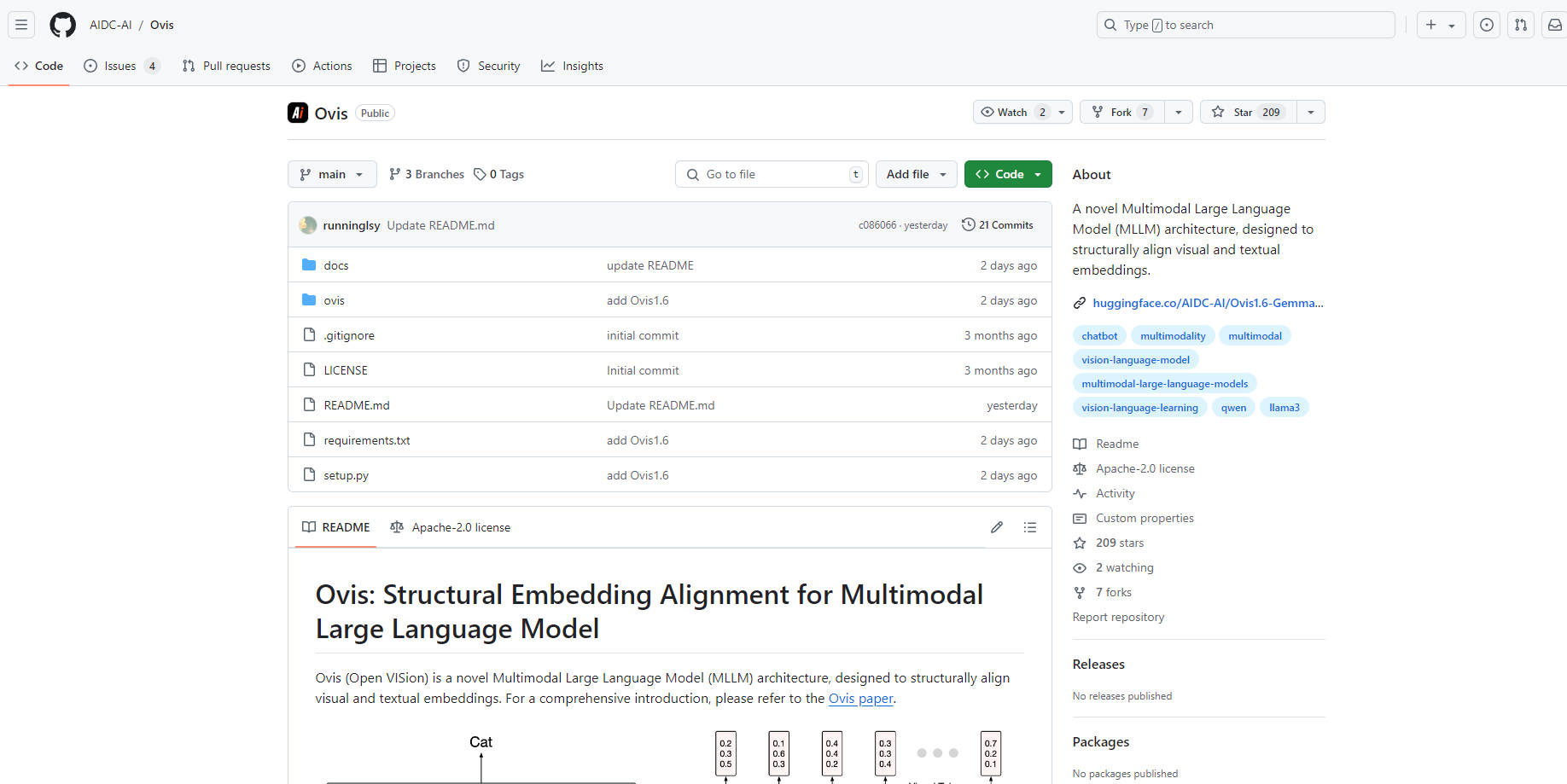

What is Ovis?

Ovis, developed by Alibaba's International AI team, is a Multimodal Large Language Model (MLLM) that structurally aligns visual and textual embeddings. It achieved top scores on the OpenCompass benchmark among models under 3 billion parameters. It excels at mathematical reasoning, visual comprehension, and complex decision-making, and even outperforms closed-source models such as GPT-4o-mini. Ovis accepts both text and image inputs and offers advanced capabilities in visual perception, mathematical problem-solving, and understanding real-life scenarios.

Key Features:

🧮 Mathematical Reasoning: Accurately answers a wide range of math questions involving complex formulas and logical deductions.

Feature Description: Leverages advanced algorithms to solve and explain mathematical problems effectively.

🌐 Object Recognition: Identifies various objects, such as different flower species, showcasing its image recognition prowess.

Feature Description: Uses deep learning to detect and classify objects within images with high accuracy.

📚 Text Extraction: Extracts text information from documents in multiple languages.

Feature Description: Employs optical character recognition to pull text from various sources, supporting multilingual extraction.

💡 Complex Task Decision-Making: Handles multifaceted data inputs for intricate decision-making tasks, like comprehensive image and text analysis.

Feature Description: Integrates and interprets diverse data types to facilitate complex decision-making processes.

🖼️ Image Understanding: Achieves state-of-the-art performance in image comprehension, handling high-resolution and extreme aspect ratio images.

Feature Description: Delivers enhanced understanding of images with advanced processing techniques.

Use Cases:

🎓 Education: Ovis 1.6 aids in learning by explaining complex university-level mathematics.

📊 Business: Analyzes financial reports, providing insights for better decision-making.

🍟 Lifestyle: Teaches users to cook classic dishes by interpreting images and guiding them through each step.

Conclusion:

Ovis 1.6 is a versatile and powerful AI tool for integrating and understanding visual and textual data. With its strong performance on multimodal tasks and an architecture that structurally aligns vision and text, it is a prime choice for users seeking advanced AI assistance across many domains.

FAQs:

Q: What is the unique aspect of Ovis 1.6's design?

A: Ovis 1.6 uses a novel architecture that aligns visual and textual embeddings structurally, enhancing performance on multimodal tasks.
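The idea of "structural" alignment can be illustrated with a toy sketch. As we understand the Ovis paper, a text token indexes a textual embedding table (a one-hot lookup), while each image patch is mapped to a probability distribution over a learnable visual vocabulary, and its embedding is the probability-weighted sum of the rows of a visual embedding table. The code assumes that design; the function names, the tiny 2-dimensional tables, and passing patch logits in directly (instead of computing them from a vision encoder) are hypothetical simplifications, not Ovis's actual implementation.

```python
import math

# Toy sketch of probabilistic visual tokens, assuming the design described in
# the Ovis paper. All names and table sizes here are hypothetical.

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def text_embedding(token_id, text_table):
    """A text token is a discrete index: its embedding is one table row."""
    return text_table[token_id]


def visual_embedding(patch_logits, visual_table):
    """A visual token is a distribution over the visual vocabulary: its
    embedding is the probability-weighted sum of visual table rows."""
    probs = softmax(patch_logits)
    dim = len(visual_table[0])
    return [sum(p * row[d] for p, row in zip(probs, visual_table)) for d in range(dim)]


if __name__ == "__main__":
    # Hypothetical 3-word visual vocabulary with 2-dimensional embeddings.
    table = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    # Equal logits: the patch is an even mix of all three visual words.
    print([round(x, 3) for x in visual_embedding([0.0, 0.0, 0.0], table)])  # [0.667, 0.667]
    # One dominant logit: nearly one-hot, so the result is close to row 0.
    print([round(x, 3) for x in visual_embedding([50.0, 0.0, 0.0], table)])  # [1.0, 0.0]
```

When one logit dominates, the distribution is nearly one-hot and the visual embedding collapses to a single table row, which is the same lookup a text token performs. That shared structure is the intuition behind aligning the two modalities at the embedding level.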

Q: Can Ovis 1.6 be used for commercial purposes?

A: Yes, Ovis is released under the Apache 2.0 open-source license, which is business-friendly and allows for commercial use.

Q: How does Ovis 1.6 perform compared to other models in similar parameter ranges?

A: Ovis 1.6 outperforms other models in its class, ranking first on the OpenCompass benchmark among models under 3 billion parameters and showing superior performance on both text and vision tasks.


Ovis Alternatives:

- OLMo 2 32B: Open-source LLM rivals GPT-3.5! Free code, data & weights. Research, customize, & build smarter AI.

- Oumi is a fully open-source platform that streamlines the entire lifecycle of foundation models, from data preparation and training to evaluation and deployment. Whether you’re developing on a laptop, launching large-scale experiments on a cluster, or deploying models in production, Oumi provides the tools and workflows you need.

- GLM-4.5V: Empower your AI with advanced vision. Generate web code from screenshots, automate GUIs, & analyze documents & video with deep reasoning.

- DreamOmni2 is a multimodal AI model designed specifically for intelligent image editing, allowing users to modify existing visuals by adjusting elements like objects, lighting, textures, and style based on text or visual prompts.

- Omost is a project that converts LLMs' coding capabilities into image generation (or, more accurately, image composing) capabilities.