What is OLMo 2 32B?

OLMo 2 32B is a state-of-the-art large language model (LLM) that aims to set a new standard for open-source AI. Unlike many powerful LLMs, which are closed-source, OLMo 2 32B provides full access to its training data, code, and weights. You can build, customize, and study advanced language models with a level of freedom that closed models do not allow. It was developed to address the limited access that researchers and developers have to high-performing LLMs.

Key Features:

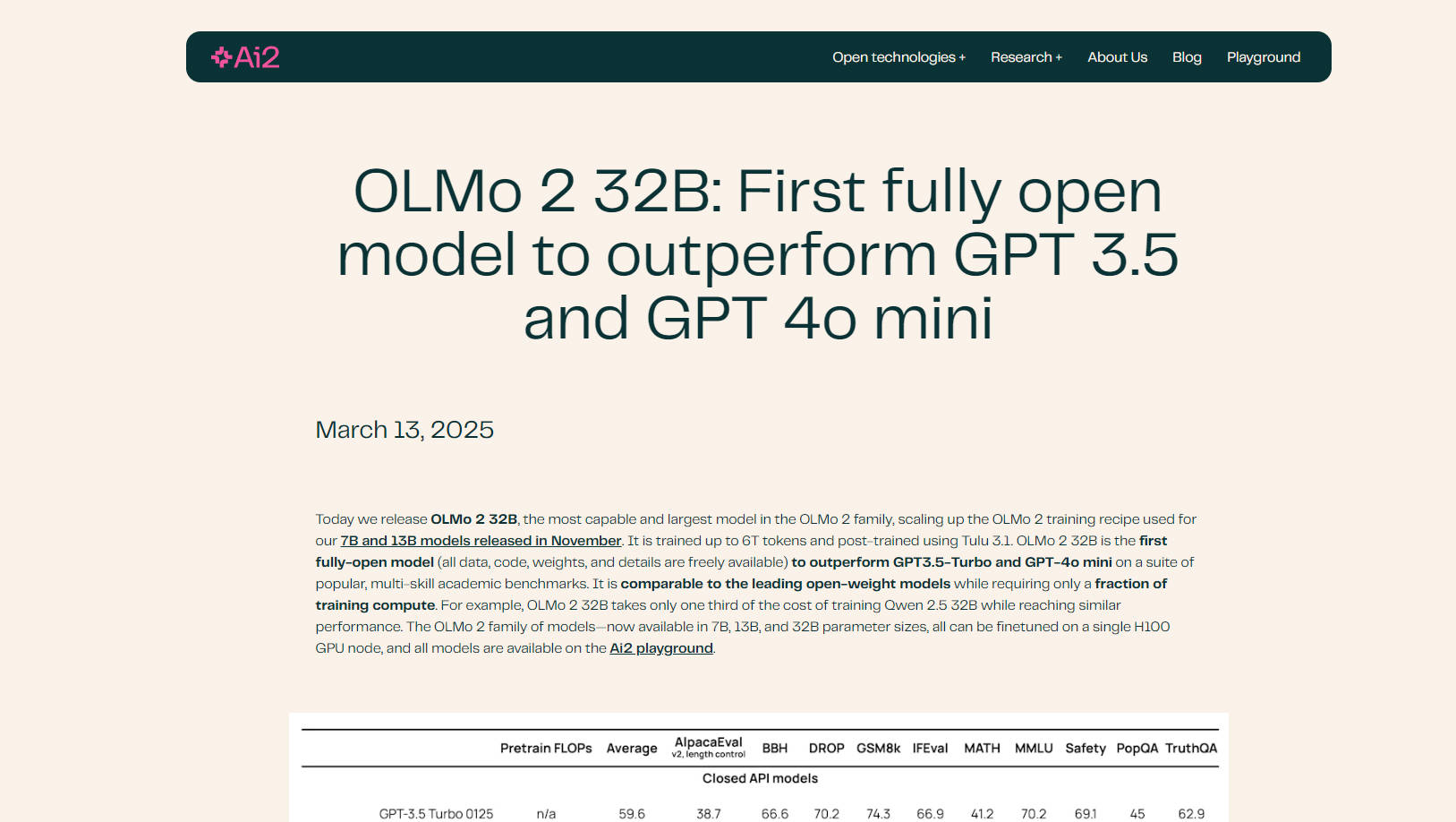

🤖 Outperforms Leading Models: OLMo 2 32B outperforms GPT-3.5 Turbo and GPT-4o mini on a suite of academic benchmarks, demonstrating strong language understanding and generation capabilities.

💻 Fully Open-Source: Gain complete access to the model's training data, code, weights, and methodology. This transparency enables customization and research that closed models do not allow.

⚙️ Efficient Training: OLMo 2 32B reaches its performance at a significantly lower computational cost than comparable models. For instance, it requires only about one-third of the training cost of Qwen 2.5 32B while achieving similar results.

📚 Improved Data and Pretraining: Built on a refined training codebase (OLMo-core), OLMo 2 32B uses extensive datasets: OLMo-Mix-1124 for pretraining and Dolmino for mid-training.

🧠 Advanced Post-Training with RLVR: Incorporates Reinforcement Learning with Verifiable Rewards (RLVR) using Group Relative Policy Optimization (GRPO), further enhancing its instruction-following and reasoning abilities.

⚡ Scalable and Flexible: Designed for modern hardware, OLMo-core supports 4D+ parallelism and fine-grained activation checkpointing, making it adaptable to various training scenarios.

☁️ Trained on Google Cloud: OLMo 2 32B was trained on Google Cloud's Augusta AI Hypercomputer, demonstrating that its training stack performs and scales on real-world cloud infrastructure.
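In large-scale training, "4D parallelism" commonly means combining data, pipeline, context, and tensor parallelism, so the GPU count has to factor into the product of the four degrees and every GPU gets one coordinate per axis. The sketch below is a generic illustration of that bookkeeping, not OLMo-core's API; the `MeshConfig` class, the axis order, and the example degrees are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical axis order, outermost to innermost. Frameworks choose their own
# order; tensor parallelism is typically innermost so its ranks share a node.
AXES = ("dp", "pp", "cp", "tp")


@dataclass(frozen=True)
class MeshConfig:
    dp: int  # data-parallel degree
    pp: int  # pipeline-parallel degree
    cp: int  # context-parallel degree
    tp: int  # tensor-parallel degree

    def world_size(self) -> int:
        return self.dp * self.pp * self.cp * self.tp


def rank_to_coords(rank: int, mesh: MeshConfig) -> Dict[str, int]:
    """Map a global rank to its coordinate on each parallelism axis."""
    if not 0 <= rank < mesh.world_size():
        raise ValueError(f"rank {rank} outside world of size {mesh.world_size()}")
    coords = {}
    # Mixed-radix decomposition: peel off the innermost axis first.
    for axis in reversed(AXES):
        size = getattr(mesh, axis)
        coords[axis] = rank % size
        rank //= size
    return {axis: coords[axis] for axis in AXES}


def coords_to_rank(coords: Dict[str, int], mesh: MeshConfig) -> int:
    """Inverse of rank_to_coords."""
    rank = 0
    for axis in AXES:
        rank = rank * getattr(mesh, axis) + coords[axis]
    return rank


def group_members(rank: int, axis: str, mesh: MeshConfig) -> List[int]:
    """Ranks that differ from `rank` only along `axis` (its communication group)."""
    coords = rank_to_coords(rank, mesh)
    return [coords_to_rank({**coords, axis: i}, mesh) for i in range(getattr(mesh, axis))]


if __name__ == "__main__":
    # Hypothetical layout: 64 GPUs = 4 (dp) x 2 (pp) x 2 (cp) x 4 (tp).
    mesh = MeshConfig(dp=4, pp=2, cp=2, tp=4)
    print(mesh.world_size())               # 64
    print(rank_to_coords(13, mesh))        # {'dp': 0, 'pp': 1, 'cp': 1, 'tp': 1}
    print(group_members(13, "tp", mesh))   # [12, 13, 14, 15]
    print(group_members(13, "dp", mesh))   # [13, 29, 45, 61]
```

With this ordering, tensor-parallel groups are contiguous ranks while data-parallel groups are strided across the whole world, which is why the innermost axis is usually reserved for the most communication-heavy form of parallelism.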

Use Cases:

Academic Research: Researchers can use OLMo 2 32B to study pretraining dynamics, the impact of data on model behavior, and the interplay between different training stages. The open-source nature facilitates in-depth analysis and experimentation. For example, a researcher could modify the training data to investigate how specific types of content influence the model's output.

Custom LLM Development: Developers can fine-tune OLMo 2 32B on their specific datasets to create customized language models for various applications, such as chatbots, content generation, or code completion tools. The model's compatibility with Hugging Face's Transformers library and vLLM simplifies integration into existing workflows.

Advanced Instruction Following: The refined post-training, including RLVR, makes OLMo 2 32B particularly adept at understanding and responding to complex instructions. This is beneficial for tasks requiring nuanced reasoning or creative text generation. For example, you can test the model's capabilities on complex math problems or ethical dilemmas.
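Fine-tuning on your own data usually starts with converting it into a conversation format. The sketch below writes prompt/response pairs to JSON Lines using lists of `role`/`content` messages, the structure Hugging Face chat templates operate on. The top-level `messages` key, the file name, and the rule of skipping empty pairs are assumptions; match them to whatever your fine-tuning script actually expects.

```python
import json
import tempfile
from pathlib import Path
from typing import Iterable, Optional, Tuple


def to_chat_record(prompt: str, response: str, system: Optional[str] = None) -> dict:
    """Build one conversation as a list of role/content messages."""
    messages = []
    if system:
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    messages.append({"role": "assistant", "content": response})
    # The top-level "messages" key is an assumed convention, not a fixed standard.
    return {"messages": messages}


def write_jsonl(pairs: Iterable[Tuple[str, str]], path: Path) -> int:
    """Write one JSON conversation per line and return how many were written."""
    written = 0
    with path.open("w", encoding="utf-8") as f:
        for prompt, response in pairs:
            # Skip pairs with an empty side; they carry no training signal.
            if not prompt.strip() or not response.strip():
                continue
            record = to_chat_record(prompt, response)
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
            written += 1
    return written


if __name__ == "__main__":
    pairs = [
        ("What is 12 * 7?", "12 * 7 = 84."),
        ("Translate 'hello' to French.", ""),  # empty response, skipped
    ]
    with tempfile.TemporaryDirectory() as tmp:
        out = Path(tmp) / "sft_data.jsonl"  # hypothetical file name
        print(write_jsonl(pairs, out))  # 1
        print(out.read_text(encoding="utf-8").strip())
```

Keeping the data as role-tagged messages leaves it to the tokenizer's chat template, rather than hand-written string formatting, to decide how turns are delimited for the model.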

Conclusion:

OLMo 2 32B represents a significant advancement in open-source language models. Its strong benchmark results, complete transparency, and efficient training make it a powerful tool for researchers and developers seeking to push the boundaries of AI. By providing full access to all aspects of the model, OLMo 2 32B fosters innovation and collaboration within the AI community.

FAQ:

What makes OLMo 2 32B "fully open"? Fully open means that all components of the model, including the training data, code, weights, and detailed methodology, are publicly available. This level of transparency is uncommon in the field of large language models.

How does OLMo 2 32B's performance compare to other open-source models? OLMo 2 32B matches or outperforms leading open-weight models like Qwen 2.5 32B and Mistral 24B, while requiring significantly fewer computational resources for training.
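To see where a figure like "one-third of the training cost" can come from, a common back-of-the-envelope estimate for dense-transformer training compute is C ≈ 6·N·D FLOPs, with N parameters and D training tokens. The sketch plugs in approximate, publicly reported figures that are assumptions here and should be checked against the model cards: roughly 32B parameters for both models, about 6T training tokens for OLMo 2 32B, and about 18T pretraining tokens for Qwen 2.5. The result is a rough ratio, not a measured cost.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate dense-transformer training compute: C ~= 6 * N * D."""
    return 6.0 * n_params * n_tokens


# Assumed inputs (approximate; verify against the official model cards).
OLMO2_32B = {"params": 32e9, "tokens": 6e12}
QWEN25_32B = {"params": 32e9, "tokens": 18e12}

olmo = training_flops(OLMO2_32B["params"], OLMO2_32B["tokens"])
qwen = training_flops(QWEN25_32B["params"], QWEN25_32B["tokens"])

print(f"OLMo 2 32B:   {olmo:.2e} FLOPs")  # 1.15e+24 FLOPs
print(f"Qwen 2.5 32B: {qwen:.2e} FLOPs")  # 3.46e+24 FLOPs
print(f"ratio: {olmo / qwen:.2f}")        # 0.33
```

Because the parameter counts are treated as equal, the ratio reduces to the ratio of training tokens; the 6·N·D rule ignores attention FLOPs and hardware efficiency, so it compares compute budgets, not dollars.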

What is OLMo-core? OLMo-core is the newly developed training framework for OLMo 2 32B. It's designed for efficiency, scalability, and flexibility, supporting larger models, different training paradigms, and modalities beyond text.

What is RLVR, and how does it benefit the model? RLVR stands for Reinforcement Learning with Verifiable Rewards. It's a post-training technique that improves the model's ability to follow instructions and reason effectively. For OLMo 2 32B, RLVR uses Group Relative Policy Optimization (GRPO) as its reinforcement learning algorithm.
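In RLVR the reward comes from a programmatic check (for example, whether a math answer matches the reference), and GRPO scores each sampled response relative to the other responses to the same prompt instead of relying on a learned value model. The sketch below shows only those two pieces: a hypothetical exact-match verifier that expects an "Answer: <number>" suffix, and the group-normalized advantage A_i = (r_i − mean(r)) / (std(r) + ε). The policy-gradient update, ratio clipping, and KL penalty of a full GRPO implementation are omitted.

```python
import re
import statistics
from typing import List, Optional

# Hypothetical output convention: the model ends with "Answer: <number>".
ANSWER_RE = re.compile(r"answer:\s*(-?\d+(?:\.\d+)?)", re.IGNORECASE)


def extract_answer(text: str) -> Optional[str]:
    """Return the last 'Answer: <number>' value in a completion, if any."""
    matches = ANSWER_RE.findall(text)
    return matches[-1] if matches else None


def verifiable_reward(completion: str, reference: str) -> float:
    """Binary, programmatically checkable reward: 1.0 on exact match, else 0.0."""
    return 1.0 if extract_answer(completion) == reference else 0.0


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantage: reward minus the group mean, over the group std.

    Uses the population standard deviation; implementations differ on this detail.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    # Four sampled completions for the same prompt: "What is 12 * 7?"
    group = [
        "12 * 7 = 84. Answer: 84",
        "Answer: 74",
        "I think it's 84",  # right number, but no parsable answer -> reward 0
        "Answer: 84",
    ]
    rewards = [verifiable_reward(c, "84") for c in group]
    print(rewards)  # [1.0, 0.0, 0.0, 1.0]
    print([round(a, 3) for a in group_relative_advantages(rewards)])  # [1.0, -1.0, -1.0, 1.0]
```

If every completion in a group earns the same reward, all advantages are zero, so that prompt contributes no learning signal in that step.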

Can I fine-tune OLMo 2 32B on my own data? Yes, one of the key advantages of OLMo 2 32B is its open-source nature, which allows you to fine-tune it on your specific datasets to tailor its performance to your needs. It is supported in Hugging Face's Transformers library.

What hardware is needed to fine-tune OLMo 2 32B? All models in the OLMo 2 family (7B, 13B, and 32B parameter sizes) can be fine-tuned on a single H100 GPU node.
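A rough memory budget shows why a single node can be enough for full fine-tuning of the 32B model. The sketch assumes mixed-precision training with Adam, commonly estimated at about 16 bytes per parameter (bf16 weights and gradients, plus fp32 master weights and two fp32 Adam moments), roughly 32B parameters, and a node of 8 H100 GPUs with 80 GB each, with all state evenly sharded across them (for example with FSDP or ZeRO-3). Activations, buffers, and fragmentation are ignored, so real requirements are higher.

```python
# Assumed byte costs per parameter for mixed-precision Adam (a common estimate).
BYTES_PER_PARAM = {
    "bf16 weights": 2,
    "bf16 gradients": 2,
    "fp32 master weights": 4,
    "fp32 Adam first moment": 4,
    "fp32 Adam second moment": 4,
}

GB = 1e9  # decimal gigabytes, for simple arithmetic


def training_state_gb(n_params: float) -> float:
    """Weights, gradients, and optimizer state in GB, excluding activations."""
    return n_params * sum(BYTES_PER_PARAM.values()) / GB


def per_gpu_gb(n_params: float, n_gpus: int) -> float:
    """State per GPU when everything is evenly sharded across n_gpus."""
    return training_state_gb(n_params) / n_gpus


if __name__ == "__main__":
    n_params = 32e9          # assumed: ~32B parameters
    n_gpus, gpu_mem = 8, 80  # assumed: one node of 8 x 80 GB H100s
    total = training_state_gb(n_params)
    shard = per_gpu_gb(n_params, n_gpus)
    print(f"total state: {total:.0f} GB")                                 # 512 GB
    print(f"per GPU: {shard:.0f} GB of {gpu_mem} GB")                     # 64 GB of 80 GB
    print(f"left for activations: {gpu_mem - shard:.0f} GB per GPU")     # 16 GB per GPU
```

Under these assumptions the margin left for activations is small, which is one reason features such as fine-grained activation checkpointing matter at this scale.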

OLMo 2 32B Alternatives

Oumi is a fully open-source platform that streamlines the entire lifecycle of foundation models - from data preparation and training to evaluation and deployment. Whether you’re developing on a laptop, launching large scale experiments on a cluster, or deploying models in production, Oumi provides the tools and workflows you need.

-

Meta's Llama 4: open-weight AI with a mixture-of-experts (MoE) architecture. Processes text, images, and video. Huge context window. Build smarter, faster!

-

Run large language models locally using Ollama. Enjoy easy installation, model customization, and seamless integration for NLP and chatbot development.

-

Unlock state-of-the-art AI with gpt-oss open-source language models. High-performance, highly efficient, customizable, and runs on your own hardware.

-

OpenCoder is an open-source, high-performance code LLM. Supports English and Chinese. Offers a fully reproducible pipeline. Ideal for developers, educators, and researchers.