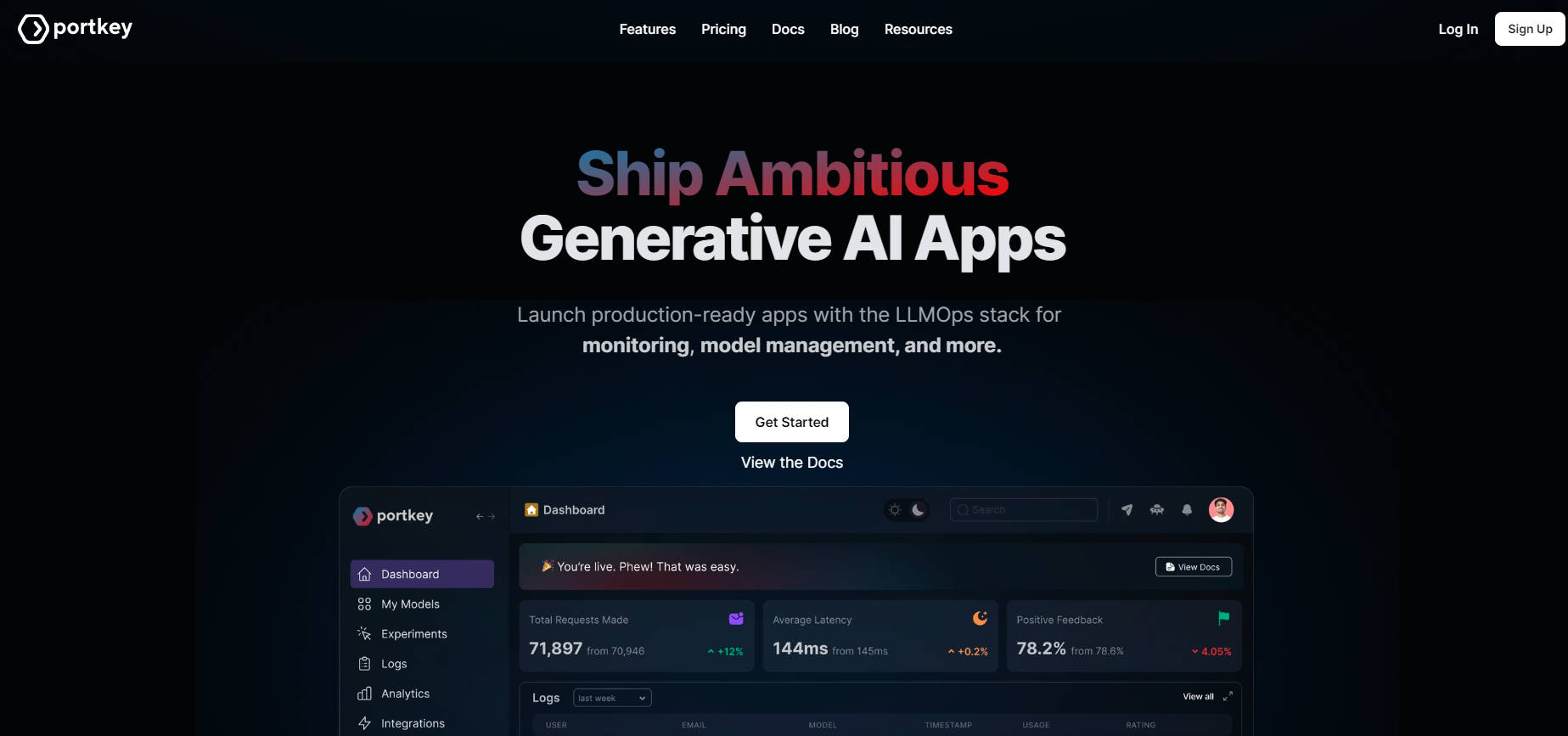

What is Portkey.ai?

Building and deploying AI applications often involves navigating a complex landscape of tools, managing unpredictable costs, and ensuring consistent performance under pressure. Portkey provides a unified control panel designed specifically for teams developing AI-powered products. It integrates the essential components—an AI Gateway, comprehensive Observability, collaborative Prompt Management, and robust Guardrails—to help you launch and scale reliable, cost-effective, and fast AI applications with confidence.

Think of Portkey as the mission control center for your AI stack. It simplifies operations, provides deep visibility, and gives you the levers to optimize performance, manage expenses, and maintain high-quality user experiences as you grow.

Key Features:

🔗 Unify LLM Access with the AI Gateway: Connect to more than 250 Large Language Models (LLMs) and AI providers through a single, consistent API endpoint. Eliminate the need for multiple integrations and manage everything centrally.

⚙️ Enhance Reliability with Smart Routing: Implement automatic fallbacks to switch models during outages, set up load balancing to distribute traffic efficiently, configure automatic retries for failed requests, and utilize caching to reduce latency and cost.

📊 Gain Full Visibility with the Observability Suite: Monitor your AI operations with over 40 metrics covering cost, latency, quality, and usage patterns. Debug issues quickly using detailed logs and request traces for end-to-end visibility.

✍️ Streamline Prompt Engineering: Develop, test, version, and deploy prompts collaboratively across your team. Manage different versions for staging and production environments, control access, and iterate faster with a dedicated studio.

🛡️ Enforce Consistent Behavior with Guardrails: Implement real-time checks on your AI requests and responses using built-in or partner guardrails (like Patronus AI). Synchronously validate outputs, enforce schemas, filter content, and route requests intelligently based on these checks.

🤖 Prepare AI Agents for Production: Integrate Portkey's gateway, observability, and guardrails with popular agent frameworks like LangChain, CrewAI, and AutoGen, making your agentic workflows robust and ready for real-world deployment.

🛠️ Build Agents with Real-World Tool Access: Utilize Portkey's MCP Client to empower your AI agents to interact with over 1,000 verified external tools, enabling them to perform complex tasks based on natural language instructions.

Portkey in Action: Use Cases

Ensure Consistent Uptime and Manage Costs: Imagine your primary LLM provider experiences an outage or starts rate-limiting your requests. Instead of your application failing, Portkey's AI Gateway automatically routes requests to a pre-configured fallback model (like Azure OpenAI or an open-source alternative). At the same time, its caching serves repeated requests instantly, and semantic caching extends this to requests that are worded differently but mean the same thing, reducing redundant calls and lowering your overall LLM spend. Benefit: Maintain application availability and control operational costs without manual intervention.
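To make the fallback idea concrete, here is a minimal Python sketch of the behaviour described above: try the primary provider, retry a few times, move on to the next provider, and answer repeated prompts from a cache. It only illustrates the pattern and is not Portkey's implementation; with Portkey this logic is configured in the gateway rather than written in your application. The names `call_with_fallback`, `flaky_primary`, and `stable_backup` are hypothetical, and the cache shown is a plain exact-match dictionary, not a semantic cache.

```python
import time
from typing import Callable, Dict, Optional, Sequence

# Hypothetical type: any function that takes a prompt and returns text.
Provider = Callable[[str], str]


def call_with_fallback(
    prompt: str,
    providers: Sequence[Provider],
    cache: Dict[str, str],
    retries_per_provider: int = 2,
    backoff_seconds: float = 0.0,
) -> str:
    """Try each provider in order, retrying before moving to the next one."""
    # Exact-match cache: a repeated prompt never reaches a provider.
    if prompt in cache:
        return cache[prompt]

    last_error: Optional[Exception] = None
    for provider in providers:
        for attempt in range(retries_per_provider):
            try:
                answer = provider(prompt)
            except Exception as exc:  # outage, rate limit, timeout, ...
                last_error = exc
                time.sleep(backoff_seconds * (attempt + 1))
                continue
            cache[prompt] = answer
            return answer
    raise RuntimeError("all providers failed") from last_error


# Stand-in providers for illustration only; real ones would call an LLM API.
def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary provider unavailable")


def stable_backup(prompt: str) -> str:
    return f"backup answer to: {prompt}"


shared_cache: Dict[str, str] = {}
print(call_with_fallback("Hello", [flaky_primary, stable_backup], shared_cache))
```

Calling the function a second time with the same prompt and the same `shared_cache` returns the stored answer without touching either provider, which is where the cost saving comes from.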

Debug Performance Issues and Improve Quality: Your users report slow AI responses, but pinpointing the bottleneck is difficult. Portkey's Observability suite provides detailed traces for each request, showing latency at every step – from your application to Portkey to the LLM provider and back. You can filter logs by custom metadata (like user ID or session ID) to analyze patterns, identify slow models or prompts, and use feedback collection to track response quality over time. Benefit: Quickly diagnose and resolve performance problems, leading to a better user experience.
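Filtering by user or session only works if each request carries that information. The sketch below shows one way to build the extra HTTP headers for a request. The header names `x-portkey-metadata` and `x-portkey-trace-id` are assumptions based on Portkey's public documentation and should be confirmed there; the helper `build_tracing_headers` and the metadata keys are hypothetical.

```python
import json
import uuid
from typing import Dict, Optional


def build_tracing_headers(
    user_id: str,
    session_id: str,
    trace_id: Optional[str] = None,
) -> Dict[str, str]:
    """Return extra HTTP headers that tag one request for later filtering."""
    metadata = {"user_id": user_id, "session_id": session_id}
    return {
        # Assumed header names; confirm against the Portkey documentation.
        "x-portkey-metadata": json.dumps(metadata, sort_keys=True),
        # Reuse a caller-supplied trace ID so related calls group together,
        # otherwise generate a fresh one.
        "x-portkey-trace-id": trace_id or uuid.uuid4().hex,
    }


headers = build_tracing_headers("user-123", "session-456")
print(sorted(headers))
```

Passing the same `trace_id` to every LLM call made while serving one user action is what would let a trace view show those calls as a single end-to-end request.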

Accelerate Secure Prompt Deployment: Your engineering and product teams need to collaborate on refining prompts for a new feature. Using Portkey's Prompt Engineering Studio, they can test variations across multiple LLMs side-by-side, track changes with version control, and store prompts centrally. Once approved, prompts can be deployed to production via the AI Gateway with labelled versions, allowing for easy rollbacks if needed, ensuring a smooth and controlled release process. Benefit: Improve team collaboration and deploy prompt updates faster and more reliably.
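The labelled-version workflow is easier to picture with a toy model. The following Python sketch is an in-memory stand-in, not Portkey's API: every name in it (`PromptRegistry`, `publish`, `set_label`, `render`) is hypothetical. It shows why labels make rollbacks cheap: versions are never edited, and a rollback only moves the label.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PromptRegistry:
    """In-memory stand-in for a central prompt store with labelled versions."""

    versions: List[str] = field(default_factory=list)
    labels: Dict[str, int] = field(default_factory=dict)

    def publish(self, template: str) -> int:
        """Store a new version and return its 1-based version number."""
        self.versions.append(template)
        return len(self.versions)

    def set_label(self, label: str, version: int) -> None:
        """Point a label such as 'staging' or 'production' at a version."""
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"unknown version {version}")
        self.labels[label] = version

    def render(self, label: str, **variables: str) -> str:
        """Fill in the template that the label currently points to."""
        return self.versions[self.labels[label] - 1].format(**variables)


registry = PromptRegistry()
v1 = registry.publish("Summarize: {text}")
v2 = registry.publish("Summarize in one sentence: {text}")
registry.set_label("production", v2)  # release the new prompt
registry.set_label("production", v1)  # roll back by moving the label
print(registry.render("production", text="quarterly report"))
```

Application code asks for the `production` label rather than a specific version number, so a release or rollback needs no code change.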

Your AI Operations, Simplified

Portkey brings together the essential infrastructure and tooling needed to move your AI applications from development to production efficiently and reliably. It addresses the core operational challenges—reliability, cost management, speed, and observability—through a single, integrated platform. By providing deep insights and granular control over your AI stack, Portkey allows your team to focus less on infrastructure management and more on building innovative AI features. Teams using Portkey often report faster launch times and greater confidence in scaling their AI initiatives.

Frequently Asked Questions (FAQ)

1. How does Portkey work? How do I integrate it? Integrating Portkey is straightforward. You typically replace the base URL of your current LLM provider (like OpenAI) in your application code with Portkey's unique API endpoint. Portkey then acts as a proxy, routing your requests while adding layers of control, reliability, and observability. Minimal code changes are usually required, and it works out-of-the-box with major providers and frameworks.

2. How does Portkey handle my data and security? Security is a top priority. Portkey is ISO 27001 and SOC 2 certified, and GDPR compliant. All data, including API keys stored in the optional vault and request/response logs, is encrypted both in transit (using TLS) and at rest. For organizations with specific requirements, Portkey offers managed hosting options to deploy within your private cloud environment.

3. Will using Portkey introduce latency to my AI calls? Very little. Portkey is designed for high performance: running on edge compute infrastructure (like Cloudflare Workers), it adds a small overhead per request (benchmarked at under 40 ms). In many cases, features like caching and optimized routing can reduce the overall latency perceived by your end-users and improve the reliability of your application.

4. What AI models and frameworks does Portkey support? Portkey's AI Gateway supports more than 250 LLMs from various providers, including OpenAI, Azure OpenAI, Anthropic, Google Vertex AI, Cohere, and many open-source models. It also offers native integrations with popular development frameworks like LangChain, LlamaIndex, CrewAI, and AutoGen, allowing you to easily incorporate Portkey's features into your existing workflow.
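The base-URL swap described in the first question can be sketched in a few lines of Python. This example only builds the HTTP request and does not send it. The gateway URL and the `x-portkey-api-key` and `x-portkey-provider` header names are assumptions taken from Portkey's public documentation and should be checked there; `build_chat_request` is a hypothetical helper, and the request body follows the OpenAI-style chat format.

```python
import json
import urllib.request

# Assumed gateway endpoint; confirm the current value in the Portkey docs.
PORTKEY_BASE_URL = "https://api.portkey.ai/v1"


def build_chat_request(
    portkey_api_key: str,
    provider: str,
    provider_api_key: str,
    model: str,
    user_message: str,
) -> urllib.request.Request:
    """Build (but do not send) a chat request routed through the gateway."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        # The only change from a direct provider call is the base URL ...
        url=f"{PORTKEY_BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {provider_api_key}",
            # ... plus headers telling the gateway who you are and where
            # to route the call (assumed header names).
            "x-portkey-api-key": portkey_api_key,
            "x-portkey-provider": provider,
        },
    )
```

Passing the returned object to `urllib.request.urlopen` would send it. If you already use a provider SDK, the equivalent change is usually pointing its base URL setting at the gateway and adding the same headers.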

More information on Portkey.ai

Top 5 Countries

Traffic Sources

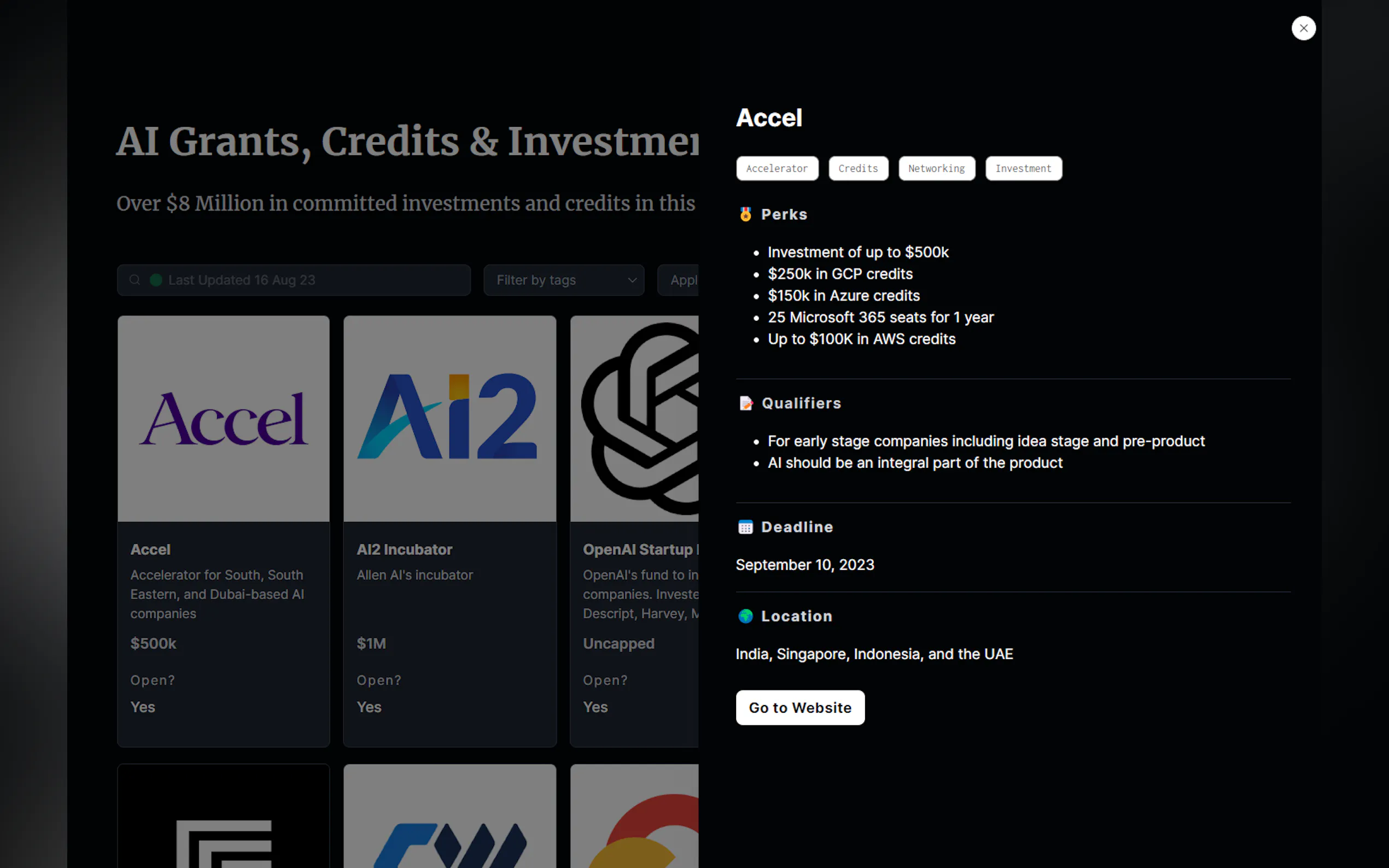

Portkey.ai Alternatives

Experience the power of Portkey's AI Gateway - a game-changing tool for seamless integration of AI models into your app. Boost performance, load balancing, and reliability for resilient and efficient AI-powered applications.

ModelPilot unifies 30+ LLMs via one API. Intelligently optimize cost, speed, quality & carbon for every request. Eliminate vendor lock-in & save.