What is Web LLM?

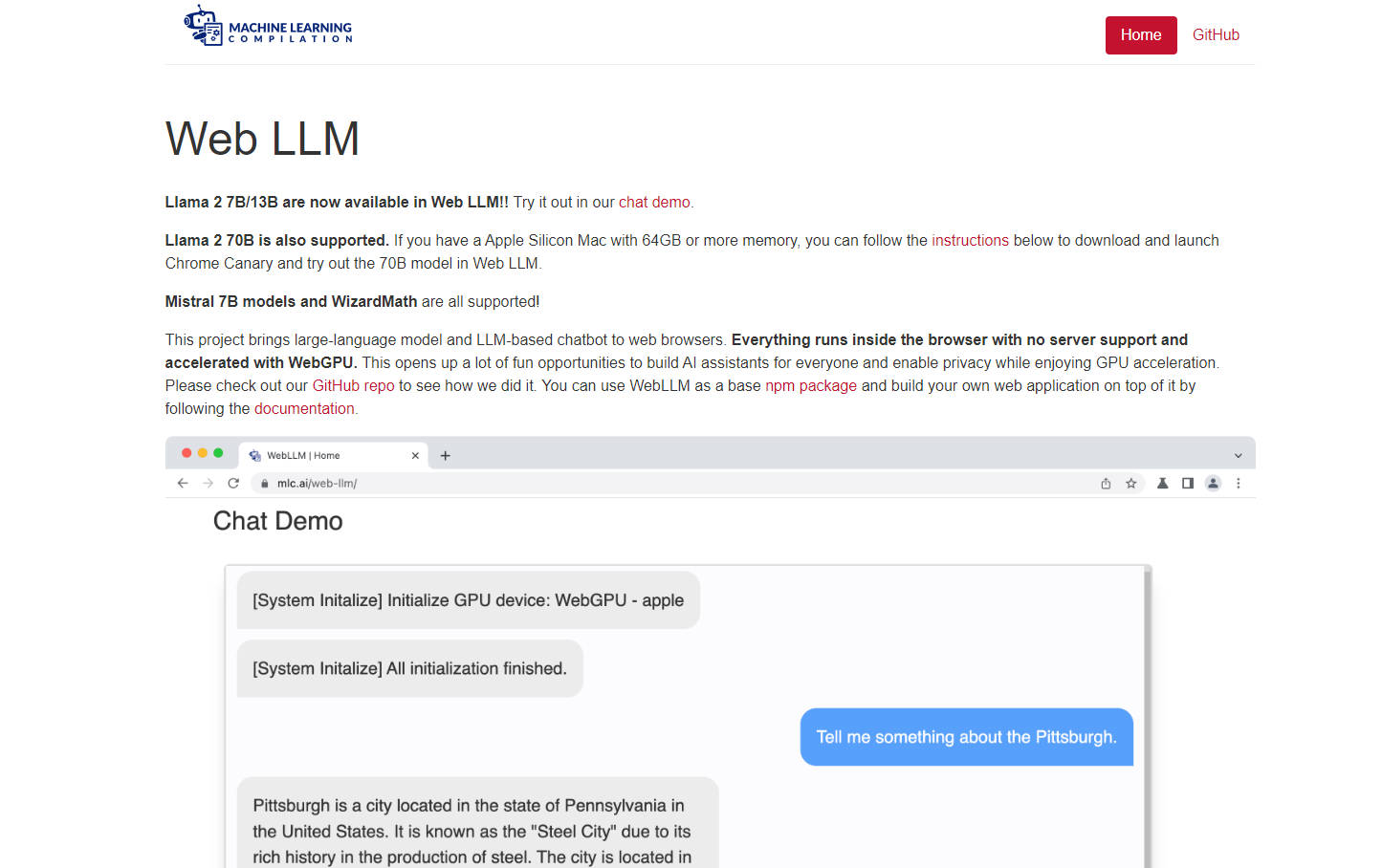

Web LLM is a modular, customizable JavaScript package that lets developers add language-model chat directly to web pages. Inference runs entirely inside the browser, without a server-side backend, and uses WebGPU to accelerate computation on the user's GPU. This makes it well suited to building private, GPU-accelerated AI assistants.
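Because everything depends on WebGPU being available, a page would typically check for it before trying to load a model. The sketch below uses the standard WebGPU entry point, `navigator.gpu.requestAdapter()`, which resolves to `null` when no suitable GPU adapter exists. The `NavigatorLike` and `GPULike` types and the `hasWebGPU` function are hypothetical names introduced here so the example compiles without extra type packages; they are not part of Web LLM.

```typescript
// Minimal structural types (hypothetical) so the sketch compiles without @webgpu/types.
interface GPULike {
  // WebGPU's navigator.gpu.requestAdapter() resolves to an adapter,
  // or to null when no suitable GPU is available.
  requestAdapter(): Promise<unknown | null>;
}

interface NavigatorLike {
  gpu?: GPULike;
}

// Returns true only if the WebGPU API exists AND an adapter can be obtained.
async function hasWebGPU(nav: NavigatorLike | undefined): Promise<boolean> {
  if (!nav || !nav.gpu) {
    return false; // The browser does not expose WebGPU at all.
  }
  try {
    const adapter = await nav.gpu.requestAdapter();
    return adapter !== null; // null means no usable GPU adapter.
  } catch {
    return false; // Treat unexpected errors as "not available".
  }
}
```

In a browser, pass the global `navigator` object (a cast may be needed if your TypeScript setup lacks WebGPU type definitions) and show a fallback message when the result is `false`.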

Key Features:

1. 💻 **Browser-Based:** Runs language model chats directly in the browser, so no inference server is needed and conversations can stay on the user's device.

2. 🚀 **WebGPU Acceleration:** Uses WebGPU to run model computations on the user's GPU, improving throughput and shortening response times.

3. 🧩 **Modular and Customizable:** Its modular design lets developers embed it in existing web applications and pair it with a user interface tailored to their specific requirements.
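To illustrate the integration point, here is a minimal sketch that streams a reply into an arbitrary UI callback, so the page decides how text is displayed. It assumes an engine with an OpenAI-style chat API; in Web LLM such an engine is typically created with `CreateMLCEngine` from the `@mlc-ai/web-llm` package, but check the package documentation for the current signature and model IDs. The `StreamingChatEngine` interface and the `streamReply` function are hypothetical names defined here, and the chunk shape is modeled on the OpenAI streaming format, so the example can run against a stand-in engine.

```typescript
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Shape of one streamed chunk, modeled on the OpenAI-style format (assumption).
interface ChatChunk {
  choices: { delta: { content?: string | null } }[];
}

// Hypothetical minimal view of an engine exposing an OpenAI-style chat API.
interface StreamingChatEngine {
  chat: {
    completions: {
      create(req: { messages: ChatMessage[]; stream: true }): Promise<AsyncIterable<ChatChunk>>;
    };
  };
}

// Streams the reply piece by piece into a UI callback and returns the full text.
async function streamReply(
  engine: StreamingChatEngine,
  messages: ChatMessage[],
  onToken: (text: string) => void,
): Promise<string> {
  const chunks = await engine.chat.completions.create({ messages, stream: true });
  let reply = "";
  for await (const chunk of chunks) {
    // Some chunks carry no text (e.g. the final one), so default to "".
    const piece = chunk.choices[0]?.delta.content ?? "";
    if (piece) {
      reply += piece;
      onToken(piece); // e.g. append to a chat bubble in the page
    }
  }
  return reply;
}
```

Because `streamReply` depends only on a small interface, the same function works with a real engine in the browser and with a fake one in unit tests; the `onToken` callback is where a page would append text to its own chat UI.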

Use Cases:

1. 💬 **AI Chat Applications:** Create interactive AI chatbots for websites and online platforms, providing users with conversational, human-like interactions.

2. 💁 **Virtual Assistants:** Build virtual assistants that help users complete tasks and answer their questions, making applications more engaging and efficient to use.

3. 💡 **Conversational Interfaces:** Build conversational user interfaces that enable natural language interactions, making applications more intuitive and user-friendly.
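A chatbot also needs to remember earlier turns. One simple approach, sketched below under our own assumptions, keeps a fixed system prompt plus a bounded window of recent messages in the `{ role, content }` format that OpenAI-style chat APIs accept. The `Conversation` class and its `maxHistory` limit are hypothetical and not part of Web LLM; the limit exists because an on-device model has a finite context window.

```typescript
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Hypothetical helper: a system prompt plus the most recent turns.
class Conversation {
  private readonly systemPrompt: string;
  private readonly maxHistory: number; // illustrative limit, not a Web LLM setting
  private history: ChatMessage[] = [];

  constructor(systemPrompt: string, maxHistory: number = 8) {
    this.systemPrompt = systemPrompt;
    this.maxHistory = maxHistory;
  }

  addUser(content: string): void {
    this.push({ role: "user", content });
  }

  addAssistant(content: string): void {
    this.push({ role: "assistant", content });
  }

  // Messages to send to the engine: system prompt first, then recent history.
  messages(): ChatMessage[] {
    return [{ role: "system", content: this.systemPrompt }, ...this.history];
  }

  private push(message: ChatMessage): void {
    this.history.push(message);
    // Drop the oldest messages once the history exceeds the limit.
    while (this.history.length > this.maxHistory) {
      this.history.shift();
    }
  }
}
```

After each exchange, call `addUser` and `addAssistant`, then pass `messages()` to the engine. Trimming one message at a time is deliberately simple; a production app might trim whole user/assistant pairs or summarize older turns instead.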

Conclusion:

Web LLM lets developers build applications around language-model chat and integrate that capability directly into web pages, with room to customize how it looks and behaves. Because it runs in the browser, accelerates inference with WebGPU, and has a modular design, it is a compelling choice for building engaging, intelligent user experiences.