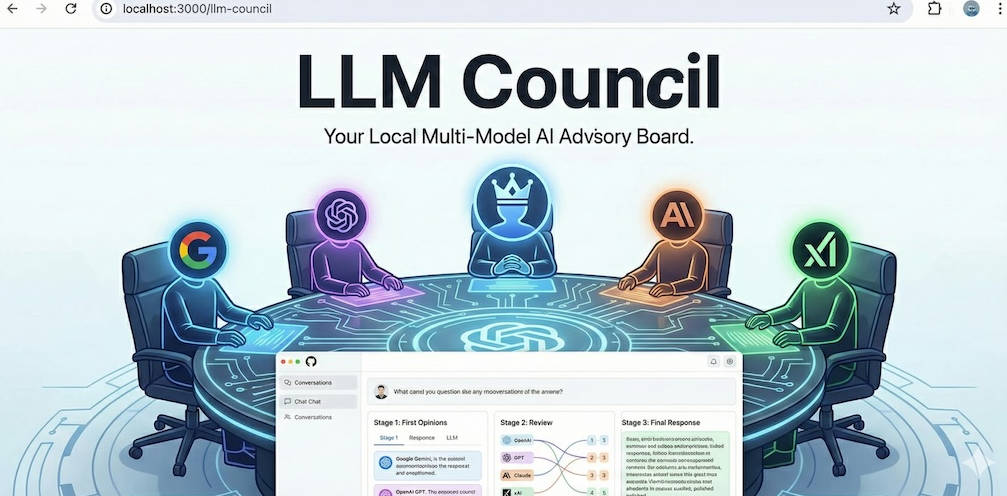

What is LLM Council?

The LLM Council is a locally hosted web application that addresses the limitations of single-model AI queries by drawing on several leading Large Language Models (LLMs) at once. Instead of relying on one perspective, it orchestrates a peer-review and synthesis process, so the final response has been cross-checked by multiple models and draws on a diversity of opinions. It is well suited to users who need depth, accuracy, and a comparative view of AI responses on complex or nuanced topics.

Key Features

The LLM Council is built around a three-stage process that turns parallel queries into a single, refined answer.

🧠 Parallel Query and First Opinions

When you submit a query, it is sent in parallel to every model configured in your Council (e.g., GPT, Gemini, Claude, Grok). You immediately get a spectrum of initial answers, displayed in a tabbed view, which lets you quickly inspect how different foundation models interpret and address your prompt and compare them directly.
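A minimal sketch of this fan-out step in Python, assuming an async `query_fn(model, prompt)` that wraps whatever API the Council actually uses. The names `collect_first_opinions` and `fake_query` are hypothetical and are not taken from the project; the point is that all requests run concurrently rather than one after another.

```python
import asyncio
from typing import Awaitable, Callable

# Hypothetical: an async function that sends one prompt to one model and returns its reply.
QueryFn = Callable[[str, str], Awaitable[str]]


async def collect_first_opinions(models: list[str], prompt: str, query_fn: QueryFn) -> dict[str, str]:
    """Stage 1: send the same prompt to every Council model concurrently."""
    # asyncio.gather runs the coroutines concurrently and returns results in input order.
    # return_exceptions=True keeps one failing model from cancelling the whole Council.
    results = await asyncio.gather(
        *(query_fn(model, prompt) for model in models), return_exceptions=True
    )
    # Keep only the models that answered successfully.
    return {m: r for m, r in zip(models, results) if not isinstance(r, BaseException)}


async def fake_query(model: str, prompt: str) -> str:
    # Stand-in for a real API call; simulates a little network latency.
    await asyncio.sleep(0.01)
    return f"{model} answer to: {prompt}"


if __name__ == "__main__":
    council = ["model-a", "model-b", "model-c"]
    first = asyncio.run(collect_first_opinions(council, "What is entropy?", fake_query))
    for model, answer in first.items():  # one entry per tab in the UI
        print(f"[{model}] {answer}")
```

Each key of the returned dictionary corresponds to one tab of first opinions; dropping failed models (rather than raising) is one design choice among several.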

⚖️ Anonymized Peer Review and Ranking

This is the critical quality-assurance step. Each Council member is then given the responses generated by the others. Crucially, the models' identities are anonymized at this stage to prevent bias or "playing favorites." Each model is asked to critically review and rank the other responses on criteria such as accuracy and insight. This built-in critique loop is designed to have the models themselves surface weaknesses, inaccuracies, or gaps in scope in the initial responses.
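One way to implement the anonymization and ranking, sketched in Python under stated assumptions: responses are relabeled as "Response A", "Response B", and so on in a shuffled order, each reviewer's own answer is left out of its prompt, and rankings are summarized by average position. The labeling scheme, the prompt wording, and the average-rank aggregation are all illustrative choices, not the project's documented behavior.

```python
import random
import string
from typing import Optional


def anonymize(responses: dict[str, str], seed: Optional[int] = None) -> tuple[dict[str, str], dict[str, str]]:
    """Assign shuffled letter labels so reviewers cannot tell which model wrote what."""
    models = list(responses)
    random.Random(seed).shuffle(models)  # shuffle so label order reveals nothing
    label_to_model = {f"Response {letter}": m for letter, m in zip(string.ascii_uppercase, models)}
    labeled = {label: responses[m] for label, m in label_to_model.items()}
    return labeled, label_to_model  # label_to_model stays hidden from reviewers


def review_prompt(question: str, labeled: dict[str, str], exclude: Optional[str] = None) -> str:
    """Build the prompt one reviewer sees; `exclude` drops the reviewer's own answer."""
    parts = [
        f"Question: {question}",
        "Rank the following responses from best to worst for accuracy and insight.",
    ]
    for label, text in labeled.items():
        if label != exclude:
            parts.append(f"{label}:\n{text}")
    return "\n\n".join(parts)


def aggregate_rankings(rankings: list[list[str]], label_to_model: dict[str, str]) -> list[tuple[str, float]]:
    """Average each model's position (1 = best) across all reviewers, best first."""
    positions: dict[str, list[int]] = {}
    for ranking in rankings:
        for pos, label in enumerate(ranking, start=1):
            positions.setdefault(label_to_model[label], []).append(pos)
    averages = [(model, sum(p) / len(p)) for model, p in positions.items()]
    return sorted(averages, key=lambda item: item[1])
```

Only after all rankings are collected is `label_to_model` used to map labels back to model names, so the de-anonymization never reaches the reviewers.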

👨‍⚖️ Chairman Synthesis for Final Output

Following the peer review, a designated Chairman LLM receives all of the initial responses together with the cross-rankings. The Chairman synthesizes this material into a single, unified final answer, combining the strongest elements, resolving contradictions, and incorporating the critiques, and presents it directly to the user. This final answer is intended to reflect the Council's consensus and the best of its individual responses.
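The Chairman step amounts to one more model call whose prompt bundles everything from the first two stages. The Python sketch below assumes the same hypothetical async `query_fn(model, prompt)` interface; `chairman_prompt` and `synthesize` are illustrative names, and the prompt wording is an assumption rather than the Council's actual instructions.

```python
import asyncio
from typing import Awaitable, Callable

# Hypothetical: an async function that sends one prompt to one model and returns its reply.
QueryFn = Callable[[str, str], Awaitable[str]]


def chairman_prompt(question: str, responses: dict[str, str], reviews: dict[str, str]) -> str:
    """Assemble what the Chairman sees: the question, stage-1 answers, and stage-2 reviews."""
    lines = [f"Original question: {question}", "", "Council responses:"]
    lines += [f"- {model}: {text}" for model, text in responses.items()]
    lines += ["", "Peer reviews and rankings:"]
    lines += [f"- {reviewer}: {review}" for reviewer, review in reviews.items()]
    lines += [
        "",
        "Write one final answer: combine the strongest points, "
        "resolve contradictions, and address the critiques above.",
    ]
    return "\n".join(lines)


async def synthesize(
    chairman: str,
    question: str,
    responses: dict[str, str],
    reviews: dict[str, str],
    query_fn: QueryFn,
) -> str:
    """Stage 3: a single call to the Chairman model with the full Council transcript."""
    return await query_fn(chairman, chairman_prompt(question, responses, reviews))


if __name__ == "__main__":
    async def echo(model: str, prompt: str) -> str:
        return f"[{model} received]\n{prompt}"  # stub: echoes the prompt back

    print(asyncio.run(synthesize(
        "chairman-model", "What is entropy?",
        {"model-a": "A measure of disorder."},
        {"model-b": "Ranks model-a first."},
        echo,
    )))
```

Because the Chairman sees both the raw answers and the critiques, its output quality depends heavily on how clearly this prompt asks it to reconcile them.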

Use Cases

The LLM Council mechanism is particularly powerful in situations where a single, definitive answer is insufficient, or where the complexity of the topic requires multiple expert viewpoints.

Deep Subject Matter Research

When exploring complex or ambiguous topics, such as historical analysis, philosophical concepts, or nuanced scientific principles, the Council gives you not just one answer but a comprehensive synthesis that addresses the topic from multiple angles. The models differ in their training data and inherent biases, and peer critique helps balance those differences.

Advanced Software Development and Debugging

Different models often excel in different languages or frameworks, which makes the Council useful when you ask for code generation or help troubleshooting complex technical issues. Submitting the query to the Council gives you multiple viable code suggestions along with critiques of each, so you can select or combine the most efficient and robust solution for your project.

Comparative AI Model Evaluation

For researchers or developers actively evaluating the performance of various LLMs, the Council provides a real-time way to compare outputs side by side, along with the models' own critical assessments of one another. This is valuable for understanding each model's strengths and weaknesses before committing to one platform for production use.

Why Choose LLM Council?

The primary value proposition of the LLM Council is its commitment to vetted quality and transparency over speed or simplicity. Standard AI interfaces offer speed; the Council offers depth and reliability through structured, automated peer review.

- Verifiable Insight: You don't just receive a final answer; you also receive the raw opinions and the cross-critiques that informed it. This transparency lets you judge the final output with more confidence than a single black-box response allows.

- Mitigation of Single-Model Bias: Because diverse models review each other's work, the system helps reduce the impact of biases or "hallucinations" present in any one model's output, which can lead to a more balanced final result.

- Customizable Expertise: You maintain complete control over which models constitute your "Council" and which model serves as the final "Chairman." This allows you to tailor the collective expertise precisely to the domain of your queries (e.g., prioritizing coding models for technical tasks).
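Choosing the Council and its Chairman is essentially configuration. The Python sketch below shows one possible shape for it, assuming a validated dataclass with named presets; `CouncilConfig`, `PRESETS`, and every model identifier in it are hypothetical placeholders, not real API model names or the application's actual settings format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CouncilConfig:
    """Which models sit on the Council and which one acts as Chairman."""
    members: tuple[str, ...]
    chairman: str

    def __post_init__(self) -> None:
        # Peer review needs at least two members to have anything to compare.
        if len(self.members) < 2:
            raise ValueError("a Council needs at least two members")
        if len(set(self.members)) != len(self.members):
            raise ValueError("duplicate Council members")
        if not self.chairman:
            raise ValueError("a Chairman model must be specified")


# Hypothetical presets; all identifiers are placeholders, not real model names.
PRESETS = {
    "general": CouncilConfig(
        members=("general-model-a", "general-model-b", "general-model-c"),
        chairman="general-model-b",
    ),
    "coding": CouncilConfig(
        members=("code-model-a", "code-model-b", "general-model-a"),
        chairman="code-model-a",
    ),
}
```

Validating the configuration up front means a misconfigured Council fails at startup rather than partway through a query.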

Conclusion

The LLM Council offers a sophisticated approach to knowledge acquisition, transforming the AI interaction from a solo query into a structured, expert panel discussion. For users demanding comprehensive, critically reviewed, and deeply insightful responses, the Council provides a verifiable path to higher-quality AI output.

Explore how the LLM Council can elevate your research and analysis with answers vetted by a panel of leading AI models.