What is Deepchecks?

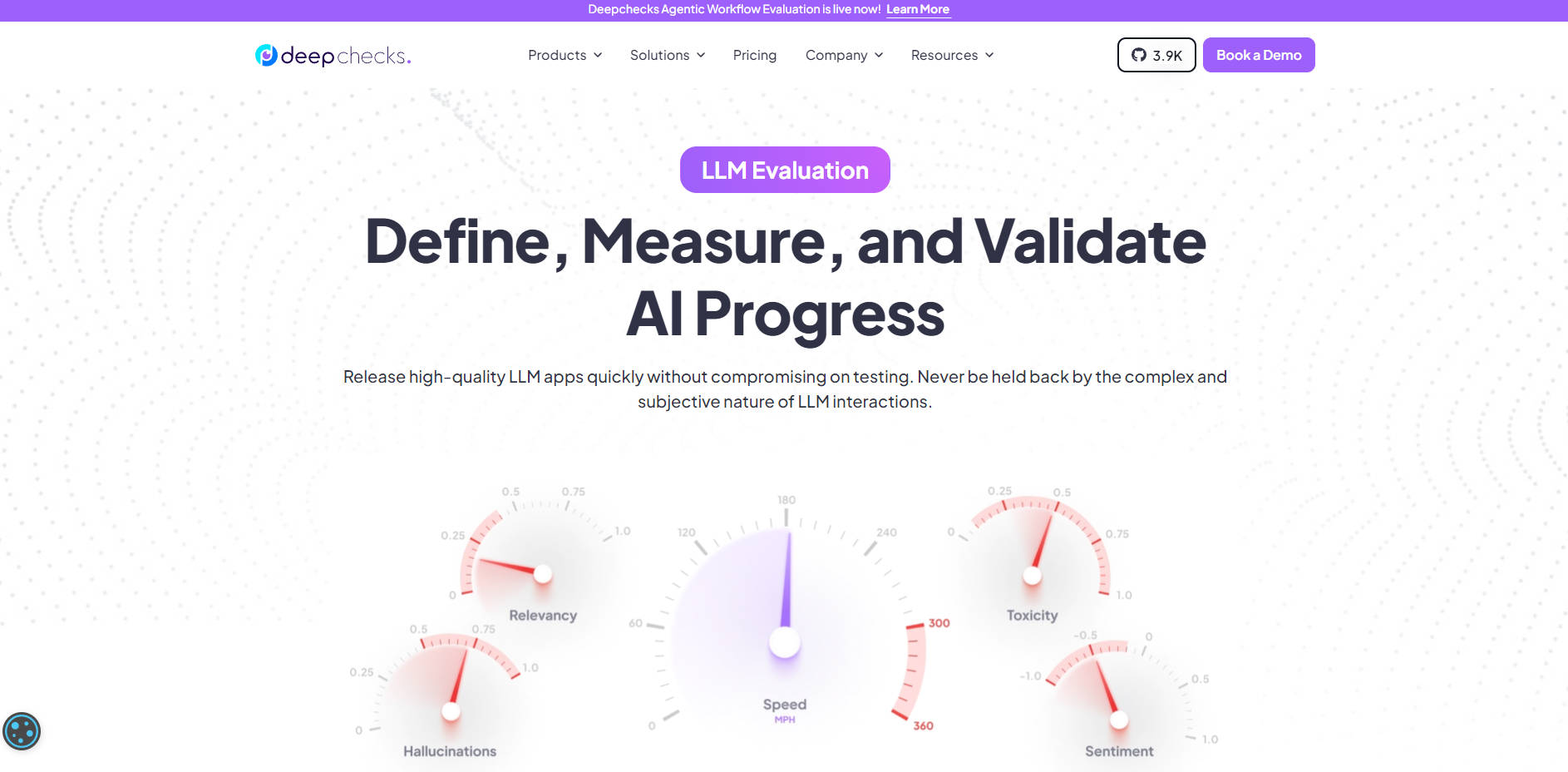

Deepchecks provides a complete, end-to-end evaluation platform designed for AI teams. It directly addresses the complex, subjective, and often manual process of testing LLM applications, enabling you to move from development to production faster and with greater confidence. This platform transforms LLM evaluation from a series of ad-hoc projects into a systematic, data-driven workflow.

Key Features

🧪 Automated Scoring & Annotation: Leverage a sophisticated pipeline to automatically score and annotate your LLM interactions based on nuanced constraints. You retain full control with a manual override, allowing you to create a "golden set" or ground truth that fine-tunes the automated system for exceptional accuracy.

📊 Comprehensive Version Comparison: Make metric-driven decisions by systematically comparing every component of your LLM stack. You can easily experiment with and validate different prompts, models (e.g., GPT-4 vs. Claude 3), vector databases, and retrieval methods to find the optimal configuration for your specific use case.

🔍 Full Lifecycle Monitoring & Debugging: Go beyond pre-production testing. Deepchecks monitors your LLM applications live in production to catch hallucinations, performance degradation, or harmful content. Its root-cause analysis tools help you methodically identify your application's weakest segments and pinpoint the exact step where a failure occurred.

🛡️ Flexible & Secure Deployment: Integrate Deepchecks into your existing stack with peace of mind. Multiple deployment options, from multi-tenant SaaS to AWS GovCloud and fully on-premises installations, let you meet data privacy and security requirements, including SOC 2, GDPR, and HIPAA compliance.

Use Cases

1. Optimizing a Customer Support RAG Agent: Imagine you're developing a RAG (Retrieval-Augmented Generation) agent to answer customer questions based on your knowledge base. Instead of relying on anecdotal evidence, you can use Deepchecks to run a dozen experiments comparing different embedding models and chunking strategies. The platform provides clear, quantitative scores on response relevance and factual accuracy, allowing you to definitively select the version that provides the most helpful answers and reduces hallucinations.

2. Ensuring AI Safety for a Content Generation Tool: Your team has built a tool that generates marketing copy. To prevent brand damage, you need to ensure its outputs are always on-brand, safe, and free of harmful content. You can configure Deepchecks to run continuously within your CI/CD pipeline, automatically flagging any responses that violate your defined safety metrics. In production, it continues to monitor for unexpected behavior and alerts you as soon as the model generates problematic content, so you can intervene before it impacts users.
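Both use cases follow the same pattern: score every interaction, aggregate the scores per version, then pick or block a version based on thresholds. The sketch below illustrates that pattern in plain Python. It is not the Deepchecks SDK; the record fields (`version`, `relevance`, `safe`), the function names, and the sample values are all hypothetical and exist only for illustration.

```python
from statistics import mean

# Hypothetical evaluation records, one per scored interaction.
# Field names and values are illustrative assumptions, not a Deepchecks schema.
RESULTS = [
    {"version": "chunk-256", "relevance": 0.80, "safe": True},
    {"version": "chunk-256", "relevance": 0.90, "safe": True},
    {"version": "chunk-512", "relevance": 0.95, "safe": True},
    {"version": "chunk-512", "relevance": 0.93, "safe": False},
]


def summarize(results):
    """Group records by version and compute mean relevance and unsafe rate."""
    by_version = {}
    for record in results:
        by_version.setdefault(record["version"], []).append(record)
    return {
        version: {
            "mean_relevance": mean(r["relevance"] for r in rows),
            "unsafe_rate": sum(not r["safe"] for r in rows) / len(rows),
        }
        for version, rows in by_version.items()
    }


def best_version(summary, max_unsafe_rate=0.0):
    """Return the version with the highest mean relevance among those
    whose unsafe rate stays within the budget, or None if none qualify."""
    eligible = {
        v: s for v, s in summary.items() if s["unsafe_rate"] <= max_unsafe_rate
    }
    if not eligible:
        return None
    return max(eligible, key=lambda v: eligible[v]["mean_relevance"])


def ci_gate(summary, version, max_unsafe_rate=0.0):
    """Return True if the given version may ship under the safety budget."""
    return summary[version]["unsafe_rate"] <= max_unsafe_rate


if __name__ == "__main__":
    summary = summarize(RESULTS)
    print("selected:", best_version(summary))
    print("chunk-512 passes gate:", ci_gate(summary, "chunk-512"))
```

In a CI job, a script like this would typically exit with a non-zero status when `ci_gate` returns `False`, so that the pipeline stops before an unsafe version is deployed.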

Unique Advantages

The market has many evaluation tools, but Deepchecks is engineered differently to solve the core challenges of LLM validation.

Beyond LLM-as-a-Judge: Instead of relying on a single, general-purpose LLM for evaluation, Deepchecks uses a proprietary Swarm of Evaluation Agents. This advanced architecture employs a set of specialized Small Language Models (SLMs) and multi-step NLP pipelines that work together using Mixture of Experts (MoE) techniques. This approach simulates an intelligent human annotator, delivering superior accuracy and consistency.

A True End-to-End Platform: While many open-source projects provide evaluation techniques, they often require significant DIY effort to become a usable solution. Deepchecks provides a complete, integrated platform that covers the entire lifecycle—from generating test datasets and comparing versions in development to robust monitoring and debugging in production.

Evidence-Based Results: Teams using Deepchecks report tangible, business-critical outcomes. The platform has been shown to deliver a 70% decrease in hallucinations and low-quality responses and a 5x improvement in the time-to-production for new LLM applications.

Conclusion

Deepchecks provides the rigorous, scalable, and systematic framework necessary to build, deploy, and maintain high-quality LLM applications. By replacing subjective guesswork with automated, data-driven evaluation, you can innovate faster, mitigate risks, and ship products that consistently deliver value.

Explore how Deepchecks can streamline your LLM development lifecycle and ensure your applications perform as intended.

Deepchecks Alternatives

Automate AI and ML validation with Deepchecks. Proactively identify issues, validate models in production, and collaborate efficiently. Build reliable AI systems.

Companies of all sizes use Confident AI to justify why their LLM deserves to be in production.

Braintrust: The end-to-end platform to develop, test & monitor reliable AI applications. Get predictable, high-quality LLM results.

Evaluate & improve your LLM applications with RagMetrics. Automate testing, measure performance, and optimize RAG systems for reliable results.
