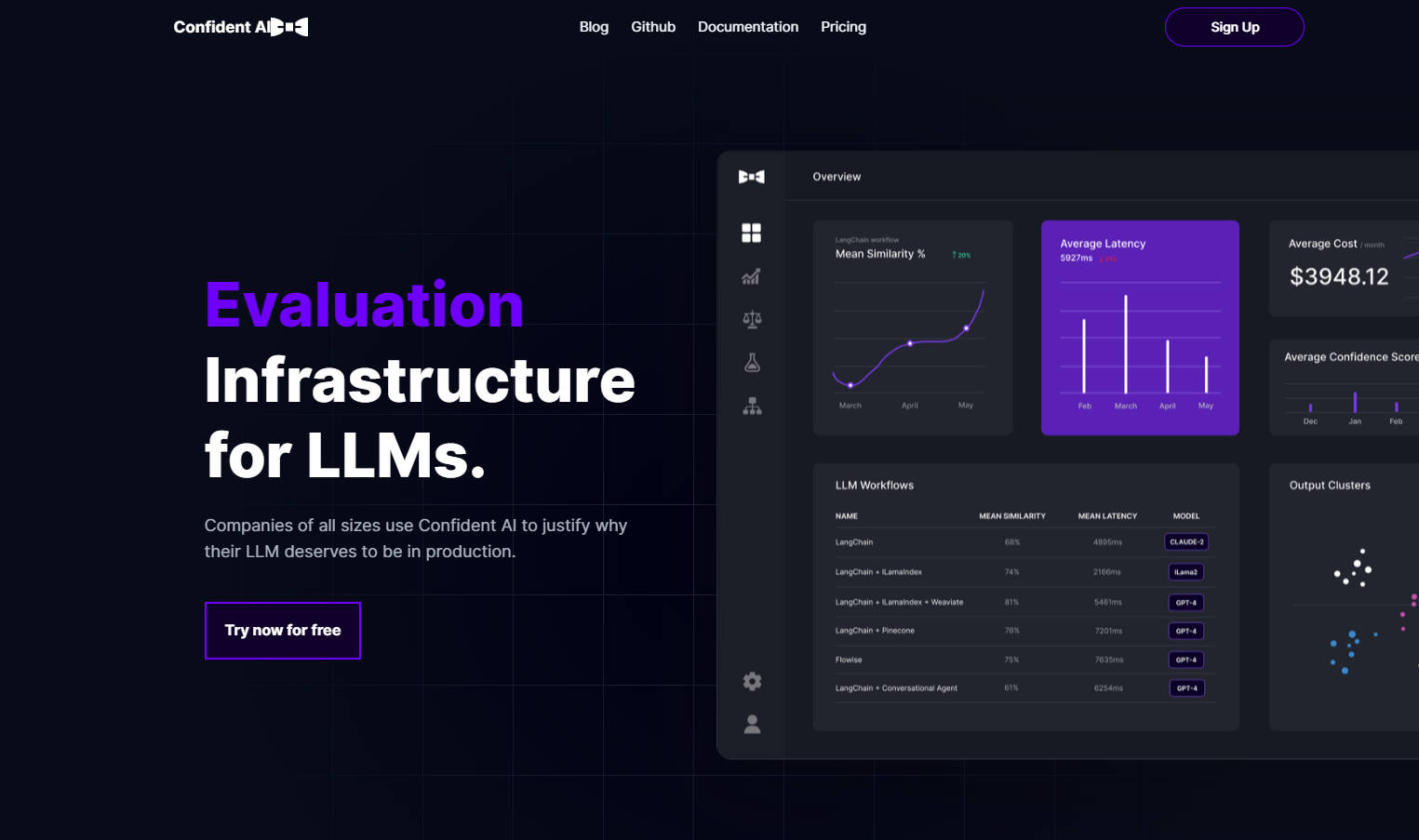

What is Confident AI?

Building and deploying LLM applications? Confident AI is a platform for benchmarking, safeguarding, and improving their performance. Built by the creators of DeepEval, the open-source LLM evaluation framework, it offers research-backed metrics, guardrails, and tools that help your LLM systems deliver reliable, high-quality outputs. Whether you're a startup or a global enterprise, Confident AI simplifies LLM evaluation, monitoring, and optimization, so you can focus on innovation instead of debugging.

Key Features:

✨ Centralized Dataset Curation

Ditch scattered tools like Google Sheets or Notion. Confident AI unifies your LLM evaluation workflow, making it easy to curate, manage, and annotate datasets in one place.

🚀 Seamless CI/CD Integration

Through its Pytest integration, you can unit test LLM systems in your CI/CD pipeline, detect performance drift, and pinpoint regressions, all without disrupting your existing workflows.
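A minimal, self-contained sketch of the Pytest pattern: score an LLM answer with a metric, fail the test if the score falls below a threshold, and flag a regression if it drops too far from a stored baseline. This sketch does not use Confident AI's or DeepEval's API. `generate_answer`, `keyword_coverage`, `BASELINE`, `THRESHOLD`, and `MAX_DROP` are hypothetical stand-ins, and the toy keyword metric is much cruder than a real LLM evaluation metric.

```python
# Hypothetical sketch of unit testing an LLM app with Pytest.
# generate_answer() stands in for your real LLM call, and keyword_coverage()
# is a toy metric. Neither is part of Confident AI or DeepEval.


def generate_answer(question: str) -> str:
    """Hypothetical stand-in for an LLM call; replace with your application."""
    canned = {
        "What is the refund window?": "You can request a refund within 30 days of purchase.",
    }
    return canned.get(question, "I don't know.")


def keyword_coverage(output: str, expected_keywords: list[str]) -> float:
    """Toy metric: fraction of expected keywords found in the output (case-insensitive)."""
    if not expected_keywords:
        return 1.0
    text = output.lower()
    hits = sum(1 for kw in expected_keywords if kw.lower() in text)
    return hits / len(expected_keywords)


# Scores from a previously accepted run (hypothetical baseline values).
BASELINE = {"What is the refund window?": 1.0}
THRESHOLD = 0.7  # minimum acceptable score for any answer
MAX_DROP = 0.1   # largest allowed drop versus baseline before we call it a regression


def test_refund_answer_meets_threshold():
    question = "What is the refund window?"
    score = keyword_coverage(generate_answer(question), ["refund", "30 days"])
    assert score >= THRESHOLD


def test_refund_answer_no_regression():
    question = "What is the refund window?"
    score = keyword_coverage(generate_answer(question), ["refund", "30 days"])
    assert BASELINE[question] - score <= MAX_DROP
```

Saved under a name Pytest discovers (for example a hypothetical `test_llm_app.py`), it runs with a plain `pytest` call, so it fits into any CI job that already runs your test suite.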

🔍 Real-Time Monitoring & Tracing

Monitor LLM outputs in production, filter unsatisfactory results, and automatically evaluate them to improve your datasets for future testing.
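The monitor, filter, and curate loop can be sketched in plain Python. This is an illustrative assumption, not Confident AI's tracing API. `ProductionTrace`, `collect_failures`, and `add_to_dataset` are hypothetical names, and the `score` field stands in for whatever automated evaluation or user-feedback signal you attach to each logged response.

```python
from dataclasses import dataclass


@dataclass
class ProductionTrace:
    """Hypothetical record of one logged LLM interaction."""
    input: str
    output: str
    score: float  # e.g. an automated evaluation score in [0, 1]


def collect_failures(traces: list[ProductionTrace], threshold: float = 0.5) -> list[ProductionTrace]:
    """Keep only the traces whose score falls below the threshold."""
    return [t for t in traces if t.score < threshold]


def add_to_dataset(dataset: list[dict], failures: list[ProductionTrace]) -> list[dict]:
    """Append failing inputs to an evaluation dataset, skipping inputs already present."""
    seen = {row["input"] for row in dataset}
    for t in failures:
        if t.input not in seen:
            dataset.append({"input": t.input, "bad_output": t.output})
            seen.add(t.input)
    return dataset


# Usage: two low-scoring answers to the same question become one new test case.
traces = [
    ProductionTrace("How do I reset my password?", "Contact support.", 0.3),
    ProductionTrace("What are your hours?", "9am to 5pm, Monday to Friday.", 0.9),
    ProductionTrace("How do I reset my password?", "Try again later.", 0.2),
]
dataset = add_to_dataset([], collect_failures(traces))
```

Deduplicating on the input keeps the dataset from filling up with repeats of the same failing question; the stored `bad_output` can later be annotated with a corrected expected answer.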

📊 Customizable Evaluation Metrics

Align your evaluation criteria with your company’s values using research-backed metrics designed to approach human-level judgment. From RAG systems to chatbots, Confident AI covers a wide range of LLM use cases.
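One way to encode company-specific criteria is a weighted rubric: each criterion is a check on the output, and the score is the weighted share of checks that pass. This sketch is a hypothetical illustration, not a Confident AI metric class. `Criterion`, `RubricMetric`, the example criteria, and the competitor name "Acme" are all made up, and research-backed metrics of the kind described above often rely on an LLM judge rather than simple string checks.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Criterion:
    """One hypothetical rubric item: a name, a weight, and a pass/fail check."""
    name: str
    weight: float
    check: Callable[[str], bool]  # returns True if the output satisfies the criterion


@dataclass
class RubricMetric:
    """Hypothetical custom metric: weighted share of criteria an output satisfies."""
    criteria: list[Criterion]
    threshold: float = 0.8

    def measure(self, output: str) -> float:
        total = sum(c.weight for c in self.criteria)
        passed = sum(c.weight for c in self.criteria if c.check(output))
        return passed / total if total else 0.0

    def passes(self, output: str) -> bool:
        return self.measure(output) >= self.threshold


# Example "brand voice" rubric; the competitor check carries double weight.
brand_voice = RubricMetric(
    criteria=[
        Criterion("polite", 1.0, lambda o: "thank" in o.lower() or "please" in o.lower()),
        Criterion("concise", 1.0, lambda o: len(o.split()) <= 50),
        Criterion("no competitor mention", 2.0, lambda o: "acme" not in o.lower()),
    ],
)
```

Weights let the criteria that matter most to your organization dominate the score, while `threshold` turns the score into the pass/fail signal a test or CI gate needs.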

🧠 Synthetic Dataset Generation

Generate tailored datasets grounded in your knowledge base. Customize output formats, annotate data, and version datasets directly in the cloud.

Use Cases:

Enterprise LLM Optimization

Toyota, Accenture, and Airbus trust Confident AI to benchmark and improve their LLM systems. Whether the goal is reducing costs or improving accuracy, the platform helps enterprises scale AI with confidence.

Developer-Friendly Testing

Developers can integrate Confident AI into their CI/CD pipelines to test LLM systems, compare results across runs, and detect regressions before they reach production.

Real-Time Performance Monitoring

Monitor your LLM applications in production, identify bottlenecks, and gather user feedback to continuously refine your models and datasets.

Conclusion:

Confident AI isn’t just another evaluation tool; it’s a comprehensive platform for building, deploying, and optimizing LLM applications. With its evaluation metrics, CI/CD integrations, and focus on real-world performance, it’s easy to see why companies such as Toyota, Accenture, and Airbus rely on it to safeguard their AI investments.

Ready to take your LLM systems to the next level? Request a demo today.

FAQ:

Q: Is Confident AI open-source?

A: Yes. Confident AI is built on DeepEval, its open-source evaluation framework, which has over 4,400 GitHub stars and 400,000+ monthly downloads.

Q: Can I customize evaluation metrics?

A: Absolutely. Confident AI allows you to align evaluation metrics with your company’s unique values and use cases.

Q: Does it support real-time monitoring?

A: Yes, you can monitor LLM outputs in production, filter unsatisfactory results, and improve datasets for future testing.

Q: Who uses Confident AI?

A: Companies like Toyota, Accenture, and Airbus trust Confident AI to evaluate and optimize their LLM systems.

Q: Can I generate synthetic datasets?

A: Yes, Confident AI lets you generate tailored datasets grounded in your knowledge base, with full customization and annotation capabilities.

Confident AI Alternatives

- Deepchecks: The end-to-end platform for LLM evaluation. Systematically test, compare, and monitor your AI apps from development to production. Reduce hallucinations and ship faster.
- Braintrust: The end-to-end platform to develop, test, and monitor reliable AI applications. Get predictable, high-quality LLM results.
- Evaligo: An all-in-one AI development platform. Build, test, and monitor production prompts to ship reliable AI features at scale and prevent costly regressions.
- Literal AI: Observability and evaluation for RAG and LLMs. Debug, monitor, and optimize performance to ensure production-ready AI apps.
- LiveBench: An LLM benchmark with new questions added monthly from diverse sources and objective answers for accurate scoring, currently featuring 18 tasks in 6 categories, with more to come.