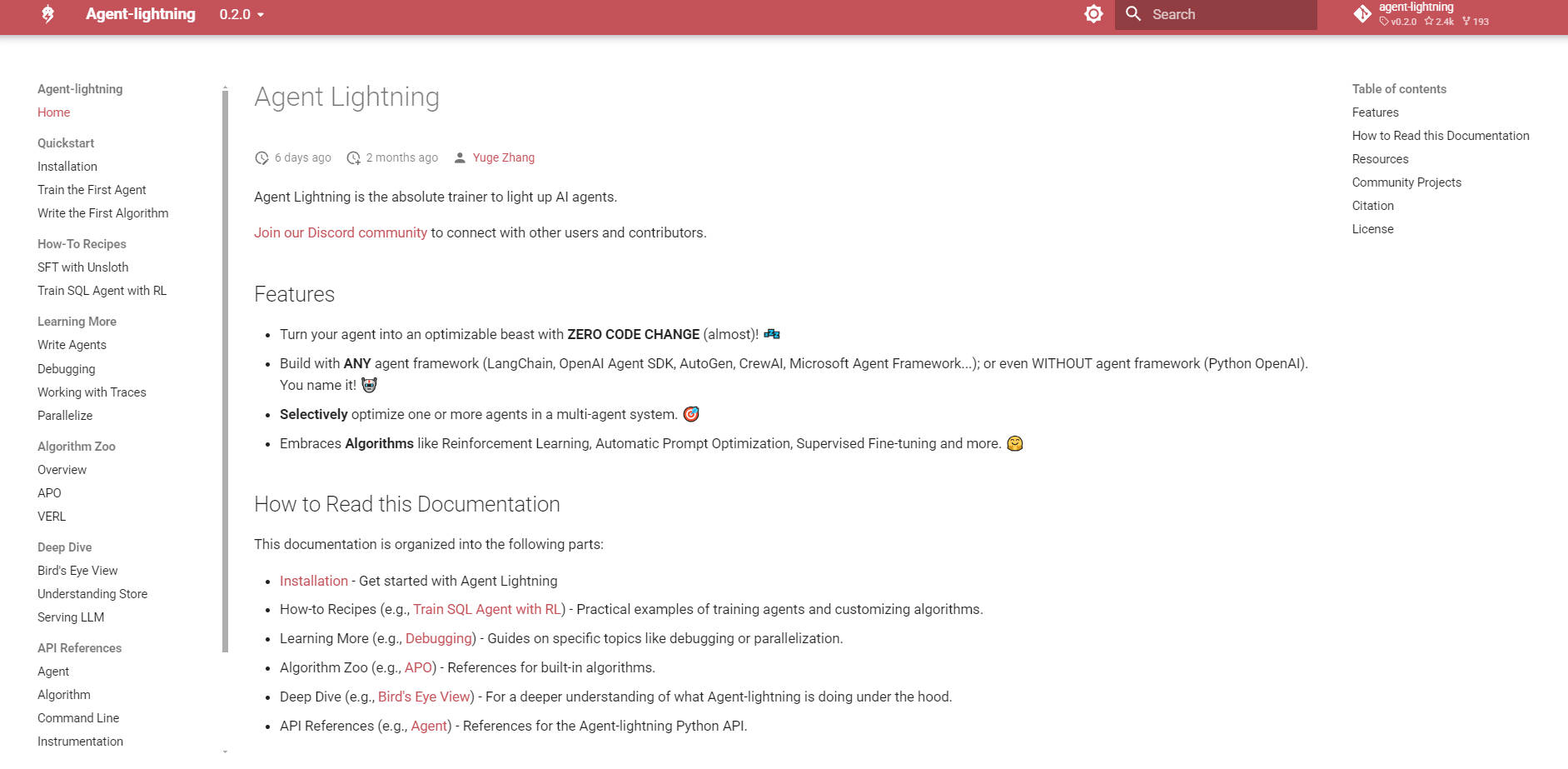

What is Agent Lightning?

AI agents can solve complex, real-world problems, but optimizing their performance in dynamic settings, especially those involving multi-turn interactions, tool use, or proprietary data, remains a significant challenge for developers. Agent Lightning is a flexible, extensible framework designed to bridge the gap between agent workflow development and model optimization. It lets developers and researchers train and tune adaptive, learning-based agents built with any popular orchestration framework.

Key Features

Agent Lightning enables data-driven customization, including model fine-tuning, prompt tuning, and model selection, to improve the performance of deployed agents.

⚡️ Universal Framework Compatibility

Optimize agents built with any existing orchestration framework (e.g., LangChain, AutoGen, OpenAI Agents SDK) without modifying the agent’s core development code. By exposing an OpenAI-compatible API within the training infrastructure, Agent Lightning plugs into your existing agent logic non-intrusively: the agent calls the model being trained exactly as it would call any other OpenAI-compatible endpoint, so the trained model acts as a drop-in replacement.
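As a rough illustration of what "OpenAI-compatible" means in practice, the sketch below builds (but does not send) a chat-completions request using only the Python standard library. The training URL, the model name `policy-model`, and the helper `build_chat_request` are assumptions made for this example, not part of Agent Lightning's documented API; the point is only that switching an agent from a production model to a model under training can come down to changing a base URL.

```python
import json
import urllib.request


def build_chat_request(base_url, model, messages, api_key="not-needed"):
    """Build (without sending) a POST request for an OpenAI-compatible
    chat-completions endpoint. Hypothetical helper for illustration."""
    url = base_url.rstrip("/") + "/chat/completions"
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Assumed endpoint: where a training server might expose the model being
# optimized. The agent code stays the same; only the base URL differs.
TRAINING_URL = "http://localhost:8000/v1"

messages = [{"role": "user", "content": "List all tables in the database."}]
request = build_chat_request(TRAINING_URL, "policy-model", messages)

print(request.full_url)
print(json.loads(request.data)["model"])
```

Because the request shape is identical for either endpoint, agent frameworks that already accept a configurable base URL need no other code changes under this assumption.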

🧩 Decoupled Training Architecture

The system cleanly separates the agent’s execution logic (Lightning Client) from the compute-intensive optimization logic (Lightning Server). This unique architecture ensures high scalability and simplifies maintenance, allowing you to continue developing agent workflows independently while deploying resource-heavy model training on optimized GPU servers.
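The separation of roles can be pictured with a small in-process simulation. This is a sketch under stated assumptions, not the framework's actual protocol: the `Task` and `Rollout` types, the queue-based transport, and the function names are all hypothetical. In a real deployment the two sides would run in separate processes or machines and communicate over the network.

```python
import queue
from dataclasses import dataclass


@dataclass
class Task:
    task_id: int
    prompt: str


@dataclass
class Rollout:
    task_id: int
    output: str
    reward: float


def server_publish(tasks, task_queue):
    """Server side: hand out tasks for agents to attempt."""
    for task in tasks:
        task_queue.put(task)


def client_worker(task_queue, result_queue, agent_fn, reward_fn):
    """Client side: run the agent on each task and report the result.
    The client knows nothing about how training is performed."""
    while True:
        try:
            task = task_queue.get_nowait()
        except queue.Empty:
            break
        output = agent_fn(task.prompt)
        result_queue.put(Rollout(task.task_id, output, reward_fn(task, output)))


def server_collect(result_queue):
    """Server side: gather rollouts to feed into an optimizer."""
    rollouts = []
    while not result_queue.empty():
        rollouts.append(result_queue.get())
    return rollouts


# Toy usage: the "agent" upper-cases its prompt, and the reward checks that.
task_queue, result_queue = queue.Queue(), queue.Queue()
server_publish([Task(0, "select"), Task(1, "insert")], task_queue)
client_worker(
    task_queue,
    result_queue,
    agent_fn=str.upper,
    reward_fn=lambda task, output: 1.0 if output == task.prompt.upper() else 0.0,
)
rollouts = server_collect(result_queue)
```

The design point is that `client_worker` depends only on an agent function and a reward function, so agent code can evolve without touching the optimization side.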

🌍 Optimized for Real-World Agent Complexity

Agent Lightning is specifically engineered to manage inherent complexities in advanced agent applications, including multi-turn interactions, dynamic context/memory management, tool usage, and multi-agent coordination. This focus ensures that model optimization is directly aligned with the agent’s actual deployment behavior and task logic, resulting in meaningful, real-world performance gains.

🛡️ Robust Built-in Error Monitoring

Complex agents frequently encounter execution errors or get stuck during training rollouts. The framework tracks agent execution status, detects failure modes, and reports detailed error types back to the optimization algorithm. This critical feedback loop provides sufficient signal for algorithms to handle edge cases gracefully, maintaining a stable optimization process even with imperfect agents.
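One way to picture this feedback loop is a wrapper that runs a rollout and classifies any failure instead of letting it crash the training job. The status names, the `ToolError` exception, and `monitored_rollout` below are hypothetical and chosen for this example; the framework's real error taxonomy may differ.

```python
import enum
from dataclasses import dataclass
from typing import Callable, Optional


class RolloutStatus(enum.Enum):
    OK = "ok"
    TIMEOUT = "timeout"
    TOOL_ERROR = "tool_error"
    UNKNOWN_ERROR = "unknown_error"


class ToolError(Exception):
    """Hypothetical exception raised when an external tool call fails."""


@dataclass
class RolloutReport:
    status: RolloutStatus
    output: Optional[str] = None
    detail: str = ""


def monitored_rollout(agent_fn: Callable[[str], str], task: str) -> RolloutReport:
    """Run one rollout and convert failures into a typed report."""
    try:
        return RolloutReport(RolloutStatus.OK, output=agent_fn(task))
    except TimeoutError as exc:
        return RolloutReport(RolloutStatus.TIMEOUT, detail=str(exc))
    except ToolError as exc:
        return RolloutReport(RolloutStatus.TOOL_ERROR, detail=str(exc))
    except Exception as exc:  # anything unexpected is still reported
        return RolloutReport(RolloutStatus.UNKNOWN_ERROR, detail=repr(exc))


def good_agent(task):
    return f"answer to {task}"


def stuck_agent(task):
    raise TimeoutError("no response after 30s")


def broken_tool_agent(task):
    raise ToolError("calculator returned NaN")


reports = [
    monitored_rollout(agent, "2+2")
    for agent in (good_agent, stuck_agent, broken_tool_agent)
]
```

An optimization algorithm receiving these reports could, for example, drop timed-out rollouts or assign them a penalty reward rather than treating them as ordinary samples.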

Use Cases

Agent Lightning enables continuous learning and performance improvement across diverse, complex agent systems:

Enhancing Multi-Agent Systems: Train agents within a complex, multi-step workflow—such as a Text-to-SQL system involving separate agents for SQL generation, checking, and rewriting. Agent Lightning can simultaneously optimize the models responsible for distinct roles, significantly improving coordination and the final output accuracy of the entire system.

Improving Retrieval-Augmented Generation (RAG): Optimize agents interacting with large knowledge bases (like complex web searches or internal documents) to generate more effective search queries and better synthesize information based on retrieved content. This directly boosts the agent’s ability to handle complex, multi-hop question answering and reasoning tasks.

Refining Tool Use and Reasoning: Apply Reinforcement Learning to teach the underlying LLM to accurately decide when and how to invoke external tools (such as code interpreters or calculators) and seamlessly integrate the tool's output back into the reasoning chain. This leads to higher accuracy in tasks requiring precise, verifiable computation, such as advanced mathematical problem-solving.

Unique Advantages

Agent Lightning improves optimization results by addressing the fundamental architectural friction between agent development and model training.

Unified Data Interface via MDP: Agent Lightning abstracts complex, multi-turn agent execution into standardized Markov Decision Process (MDP) transition tuples of the form (state, action, reward, next state). This unified interface turns heterogeneous, real-world interaction data into a format that mature single-turn Reinforcement Learning algorithms can consume directly, which simplifies training and improves data efficiency.
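The transition-tuple idea can be made concrete with a small sketch. It assumes, purely for illustration, that a multi-turn execution is logged as an ordered list of (prompt, completion) pairs, that each prompt serves as the state and each completion as the action, and that only the last step receives the task reward. The `Transition` type and `trace_to_transitions` function are hypothetical names, not the framework's API.

```python
from typing import List, NamedTuple, Optional, Tuple


class Transition(NamedTuple):
    state: str                 # what the model saw (the prompt/context)
    action: str                # what the model produced (the completion)
    reward: float              # reward credited to this step
    next_state: Optional[str]  # the next prompt, or None at the end


def trace_to_transitions(
    calls: List[Tuple[str, str]], final_reward: float
) -> List[Transition]:
    """Convert an ordered list of (prompt, completion) model calls into
    MDP transitions, placing the task reward on the final step."""
    transitions = []
    for i, (prompt, completion) in enumerate(calls):
        is_last = i == len(calls) - 1
        next_state = None if is_last else calls[i + 1][0]
        reward = final_reward if is_last else 0.0
        transitions.append(Transition(prompt, completion, reward, next_state))
    return transitions


# Toy two-step trace: generate a query, then check it.
trace = [
    ("Write SQL for: count users", "SELECT COUNT(*) FROM users"),
    ("Check this SQL: SELECT COUNT(*) FROM users", "Looks correct"),
]
transitions = trace_to_transitions(trace, final_reward=1.0)
```

Once every agent run is reduced to records of this shape, the training side no longer needs to know which framework or workflow produced them.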

Zero-Intrusion Optimization: Unlike traditional methods that require embedding training hooks or heavily modifying agent code, Agent Lightning uses a sidecar-based design and an OpenAI-compatible API layer. This separation ensures that the optimization process is non-intrusive, drastically reducing engineering overhead and preventing training-deployment misalignment.

RL Designed for Long-Horizon Tasks: While other fine-tuning methods struggle to assign credit across multi-step reasoning, Agent Lightning builds on RL infrastructure designed for it. This allows the system to perform credit assignment across complex, multi-turn interactions, so the model learns to optimize for the ultimate task success signal rather than just the quality of the immediate response.
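To show what propagating a final success signal back to earlier steps can look like, here is a minimal sketch of one standard technique: discounted return-to-go. This is a generic RL calculation offered as an illustration, not a description of the specific algorithm Agent Lightning uses; the function name and the choice of discount factor are assumptions.

```python
from typing import List


def returns_to_go(rewards: List[float], gamma: float = 1.0) -> List[float]:
    """For each step, compute the discounted sum of rewards from that step
    to the end of the episode, so early steps share in a final reward."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for i in range(len(rewards) - 1, -1, -1):
        running = rewards[i] + gamma * running
        returns[i] = running
    return returns


# A three-step episode where only the last step is rewarded.
step_rewards = [0.0, 0.0, 1.0]

undiscounted = returns_to_go(step_rewards, gamma=1.0)  # [1.0, 1.0, 1.0]
discounted = returns_to_go(step_rewards, gamma=0.5)    # [0.25, 0.5, 1.0]
```

With `gamma=1.0` every step receives the full episode outcome; a smaller `gamma` gives steps closer to the outcome more of the credit.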

Conclusion

Agent Lightning fundamentally changes how developers approach agent deployment, transforming agents from static tools into continuously learning entities. By providing a scalable, zero-intrusion path to data-driven optimization, it significantly lowers the barrier to developing and deploying high-performance, adaptive AI systems. Explore Agent Lightning today and start unlocking the full evolutionary potential of your AI agents.