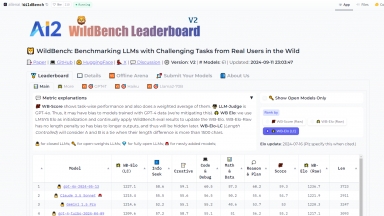

AI2 WildBench Leaderboard

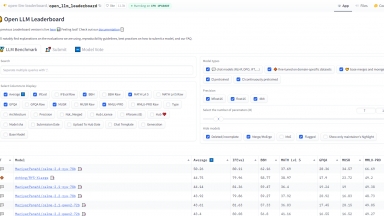

Huggingface's Open LLM Leaderboard

AI2 WildBench Leaderboard

| Field | Value |
| --- | --- |
| Launched | |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | LLM Benchmark Leaderboard, Data Analysis, A/B Testing |

Huggingface's Open LLM Leaderboard

| Field | Value |
| --- | --- |
| Launched | |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | LLM Benchmark Leaderboard, Data Analysis |

AI2 WildBench Leaderboard Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | |
| Country | |
| Month Visit | |

Huggingface's Open LLM Leaderboard Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | |
| Country | |
| Month Visit | |

Estimated traffic data from Similarweb

What are some alternatives?

LiveBench - An LLM benchmark that adds new questions every month from diverse sources and scores them against objective answers. It currently features 18 tasks in 6 categories, with more planned.

ModelBench - Launch AI products faster with no-code LLM evaluations. Compare 180+ models, craft prompts, and test confidently.

BenchLLM by V7 - Evaluate LLM responses, build test suites, and automate evaluations to assess the performance of AI-driven systems.

Web Bench - Web Bench is a new, open, and comprehensive benchmark dataset specifically designed to evaluate the performance of AI web browsing agents on complex, real-world tasks across a wide variety of live websites.

xbench - An AI benchmark that tracks real-world utility and frontier capabilities, using a dual-track system for dynamic evaluation of AI agents.