What is the AI2 WildBench Leaderboard?

WildBench is a benchmarking tool that evaluates the capabilities of large language models (LLMs) on a diverse set of challenging tasks drawn from real-world user interactions. Because the tasks reflect how people actually use these models, the results show how well an LLM handles the nuances of human language and context, and where its strengths and weaknesses lie.

Key Features

Real-World Task Simulation: WildBench uses tasks collected from WildChat, a vast dataset of human-GPT interactions, ensuring that evaluations reflect genuine user scenarios.

Diverse Task Categories: With 12 categories of tasks, WildBench covers a wide array of real-user scenarios and keeps a more balanced distribution across categories than traditional benchmarks typically do.

Comprehensive Annotations: Each task includes detailed annotations such as secondary task types and user intents, offering a deeper level of insight for response assessments.

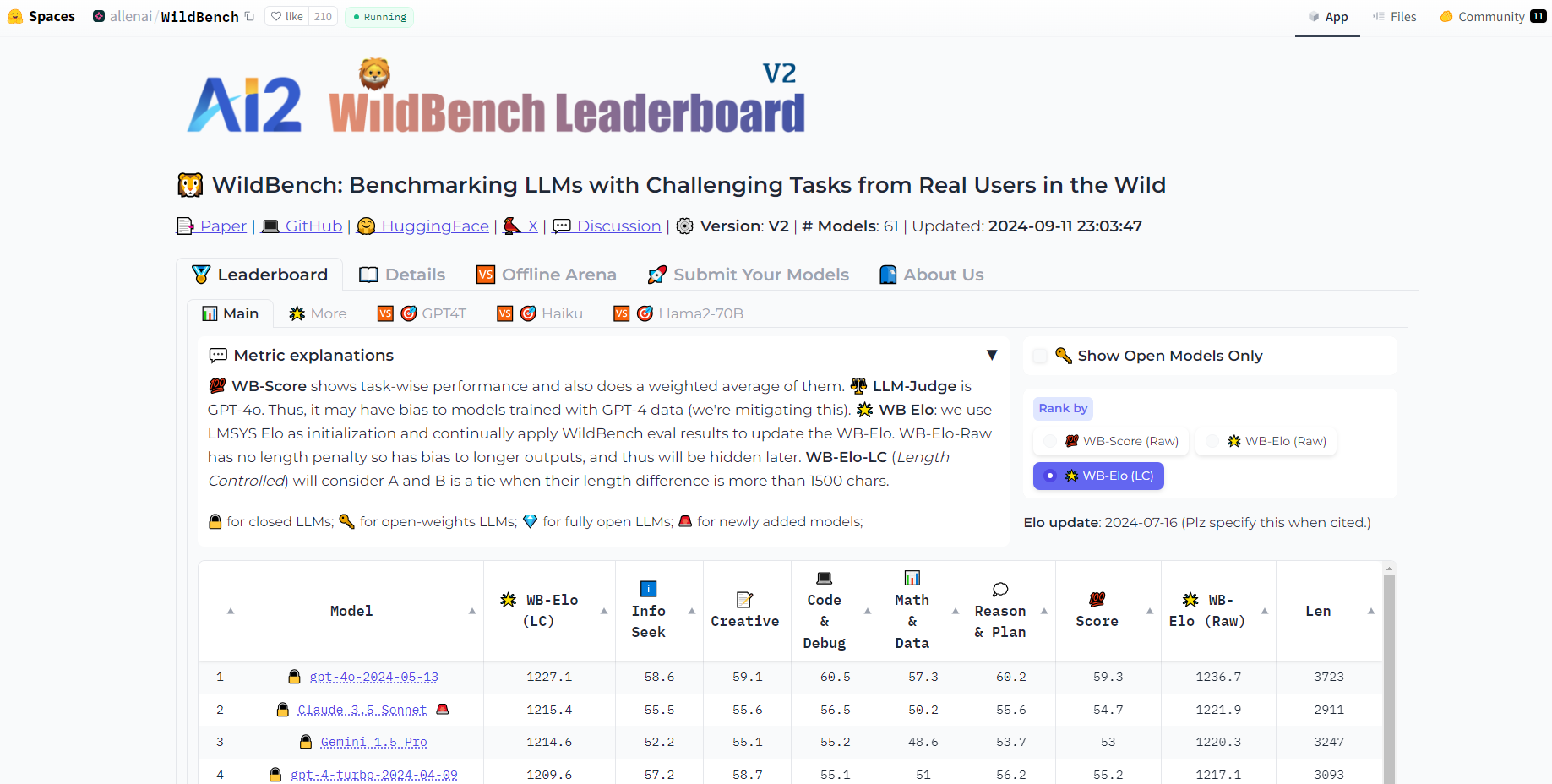

Innovative Evaluation Metrics: WildBench employs a checklist-based scoring system with two metrics: a WB Score for assessing an individual model and a WB Reward for comparing one model against another.

Length Bias Mitigation: To ensure fair evaluations, WildBench has introduced a customizable length penalty method that counters the tendency of LLM judges to favor longer responses.
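To make the length penalty more concrete, here is a minimal Python sketch of one way such a penalty could work. It is an illustration under stated assumptions, not WildBench's actual implementation: the verdict labels, the reward values, the character threshold `k`, and the function names are all hypothetical. The assumed rule is that a "slight" win is downgraded to a tie when the winning response is more than `k` characters longer than the losing one.

```python
# Hypothetical mapping from a judge's pairwise verdict (model A vs. model B)
# to a numeric reward for model A. Labels and values are assumptions.
VERDICT_REWARD = {
    "much_better": 1.0,
    "slightly_better": 0.5,
    "tie": 0.0,
    "slightly_worse": -0.5,
    "much_worse": -1.0,
}


def length_penalized_reward(verdict, len_a, len_b, k=500):
    """Return the reward for model A after an assumed length penalty.

    A slight win becomes a tie when the winner's response is more than
    k characters longer than the loser's. Decisive verdicts are unchanged.
    """
    reward = VERDICT_REWARD[verdict]
    if abs(reward) == 0.5:
        # Work out which side the judge preferred.
        if reward > 0:
            winner_len, loser_len = len_a, len_b
        else:
            winner_len, loser_len = len_b, len_a
        if winner_len - loser_len > k:
            return 0.0
    return reward


def mean_reward(comparisons, k=500):
    """Average the penalized rewards over (verdict, len_a, len_b) tuples."""
    if not comparisons:
        raise ValueError("comparisons must not be empty")
    rewards = [length_penalized_reward(v, la, lb, k) for v, la, lb in comparisons]
    return sum(rewards) / len(rewards)
```

In this sketch, a smaller `k` penalizes long responses more aggressively, while a very large `k` effectively switches the penalty off, which is one way the "customizable" aspect could be exposed.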

Use Cases

Model Developers: Enhance the performance of LLMs by identifying their weaknesses through WildBench's comprehensive evaluations.

AI Researchers: Gain new insights into the capabilities of LLMs when faced with the complexities of real-world tasks, informing future research directions.

Enterprise Solutions: Companies can use WildBench to select the most suitable LLMs for customer service, content creation, and other business applications.

Conclusion

WildBench offers a realistic and nuanced way to assess AI language models by grounding evaluation in tasks that real users bring to them. Its practical impact extends across industries, enabling the development of more capable and reliable AI solutions. Discover the true potential of AI with WildBench, where real-world challenges meet cutting-edge AI.