gemma.cpp

gemma.cpp

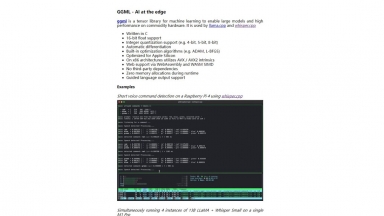

GGML

GGML

gemma.cpp

| Field | Value |
|---|---|
| Launched | |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | |

GGML

| Field | Value |
|---|---|
| Launched | 2023-04-13 |
| Pricing Model | Free |
| Starting Price | |
| Tech used | Fastly, GitHub Pages, Gzip, Varnish |
| Tag | |

gemma.cpp Rank/Visits

| Metric | Value |
|---|---|
| Global Rank | |
| Country | |
| Monthly Visits | |

GGML Rank/Visits

| Metric | Value |
|---|---|
| Global Rank | 3,783,975 |
| Country | United States |
| Monthly Visits | 15,669 |

What are some alternatives?

Google's open Gemma models - Gemma is a family of lightweight, open models built from the research and technology that Google used to create the Gemini models.

CodeGemma - CodeGemma is a series of lightweight open code models from Google, designed for code generation and code comprehension. It is offered in several pretrained variants and is intended to speed up programming tasks and improve code quality.

Google Gemini - Gemini is Google's advanced AI model, built to be multimodal and to handle sophisticated reasoning and coding tasks. Google positions it as a tool that helps researchers, educators, and developers find information, explain complex subjects, and generate high-quality code across a wide range of industries.

Mini-Gemini - Mini-Gemini supports a series of dense and mixture-of-experts (MoE) large language models (LLMs), ranging from 2B to 34B parameters, that handle image understanding, reasoning, and generation simultaneously. The repository is built on LLaVA.